As many people are aware, the best performance from MySQL Cluster is achieved by using the native (C++) NDB API rather than SQL via a MySQL Server. What’s less well known is that you can improve the performance of an NDB API application even further by ‘batching’. This article explains why batching helps and how to do it.

What is batching and why does it help?

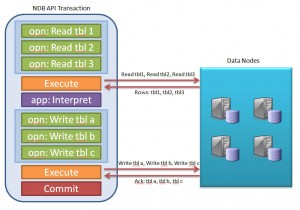

Batching involves sending multiple operations from the application to the Cluster in one group rather than individually; the Cluster then processes these operations and sends back the results. Without batching, each of these operations incurs the latency of crossing the network as well as consuming CPU time on both the application and data node hosts.

By batching together multiple operations, all of the requests can be sent in one message and all of the replies received in another – thus reducing the number of messages and hence the latency and CPU time consumed.
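To make the saving concrete, here is a minimal back-of-the-envelope sketch in C++. It is not part of the NDB API: the `RoundTripCost` struct and both functions are hypothetical names, and the model assumes that every message exchange costs one fixed round trip regardless of how many operations it carries. Real messages grow with the batch size, so treat the numbers as an upper bound on the benefit rather than a measurement.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical cost model for illustration only; not part of the NDB API.
struct RoundTripCost {
    std::size_t messages;  // requests plus replies crossing the network
    double latency_ms;     // total time spent waiting on round trips
};

// Without batching: one request and one reply per operation.
RoundTripCost unbatched_cost(std::size_t num_ops, double round_trip_ms) {
    return {2 * num_ops, num_ops * round_trip_ms};
}

// With batching: one request and one reply per batch of up to batch_size
// operations. Assumes the round-trip time does not depend on batch size.
RoundTripCost batched_cost(std::size_t num_ops, std::size_t batch_size,
                           double round_trip_ms) {
    assert(batch_size > 0);
    // Round up so that a partially filled final batch is still counted.
    std::size_t batches = (num_ops + batch_size - 1) / batch_size;
    return {2 * batches, batches * round_trip_ms};
}
```

Under these assumptions, ten operations sent individually cost twenty messages and ten round trips, while the same ten operations sent as a single batch cost two messages and one round trip.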

How to use batching with the MySQL Cluster NDB API

The principle is that you batch together as many operations as you can, execute them together and then interpret the results. After interpreting the results, the application may then decide to send in another batch of operations.

An NDB API transaction consists of one or more operations where each operation (currently) acts on a single table and could be a simple primary key read or write or a complex table scan.

An operation is not sent to the Cluster at the point where it is defined. Instead, the application must explicitly request that all operations defined within the transaction so far be executed; only then does the NDB API send the batch of operations to the data nodes for processing. The application may ask for the transaction to be committed as part of that request. Alternatively, it may ask for the transaction to be held open so that it can analyse the results of the first batch, use that information to define a second batch of operations, and commit the transaction when it executes that second batch.
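The define-then-execute pattern can be illustrated with a small stand-in class. This is only a sketch: `MockTransaction`, `defineOperation` and the counters are hypothetical names invented for this example and are not the real `NdbTransaction` interface. The class simply queues operations locally and counts one round trip each time the queue is flushed.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a transaction; NOT the real NdbTransaction class.
class MockTransaction {
public:
    enum class ExecType { NoCommit, Commit };

    // Defining an operation only queues it locally; nothing is sent yet.
    void defineOperation(const std::string& description) {
        assert(!committed_);
        pending_.push_back(description);
    }

    // Flushes every pending operation as one batch (one simulated round
    // trip) and returns how many operations that batch contained.
    std::size_t execute(ExecType type) {
        assert(!committed_);
        std::size_t sent = pending_.size();
        pending_.clear();
        ++roundTrips_;
        if (type == ExecType::Commit) {
            committed_ = true;  // the transaction ends with this batch
        }
        return sent;
    }

    std::size_t pendingCount() const { return pending_.size(); }
    std::size_t roundTrips() const { return roundTrips_; }
    bool committed() const { return committed_; }

private:
    std::vector<std::string> pending_;
    std::size_t roundTrips_ = 0;
    bool committed_ = false;
};
```

Defining two reads, executing with `NoCommit`, then defining two updates and executing with `Commit` results in four operations but only two simulated round trips, which is the shape of the transaction this article describes.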

The following code sample shows how this can be implemented in practice (note that the application logic and all error handling have been omitted).

const NdbDictionary::Dictionary* myDict= myNdb.getDictionary();

const NdbDictionary::Table *myTable1= myDict->getTable("tbl1");

const NdbDictionary::Table *myTable2= myDict->getTable("tbl2");

NdbTransaction *myTransaction= myNdb.startTransaction();

// Read all of the required data as part of a single batch

NdbOperation *myOperation= myTransaction->getNdbOperation(myTable1);

myOperation->readTuple(NdbOperation::LM_Read);

myOperation->equal("ref", asset_num);

NdbRecAttr *myRecAttr= myOperation->getValue("cost", NULL);

NdbOperation *myOperation2= myTransaction->getNdbOperation(myTable2);

myOperation2->readTuple(NdbOperation::LM_Read);

myOperation2->equal("ref", asset_num);

NdbRecAttr *myRecAttr2= myOperation2->getValue("volume", NULL);

myTransaction->execute(NdbTransaction::NoCommit);

// NOT SHOWN: Application logic interprets results from first set of operations

// Based on the data read during the initial batch, make the necessary changes

NdbOperation *myOperation3= myTransaction->getNdbOperation(myTable1);

myOperation3->updateTuple();

myOperation3->equal("ref", asset_num);

myOperation3->setValue("cost", new_cost);

NdbOperation *myOperation4= myTransaction->getNdbOperation(myTable2);

myOperation4->updateTuple();

myOperation4->equal("ref", asset_num);

myOperation4->setValue("volume", new_volume);

myTransaction->execute(NdbTransaction::Commit);

myNdb.closeTransaction(myTransaction);
