There is often confusion as to how it can be claimed that MySQL Cluster delivers in-memory performance while also providing durability (the “D” in ACID). This post explains how that can be achieved as well as how to mix and match scalability, High Availability and Durability.

As an aside, the user can specify specific MySQL Cluster tables or columns to be stored on disk rather than in memory – this is a solution for extra capacity but you don’t need to take this performance hit just to have the data persisted to disk. This post focuses on the in-memory approach.

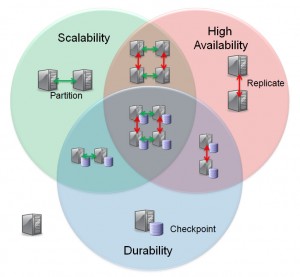

There is a great deal of flexibility in how you deploy MySQL Cluster with in-memory data – allowing the user to decide which features they want to make use of.

The simplest (and least common) topology is represented by the server sitting outside of the circles in the diagram. The data is held purely in memory in a single data node and so if power is lost then so is the data. This is an option if you’re looking for an alternative to the MEMORY storage engine (and should deliver better write performance as well as more functionality). To implement this, your configuration file would look something like this:

config.ini (no Durability, Scalability or HA)

[ndbd default] NoOfReplicas=1 datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata [ndbd] hostname=localhost [ndb_mgmd] hostname=localhost [mysqld] hostname=localhost

By setting NoOfReplicas to 1, you are indicating that data should not be duplicated on a second data node. By only having one [ndbd] section you are specifying that there should be only 1 data node.

To indicate that the data should not be persisted to disk, make the following change:

mysql> SET ndb_table_no_logging=1;

Once ndb_table_no_logging has been set to 1, any Cluster tables that are subsequently created will be purely in-memory (and hence the contents will be volatile).

Durability can be added as an option. In this case, the changes to the in-memory data is persisted to disk asynchronously (thus minimizing any increase in transaction latency). Persistent is implemented using 2 mechanisms in combination:

- Periodically a snapshot of the in-memory data in the data node is written to disk – this is referred to as a Local Checkpoint (LCP)

- Each change is written to a Redo log buffer and then periodically these buffers are flushed to a disk-based Redo log file – this is coordinated across all data nodes in the Cluster and is referred to as a Global Checkpoint (GCP)

This checkpointing to disk is enabled by default but if you’ve previously turned it off then you can turn it back on with:

mysql> SET ndb_table_no_logging=0;

Following this change, any new Cluster tables will be asynchronously persisted to disk. If you have existing, volatile MySQL Cluster tables then you can now make them persistent:

mysql> ALTER TABLE tab1 ENGINE=ndb;

High Availability can be implemented by including extra data node(s) in the Cluster and increasing the value of NoOfReplicas (2 is the normal value so that all data is held in 2 data nodes). The set (pair) of data nodes storing the same set of data is referred to as a node group. Data is synchronously replicated between the data nodes in the node group and so changes cannot be lost unless both data nodes fail at the same time. If the 2 data nodes making up a node group are run on different servers then the data can remain available for use even if one of the servers fails. The configuration file for single, 2 data node node group Cluster would look something like:

config.ini (HA but no scalability)

[ndbd default] NoOfReplicas=2 datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata

[ndbd] hostname=192.168.0.1

[ndbd] hostname=192.168.0.2

[ndb_mgmd] hostname=192.168.0.3

[mysqld] hostname=192.168.0.1

[mysqld] hostname=192.168.0.2

[ndbd default] NoOfReplicas=1 datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata

[ndbd] hostname=192.168.0.1

[ndbd] hostname=192.168.0.2

[ndb_mgmd] hostname=192.168.0.1

[mysqld] hostname=192.168.0.1

[mysqld] hostname=192.168.0.2

[ndbd default] NoOfReplicas=2 datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata

[ndbd] hostname=192.168.0.1

[ndbd] hostname=192.168.0.2

[ndbd]

hostname=192.168.0.3

[ndbd]

hostname=192.168.0.4

[ndb_mgmd] hostname=192.168.0.5

[ndb_mgmd]

hostname=192.168.0.6

[mysqld] hostname=192.168.0.5

[mysqld] hostname=192.168.0.6

[…] configure out those features to provide a pure-memory, single host database as described in “How can a database be in-memory and durable at the same time?“. What’s more if/when you need to add extra capabilities such as ACID transactions, […]

Hi

I have seen the below statement in MySQL cluster document

>>B.10.19: In the event of a catastrophic failure—say, for instance, the whole city loses power and my UPS fails—would I lose all my data?

All committed transactions are logged. Therefore, although it is possible that some data could be lost in the event of a catastrophe, this should be quite limited. Data loss can be further reduced by minimizing the number of operations per transaction. (It is not a good idea to perform large numbers of operations per transaction in any case.)<<

But is this correct?. MySQL cluster transactions commit the data in to the memory and write the data into the log is asynchronous to improve the write performance. So if the total cluster failure happened after the Commit in the memory and befo asynchronous write in to the log file, we will lose that transaction. Am I correct in saying that?

Regards

Anil

Correct – by default, you can lose up to 2 seconds of writes (that haven’t yet made it to the redo log files). Note that from Cluster 7.2 we support splitting the Cluster between sites and so you could split a node group between data centres so that you don’t have an outage if one site loses all power.

Hi Andrew,

I am trying to set up an in-memory table in a mysql cluster. I used

CREATE TABLE City_test_new1 (id INT, INDEX USING HASH (id)) ENGINE=MEMORY as SELECT * FROM City_test;

Will this be replicated across the cluster? How can I verify?

regards,

Lakshmi

If you’re using the MEMORY table then it is not really a Cluster table and will be local to the mysqld where you created. Set the engine to NDBCLUSTER to have it visible from all MySQL Servers.

Andrew.

Hi Andrew,

If I would like to mirror a full disk-based mysql database entirelyto memory (for read purposes only, that is if the system reboots it would have to sync the full DB back to ram again).

– Is this possible with MySQL?

– How would this work? is it a complex venture on uncharted terrain :-)?

– Is there a high price to pay in overhead (ie. write – the variable cost of syncing write on the disk-based db to memory, the initial cost of fully rebuilding a say 5 GB db in memory.)

greetings,

Coen

Thanks for your question. I’m no longer directly involved with MySQL (I’m now with MongoDB) and so I’d suggest that you seek help from http://forums.mysql.com/.

Best Regards, Andrew.

HI Andrew,

have you tried measuring performance of tables created with “SET ndb_table_no_logging=1;” VS “regular” tables? We conducted many read/write tests with large number of keys and got almost identical results. That is strange, since we are expecting memory tables to be significantly faster.

Best regards,

Mika

Note that the writes to disk are asynchronous and so the real saving is that disk IØ doesn’t end up being a bottleneck.