MySQL Cluster is designed to be a High Availability, Fault Tolerant database where no single failure results in any loss of service.

This is however dependent on how the user chooses to architect the configuration – in terms of which nodes are placed on which physical hosts, and which physical resources each physical host is dependent on (for example if the two blades containing the data nodes making up a particular node group are cooled by the same fan then the failure of that fan could result in the loss of the whole database).

Of course, there’s always the possibility of an entire data center being lost due to earthquake, sabotage etc. and so for a fully available system, you should consider using asynchronous replication to a geographically remote Cluster.

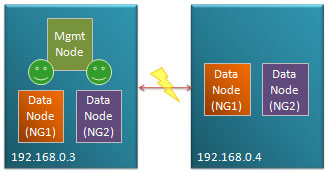

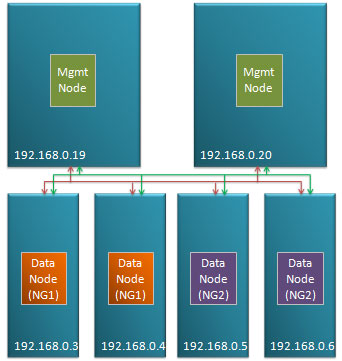

Fig 1. illustrates a typical small configuration with one or more data nodes from different node groups being stored on two different physical hosts and a management node on an independent machines (probably co-located with other applications as its resource requirements are minimal. If any single node (process) or physical host is lost then service can continue.

The basics of MySQL Cluster fault tolerance

Data held within MySQL Cluster is partitioned, with each node group being responsible for 2 or more fragments. All of the data nodes responsible for the same fragments form a Node Group (NG). If configured correctly, any single data node can be lost and the other data nodes within its node group will continue to provide service.

The management node (ndb_mgmd process) is required when adding nodes to the cluster – either when it was initially configured or when a node has been lost and restarted.

A heart-beat protocol is used between the data nodes in order to identify when a node has been lost. In many cases, the community of surviving data nodes can reconfigure themselves but in some cases they need help from the management node – much of this article focuses on how to identify these cases so that you can decide what level of redundancy is required for the management node.

Goals of the algorithm

The algorithm used by MySQL Cluster has 2 priorities (in order):

- Prevent database inconsistencies through “split brain” syndrome

- Keep the database up and running, serving the application

Split brain would occur if 2 data nodes within a node group lost contact with each other and independently decided that they should be master for the fragments controlled by their node group. This could lead to them independently applying conflicting changes to the data – making it very hard to recover the database (which would include undoing the changes that the application believes to have been safely committed). Note that a particular node doesn’t know whether its peer(s) has crashed or if it has just lost its connection to it. If the algorithm is not confident of avoiding a split brain situation then all of the data nodes are shut down – obviously that isn’t an ideal result and so it’s important to understand how to configure your cluster so that doesn’t happen.

The algorithm

If all of the data nodes making up a node group are lost then the cluster shuts down.

When data nodes lose contact with each other (could be failure of a network connection, process or host) then all of the data nodes that can still contact each other form a new community. Each community must decide whether its data nodes should stay up or shut down:

- If the community doesn’t contain at least 1 data node from each node group then it is not viable and its data nodes should shut down.

- If this community is viable and it can determine that it contains enough of the data nodes such that there can be no other viable community out there (one with at least 1 data node from each node group) then it will decide (by itself) to keep up all of its data nodes.

- If the community is viable but there is the possibility of another viable community then it contacts the arbitrator which decides which amongst all viable communities should be allowed to stay up. If the community can not connect to the arbitrator then its data nodes shut down.

In this way, at most one community of data nodes will survive and there is no chance of split brain.

The arbitrator will typically run on a management node. As you’ll from the algorithm and the following examples, the cluster can sometimes survive a failure without needing a running management node but sometimes it can’t. In most of the examples, a single management node is used but you may well decide to have a second for redundacy so that more multiple-point-of-failures can be handled. At any point in time, just one of the management nodes would act as the active arbitrator, if the active one is lost then a majority community of data nodes can hand control over to the other management node.

Note that the management node consumes very little resource and so can be co-located with other functions/applications but as you’ll see from the examples, you would normally avoid running it on the same host as a data node.

Example 1: Simplest cluster – loss of management node followed by a data node

Fig 2. shows a very simple cluster with 3 hosts, the management node running on 192.168.0.19 and then a single node group (NG1) made up of 2 data nodes split between 192.168.0.3 and 192.168.0.4. In this scenario, the management node is ‘lost’ (could be process, host or network failure) followed by one of the data nodes.

The surviving data node forms a community of 1. As it can’t know whether the other data node from NG1 is still viable and it can’t contact the arbitrator (the management node) it must shut itself down and so service is lost.

Note that the 2 nodes could be lost instantaneously or the management node might be lost first followed some time later by a data node.

To provide a truly HA solution there are 2 steps:

- Ensure that there is no single point of failure that could result in 192.168.0.19 and either of the other 2 hosts being lost.

- Run a second management node on a 4th host that can take over as arbitrator if 192.168.0.19 is lost

Example 2: Half of data nodes isolated but management node available

In Fig 3. host 192.168.0.3 and its 2 data nodes remains up and running but becomes isolated from the management node and the other data nodes. 2 communities of connected data nodes are formed. As each of these communities are viable but recognize that there could be another viable surviving communitycontain a data node from each node group, they must defer to the management node. As192.168.0.3 has lost it’s connection to the management node, the community of data nodes hosted there shut themselves down. The community hosted on 192.168.0.4 can contact the management node which as it’s the only community it can see, allows its data nodes to stay up and so service is maintained.

.

.

.

Example 3: Half of data nodes isolated and management node lost

The scenario shown in Fig 4. builds upon Example 2 but in the case, the management node is lost before one of the data node hosts loses its connection to the other.

In this case, both communities defer to the management node but as that has been lost they both shut themselves down and service is lost.

Refer to Example 1 to see what steps could be taken to increase the tolerance to multiple failures.

.

.

.

.

.

Example 4: Management node co-located with data nodes

Fig. 4 shows a common, apparent short-cut that people may take, with just 2 hosts available hosting the management node of the same machine as some of the data nodes. In this example, the connection between the 2 hosts is lost. As each community is viable they each attempt to contact the arbitrator – the data nodes on 192.168.0.3 are allowed to stay up while those on 192.168.0.4 shut down as they can’t contact the management node.

However this configuration is inherently unsafe, if 192.168.0.3 failed then there would be a complete loss of service as the data nodes on 192.168.0.4 would form a viable community but be unable to confirm that they represent the only viable community.

It would be tempting to make this more robust by running a second management node on 192.168.0.4 – in that case, when each host becomes isolated from the other, the data nodes local to the management node that’s the current arbitrator will stay up however if the entire host on which the active arbitrator failed then you would again lose service. The management node must be run on a 3rd host for a fault-tolerant solution.

Example 5: Odd number of data node hosts

Fig 6. shows a configuration running the management node on the same host as some of the data nodes does provide a robust solution.

In this example 192.168.0.3 becomes isolated its data nodes form a community of 2 which doesn’t include a data node from NG2 and so they shut themselves down. 192.168.0.4 and 192.168.0.19 are still connected and so they form a commiunity of 4 data nodes; they recognize that the community holds all data nodes from NG2 and so there can be no other viable community and so they are kept up without having to defer to the arbitrator.

Note that as there was no need to consult the management node, service would be maintained even if it was the machine hosting the management node that became isolated.

.

.

Example 6: Loss of all data nodes in a node group

Fig 7. illustrates the case where there are multiple data node failures such that all of the data nodes making up a node group are lost. In this scenario, the cluster has to shut down as that node group is no longer viable and the cluster would no longer be able to provide access to all data.

.

.

.

.

.

.

Example 7: Loss of multiple data nodes from different node groups

Fig 8. is similar to Example 6 but in this case, there is still a surviving data node from each node group. The surviving data node from NG1 forms a community with the one from NG2. As there could be another viable community (containing a data node from NG1 and NG2), they defer to the management node and as they form the only viable community they’re allowed to stay up.

.

.

.

.

.

.

Example 8: Classic, fully robust configuration

Fig 9. shows the classic, robust configuration. 2 independent machines both host management nodes. These are in turn connected by 2 independent networks to each of the data nodes (which are in turn all connected to each other via duplicated network connections).

Excellent article thanks.

Can you point me at a link that describes how to configure nodegroups ? For example, how exactly do you set up the configuration shown in Fig. 3 ?

In example 7, should’n it read

“The surviving data node from NG2 forms a community with the one from NG1” ?

Thanks for pointing out the typo in example 7 – I’ve fixed it now.

You can take a look at http://www.clusterdb.com/mysql-cluster/deploying-mysql-cluster-over-multiple-hosts/ for an example of how to configure a node group. In that example, the node group is defined implicitly…

By setting noofreplicas=2 in config.ini I specify that there should be two data nodes in each node group.

I then define [ndbd] sections in config.ini, for each one specifying which host it should run on. The bit that’s implicit is that Cluster looks at the order of those [ndbd] sections to decide which data nodes to place in which node groups – as there were only 2 specified, they’re both placed into Node Group 0. If there were 4 [ndbd] sections then the first 2 would form NG0 and the second 2 NG1. If there were 6 [ndbd] sections then the fith and sixth sections would form NG2….

If you want to play around with the make up of nodegroups (or when using on-line add-node) there are CREATE NODEGROUP and DROP NODEGROUP commands within the ndb_mgm tool.

When I update my config.ini as you suggest and start the cluster I see;

ndb_mgm> show

Cluster Configuration

———————

[ndbd(NDB)] 4 node(s)

id=2 @10.1.101.23 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0)

id=3 @10.1.101.31 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0, Master)

id=22 @10.1.101.23 (mysql-5.1.34 ndb-7.0.6, no nodegroup)

id=33 @10.1.101.31 (mysql-5.1.34 ndb-7.0.6, no nodegroup)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @10.1.101.23 (mysql-5.1.34 ndb-7.0.6)

[mysqld(API)] 2 node(s)

id=4 @10.1.101.23 (mysql-5.1.34 ndb-7.0.6)

id=5 @10.1.101.31 (mysql-5.1.34 ndb-7.0.6)

I then execute;

create nodegroup 22,33

and it creates the nodegroup 1 just fine.

Is this correct ? I had thought I could create the 2 nodegroups just from the static configuration without having to issue the create nodegroup command?

Hi Paul,

I’d have expected Node Group 1 to be formed automatically – could you please post your config.ini file and the command lines you used to launch your ndbd processes?

The command line for the ndbd is;

/usr/sbin/ndbd -c 10.1.101.23:1186 –nodaemon –ndb-nodeid=2

and config.ini is;

[ndbd default]

noofreplicas=2

datadir=/var/lib/mysql/data/mysqlcluster/

[ndb_mgmd default]

datadir=/var/lib/mysql/data/mysqlcluster/

[ndbd]

hostname=10.1.101.23

id=2

[ndbd]

hostname=10.1.101.31

id=3

[ndbd]

hostname=10.1.101.23

id=22

[ndbd]

hostname=10.1.101.31

id=33

[ndb_mgmd]

id = 1

hostname=10.1.101.23

[mysqld]

id=4

hostname=10.1.101.23

[mysqld]

id=5

hostname=10.1.101.31

Hi Paul,

I tried building a Cluster with your config.ini and the same command to launch the ndbds and Nodegroups 0 & 1 were created automatically.

Just to check, are you starting up the Cluster from scratch or are you trying to add a new node group to an existing Cluster?

One other thing you can try is that you can explicitly specify NG by adding “NodeGroup=1” to the relevant ndbd sections of config.ini (if you’ve run the Cluster previously then you’ll need to start the ndbds with the –initial option which will also erase any data you’ve added to the database).

Andrew.

I’ve set up MySQL Cluster with the minimal “classic” number of nodes (two data nodes, 1 management node – all 3 on separate servers), but with just a single (same) switch for all 3 servers.

One significant problem is that if you hit the scenario in example 6 (management node is up, both data nodes are down) which can happen if you reboot the switch, MySQL Cluster by default doesn’t attempt to automatically restart the cluster when the switch returns.

Is there a way to configure MySQL Cluster so that when the data nodes can see each other and the management node too (e.g. when the network connection is restored to all 3), then it automatically restarts the cluster? You’d hope it would be something perhaps the management node would do if the data nodes can’t manage this.

My quick and dirty workaround is to check the output of “ndb_mgm -e show” every 30 seconds, parse it and decide whether the cluster needs restarting on one or both of the data nodes. Surely there’s a better solution than this (I know, redundant switches are one possible fix, but I’d still like a software solution too)?

Sorry to drag up an old article, however I was hoping you could tell me how to setup the config.ini to accomodate the two networks in Example 8? I have tried [NDB_MGMD] HostName=IP1,IP2 and setting two nodes on the same host. Both obviously not supported.

Hi Warren,

in your config.ini file you should include:

[ndb_mgmd]

HostName=IP1

[ndb_mgmd]

HostName=IP2

Regards, Andrew.

Hi andrew,

I configed my cluster as fig2. And deploy one sql node on each ndb node. Then there are always warning in cluster log like “[MgmtSrvr] WARNING — Node 40: Node 60 missed heartbeat 4”. But node 40(data node) and node 60(api) are in the same server. Anything wrong in my config? Here is my config.ini:

[ndbd default]

NoOfReplicas= 2

DataMemory= 2000M

IndexMemory= 240M

TimeBetweenWatchDogCheck= 30000

DataDir= /var/lib/mysql-cluster

MaxNoOfConcurrentTransactions:4096

MaxNoOfConcurrentOperations:500000

MaxNoOfLocalOperations:550000

MaxNoOfAttributes: 10000

MaxNoOfTables: 3000

MaxNoOfOrderedIndexes: 3000

MaxNoOfUniqueHashIndexes: 3000

MaxNoOfTriggers: 500

TransactionDeadlockDetectionTimeout=30000

StopOnError=0

ArbitrationTimeout=5000

[ndb_mgmd default]

DataDir= /var/lib/mysql-cluster

[ndb_mgmd]

NodeId=1

HostName= 192.168.39.155

ArbitrationRank: 1

[ndbd]

NodeId= 30

HostName= 192.168.35.7

[ndbd]

NodeId= 40

HostName= 192.168.35.8

[mysqld]

NodeId= 50

HostName= 192.168.35.7

#ArbitrationRank=2

[mysqld]

NodeId= 60

HostName= 192.168.35.8

#ArbitrationRank=2

[mysqld]

NodeId= 70

[mysqld]

NodeId= 80

[tcp default]

PortNumber= 55555

SendBufferMemory=8M

ReceiveBufferMemory=8M

Kizashi,

not sure why the default mysqld->data node heartbeat interval is occasionally being exceeded but you can try changing it from the default (1500 ms) thorugh the HeartbeatIntervalDbApi parameter…. http://dev.mysql.com/doc/refman/5.5/en/mysql-cluster-ndbd-definition.html#ndbparam-ndbd-heartbeatintervaldbapi

Andrew.

Thanks for the link of mysql doc. One more question about fig 2. Does the fig 2’s cluster has the same robust with the fig 1? If cluster configed as fig 1,it need double times memory and disk than fig2. What’s the benefit with two data nodes on each host?

Thanks again.

kizashi,

> Thanks for the link of mysql doc. One more question about fig 2. Does the fig 2’s cluster has the same robust with the fig 1?

Yes – both can survive tghe loss of any one of the hosts,

>If cluster configed as fig 1,it need double times memory and disk than fig2. What’s the benefit with two data nodes on each host?

This is less useful from Cluster 7.2. Prior to Cluster 7.0 a data node (ndbd) was always single threaded and so on multi-core machines you might have multiple data nodes to exploit the available cores. From 7.0 the ndbmtd could exploit up to 8 cores and in 7.2 it increased to ~50 cores.

Regards, Andrew.

Hi, what if only the back-to-back connection of the data nodes fail?

I have recently run a test where I disconnect the back to back connection, and it resulted in a slip-brain (i have 2 management nodes on seperate machines, and 2 data nodes on seperate machines, a total of 4 machines).

I run a query and it was returning different results, thus I noticed that they are split-brained.

How can this be avoided? shouldn’t the arbitrators have noticed this? how will they know that the communication between the data nodes have failed?

Thanks.

Hi Keith,

If the data nodes cannot communicate with each other then the heartbeats will fail and both data nodes would realise that they had become isolated from the other. In this case, each would realise that it made up exactly half of the Cluster and so would attempt to contact the arbitrator to see if they should stay up or shut down. The arbitrator should select one of the data nodes – tell that one to stay up and tell the other to shut down.

Have you checked if you still have 2 (angel + real process) ndbmtd (or ndbd) processes running on both hosts (if not then one has correctly shut down)? What’s the output from “ndb_mgm -e show”?

Andrew.

Hi All,

Thanks for this comment. I just want to know How can we use mysql cluster with Hardware Load balancer.

Please help me to do this

Thanks

Ashok,

just have load ballancer distribute the queries evenly accross all of the available MySQL Servers.

Andrew.

Default value of HeartbeatIntervalDbDb was set 5000 in 7.2 while it was 1500 in earlier releases. This seems to be significant difference. This will increase the cluster outage in case of data node failure.

Dinesh,

obviously, you can change any of these settings if you’re not happy with the default.

Best Regards, Andrew.

Hi Andrew,

I have a challenge for you.

We have an enterprise level MySQL cluster and it appears that when the active node fails, a row update is not replicated to the passive node.

The passive node becomes active and is missing the data changes made on the first node before the failure.

My technical staff are telling me that this is inevitable. Is this really the case ?

Thanks,

Jes

Hi Jes,

is this MySQL Cluster or “regular” MySQL with replication?

Andrew.

Dear Admin !

I have a question.

pls help me !

I have 2 datanode,2 management.

when one of 2 datanodes ( datanode 1) fail ( maybe network, datanode 1 shutdown server..)

when datanode 1 start or datanode connected cluster,

how to datanode 1 resync data ?

what process do it ?

thank Admin

When the data node starts back up it loads in the data that has been checkpointed to its local disk; it then receives any subsequent changes from the other data node in the same node group.

Andrew.

tks andrew !

it mean: it will compare checkpointed data in its and other datanode. If it diferences, it will receives data from other datanode ?

But which process allow it does this action ?

dear Admin !

I have a question, pls help me !

my question about timout network.

the transaction timeout ? how long ?

when transaction running, the network error, have timeout.

So when network connected ?

how to sync from another datanode ? that use checkpointed ?

tks admin !

Hi Andrew,

I was wondering about the most resilient setup of a MySQL Cluster in an AWS deployment, which also applies for other similar redundant setups.

It’s obvious that the Data nodes must be placed in different Availability Zones. For the sake of simplicity, lets assume we have only “Zone A” and “Zone B”. All our other infrastructure is redundantly deployed in both Availability zones. The Internet-facing part of this setup, for example web servers, are made redundantly accessible by an Elastic Load Balancer (ELB) by AWS. This ELB could easily be two standard routers running BGP too, so that we’re not bound to the AWS terms here.

We have the option to place the Management node in either “Zone A” or “Zone B”. Lets assume we deployed it in “Zone A”. In the case of a failure, we could end up in the following situation:

– “Zone A” fails completely and is not accessible via Internet

– “Zone B” works OK and is accessible via Internet

However, in our case the Management node happened to be in “Zone A”. This will shutdown the Data nodes in “Zone B” because they have no quorum and can’t talk to the Management node either. So we end up in the unfortunate situation, where we have enough Data nodes to continue operation but shutdown the wrong redundant group of Data nodes. Note: Even if we had a redundant Management node in “Zone B”, it won’t be promoted because we lack quorum.

There is only one way to know which redundant group of Data nodes to shutdown in such a split-brain situation. The Management node must reside outside of the two Availability Zones, somewhere in Internet. This way, whichever Availability Zone can still talk to it must stay alive, and the other dead or split-brain zone must be shutdown.

Does this make sense — placing the Management node outside the private networks? Or the network latency will be a show-stopper here? Or we have too much security implications because Data and Management nodes will talk via a non-encrypted channel over the Internet?

Your comment is appreciated.

Hi Ivan,

I agree that for the best HA, the management node should not be in either Zone A or Zone B as if the zone containing the management node should completely fail the data nodes in the other zone would shut down. If there’s a Zone C you can use or something completely external from the zones then that would let you survive the loss of an entire zone.

Andrew.

Dear Admin !

pls help me!

I have 2 server install mysql cluster.

Ram: 15gb.

But i cannt config datamemory.

i set: datamemory=8G and indexmemory=4G

but when i check:

Node 1: Data usage is 93%(153752 32K pages of total 163840)

Node 1: Index usage is 47%(62469 8K pages of total 131104)

Node 2: Data usage is 26%(69233 32K pages of total 262144)

Node 2: Index usage is 5%(28087 8K pages of total 524320)

the usage is very high, i want decrease it, but i dont know how i do ?

pls help me !

tks Admin

You could consider moving some of the tables/columns onto disk (rather than it all being held in RAM); instructions can be found here.

Usual rules also apply are you using columns that are too large; do you have indexes that aren’t being used; delete old rows; run

OPTIMIZE TABLE….Best Regards, Andrew.

tks Andrew.

But i have some question.

My db was alter to ndb engine.

I dont create tablespace, not store in disk.

So i want alter this to store disk.

how can i do it online, because application still connect it.

The first thing would be to create the new table space.

You can change from memory to disk using the ALTER TABLE command but I confess that I haven’t tested that it’s an on-line operation.

Andrew.

Hello,

I have 3 mysqld nodes.

[mysqld(API)] 3 node(s)

id=30 @192.168.0.52 (mysql-5.6.19 ndb-7.3.6)

id=31 @192.168.0.56 (mysql-5.6.19 ndb-7.3.6)

id=32 @192.168.0.57 (mysql-5.6.19 ndb-7.3.6)

I was restored stored procedure on “id=30” node. But, when i checked id=31 and 32 there not any stored procedure are present.

Please let me how can we synchronize stored procedure automatically in mysql-cluster.

Thanks,

Bhairav

Stored procedures are local to each mysqld and so you would need to create each one 3 times.

Andrew.

Hello Andrew,

Is there any another way to synchronize stored procedure automatically ?

Because this is much

Hello Andrew,

Thanks for your reply..

Please let us know, If is there any other workaround to synchronize stored procedure automatically ?

Thanks,

Bhairav

Hello Sir,

I am getting below error while changing table storage engine.

[ERROR] NDB Binlog: Skipping locally defined table ‘Test.SRLSchema’ from binlog schema event ‘ALTER TABLE Test.SRLSchema ENGINE = NDBCLUSTER’ from node 33.

Thanks,

Daya

[…] Can I colocate MySQL Cluster data nodes with MySQL Servers in order use all posible resources? Yes, you can co-locate data nodes and MySQL Servers. Note that the management node (ndb_mgmd) should not run on the same machine as any of the data nodes – read this post for details on how to deploy MySQL Cluster nodes for High Availability […]

Hello, Sir.

I’m wondering about a scenario similar to Example 5 — only that all 3 servers have both management and data nodes.

Does this still benefit from the loss of any single server?

If the server failed that contained the active arbiter, would one of the management nodes on the other 2 servers automatically become the active arbiter?

Thank you for your time!

Best Regards,

Joe

Joe,

If the arbiter failed at the same time as half of the data nodes then the Cluster would shut down.

Thank you for the response. I apologize for the delayed response — I was pulled off task for a couple of months. Back to the scenario I mentioned — there really isn’t ‘half’ of the data nodes — Each node belongs to the same node group (i.e. 3 replicas).

To go in a bit more depth, there would be 3 physical hosts running hypervisors. each hypervisor would run 2 VMs — 1 VM would run both SQL and Data Nodes. The other VM would run a management node.

It seems to me that the only split brain scenario that can occur follows:

If all hosts/VMs are running fine, and then the host with the arbiter (hostA) becomes network isolated from the other 2 hosts (hostB and hostC) (say network cables disconnected), then we arrive at a split brain b/c:

1) Data node on hostA can still communicate with arbiter (also on hostA) on therefore stays up. However, if all DML/DDL originates from networked clients, then the contents of this data node will effectively remain unchanged.

2) Data nodes on hostB and hostC can no longer detect heartbeat from hostA arbiter but form a majority so they can elect a new aribiter from the remaining management nodes on hostB and hostC and stay up/online. Furthermore, they can accept new DML/DDL commands and consequently remain current.

If this is true, then when network connectivity is restored to hostA (disconnected cables are discovered and reconnected), would MySQL Cluster realize that hostB and hostC actually contained the latest legitimate data ultiamtely bring the data node on hostA current? Would the management node on hostA become the arbiter again?

Thank you for your time and expertise. It is truly appreciated!

Best Regards,

Joe

Hello, thanks for sharing this knowledge.

Maybe this is just a dumb question, but I have to ask it anyway, since this article half cleared my doubts and left only this little thing to clear before implementing a cluster.

Let say I have 5 machines. May I configure 5 management nodes AND 5 data nodes, 1 for each machine?

In this case I’d have:

Machine 1: 1 NDB and 1 MGMT node (id1)

Machine 2: 1 NDB and 1 MGMT node (id2)

…

and so on.

This make sense or is a complete waste of resource or worse, a risk for data? On top of this architecture I would use Keepalived to create a virtual IP to be used to reach the active management node.

Apart from needing an even number of data nodes, that configuration should be fault tolerant. If you had 6 machines, each with a data node and a management node then you could lose 2 machines (providing they weren’t part of the same node group) and the Cluster would stay up. If you lost 3 machines (again not 2 from the same node group) then the Cluster would stay up if the management node that is currently acting as the arbiter wasn’t on one of the failed machines. Note that only 1 management node is able to act as the aribter at any one time and so running more than 2 doesn’t normally add a lot of value.

Andrew.

“Apart from needing an even number of data nodes…”

I assume you said this because 5 is not a valid value for NoOfReplicas… if I’m incorrect, can you please explain the even number of data nodes constraint?

Hello, thanks for sharing this knowledge.

I’m a beginner about MySQL and i have a question. As i read, MySQL Cluster store data on RAM. What happened to data when all server failed ? We lost all data? Can you explaint for me ? Thanks so much

Good question – this post explains all 🙂

Hi Andrew, thanks for your answer

Can you tell me know whether i have to install mysql-server-5.6 befor i install mysql cluster 7.4 ?

I readed configuration guide and setup mysql cluster like this link. On management node, i SHOW and result like i read but i can’t creat database or table

https://dev.mysql.com/doc/refman/5.6/en/mysql-cluster-install-first-start.html

You should use the MySQL Server that comes as part of the MySQL Cluster package.

Hello Andrew,

I have 2 sites, and I’m running a data node and a mgmt node in each site (4 servers total). If one site loses connectivity from the other, how would the non-arbiter mgmt node know that it should not become arbiter and therefore allow its data node to keep running in a split-brain scenario?

As there isn’t a majority of nodes available (and speaking to each other) a new arbiter cannot be elected.

Andrew.

Hi Andrew,

Can I set up a cluster with one data node and one mgmt node?

I know his won’t provide HA, but still can I?

And what if that data node fails?

Can I restart the cluster without –initial option?

It’s not something that’s tested but it will work. If you restart the data nose (without –initial) then it will load the disk copy of the data into RAM.

Nice article, since ndbcluster data is stored in RAM , is the data lost/preserved when the server is powered off and powered again ?

Thank you

There are periodic snapshots taken and writes are asynchronously checkpointed to disk so you have all but the last second or so of data on disk. Of course, the other node from the node group also has all of the data.

Hello ,

Thanks for really nice and very helpful article.

Assume we have a Linux on which MySQL daemon is running and some data are inserting into MySQL Server. therefore, if the connection between SQL node and data node have lost, the data will continue to inserting into MySQL tables?!

If the table in question is using the MySQL Cluster storage engine (

ENGINE = NDBwhen creating the table) then all of the data is stored in the data nodes and so if the mysqld loses contact with the data nodes then the table can not be read from or written to.Thanks. Great help.

Is there any solution to inserting data into MySQL server in order to prevent data losing until the connection restore again?!

The only thing I can think of is to start writing your data into an INNODB table – that is still stored locally in the mysqld (and so of course, can’t be seen by ay of the other mysqlds).

Hello,

I would like to know if we can install the three nodes (SQL, Data and MGMT) on the same server.

Here are the two scenarios I am currently evaluating:

In this scenario, we have 6 servers. The installation is done in a cloud environment AWS.

Availability zone 1 Availability zone 2

SQL Node 1 SQL Node 2

Data Node 1 — replication — Data Node 2

MGMT node 1 MGMT node 2

In this scenario, we have 2 servers. The installation is done in a cloud environment AWS.

Availability zone 1 Availability zone 2

SQL +Data + MGMT Nodes 1 SQL +Data + MGMT Nodes 2

1 server (= 1 instance EC2) 1 server (= 1 instance EC2)

I read that it was not recommended to install the nodes on the same server but I did not find an explanation on why it should not be done especially in the type of scenario above.

For information, I am not a DBA.

Thank you for your help.

You can run multiple nodes on the same server (assuming there are enough resources) but if you have just 2 servers then the whole cluster will shutdown if one of the servers is lost (as there must be at least half of the data nodes present in order to stay up).

To be sure, on one EC2 instance (assuming I have enough resources) I can install the SQL node + the Data node + the MGMT node.

On another EC2 instance (assuming I have enough resources) I can install the SQL node + the Data node + the MGMT node.

So I have two instances that are part of the same node group. My cluster is active / active.

If the first instance or one of the nodes (eg. Data node) installed on it crashes then I have no loss of service because instance 2 is still active. Right?

In the MySQL documentation, it is written that this type of installation is acceptable for a development environment but is not recommended for a production environment. I would like to understand why because I see no single point of failure.

Thanks again for your precious help.

Excellent article, which helped me understand why my cluster shuts down when losing 1 node group. I figured one surviving node group held all the data, and they replicated between each group. Wrong.

I realized after reading that I painted myself into a corner. I have put 2 ndb nodes from a single node group (#1) on one vmware server. If the vmware server goes down, I lose that entire node group (#1). The remaining node group (#0) residing on separate physical servers knows it’s lost both members of the node group #1, and therefore shuts down.

My question is, can I safely reassign the ndb nodes so that only one of the VMware hosted nodes is in group #1, and the other in #0? Or will attempting to reassign the ndb nodes to different node groups cause a big problem?

Thanks for any input you can provide.

Hi Andrew,

I can’t find any documentation on changing nodegroup assignments, and nothing but crickets over on the mysql-ndb IRC channel. Do you have any information on my question above?

Thank you

Thanks for your question but I’m afraid that I no longer work for Oracle (I’m now working for MongoDB) and so am not current on MySQL technology. I’d suggest checking the MySQL Cluster forum.

Thanks for your question but I’m afraid that I no longer work for Oracle (I’m now working for MongoDB) and so am not current on MySQL technology. I’d suggest checking the MySQL Cluster forum.