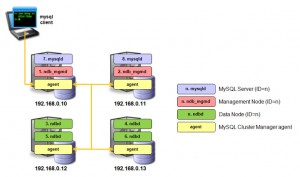

Oracle have just announced that MySQL Cluster Manager 1.2 is Generally Available. For anyone not familiar with MySQL Cluster Manager (MCM), it’s a command-line management tool that makes it simpler and safer to manage your MySQL Cluster deployment: use it to create, configure, start, stop and upgrade your cluster, among other tasks.

So what has changed since MCM 1.1 was released?

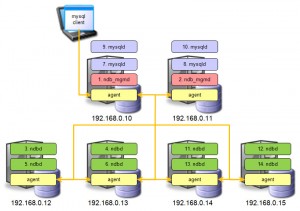

A lot of work has happened under the covers: MCM is now faster and more robust, and it can manage larger clusters. Feature-wise you get the following (note that a couple of these were released early as part of post-GA versions of MCM 1.1):

- Automation of on-line backup and restore

- Single command to start MCM and a single-host Cluster

- Multiple clusters per site

- Single command to stop all of the MCM agents in a Cluster

- More details in the “show status” command

- Ability to perform an “initial” restart of the data nodes, wiping out the database ahead of a restore

A new version of the MySQL Cluster Manager white paper has been released that explains everything that you can do with it and also includes a tutorial for the key features; you can download it here.

Watch this video for a tutorial on using MySQL Cluster Manager, including the new features:

Using the new features

Single command to run MCM and then create and run a Cluster

A single-host cluster can be created and started with a single command, which makes it an easy way to start experimenting with MySQL Cluster:

```
billy@black:~$ mcm/bin/mcmd --bootstrap
MySQL Cluster Manager 1.2.1 started
Connect to MySQL Cluster Manager by running "/home/billy/mcm-1.2.1-cluster-7.2.9_32-linux-rhel5-x86/bin/mcm" -a black.localdomain:1862
Configuring default cluster 'mycluster'...
Starting default cluster 'mycluster'...
Cluster 'mycluster' started successfully
ndb_mgmd black.localdomain:1186
ndbd black.localdomain
ndbd black.localdomain
mysqld black.localdomain:3306
mysqld black.localdomain:3307
ndbapi *
Connect to the database by running "/home/billy/mcm-1.2.1-cluster-7.2.9_32-linux-rhel5-x86/cluster/bin/mysql" -h black.localdomain -P 3306 -u root
```
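If you want to script against the bootstrap output, for instance to find which mysqld ports the regular mysql client can connect to, a minimal Python sketch like the one below can pull out the process lines. It assumes each process line has the form "<process> <host>[:<port>]", as in the output shown here; the function and type names are hypothetical and not part of MCM.

```python
# Sketch: extract the process list printed by `mcmd --bootstrap`.
# Assumption: process lines look like "<process> <host>[:<port>]";
# every other line of the output is ignored.
from typing import NamedTuple, Optional

KNOWN_PROCESSES = {"ndb_mgmd", "ndbd", "ndbmtd", "mysqld", "ndbapi"}


class Endpoint(NamedTuple):
    process: str
    host: str
    port: Optional[int]


def parse_bootstrap_output(text: str) -> list[Endpoint]:
    endpoints = []
    for line in text.splitlines():
        parts = line.split()
        # Keep only two-field lines that start with a known process type.
        if len(parts) != 2 or parts[0] not in KNOWN_PROCESSES:
            continue
        process, location = parts
        host, sep, port = location.partition(":")
        endpoints.append(Endpoint(process, host, int(port) if sep else None))
    return endpoints


sample = """\
Cluster 'mycluster' started successfully
ndb_mgmd black.localdomain:1186
ndbd black.localdomain
ndbd black.localdomain
mysqld black.localdomain:3306
mysqld black.localdomain:3307
ndbapi *
"""

# The mysqld endpoints are the ones the regular mysql client connects to.
for ep in parse_bootstrap_output(sample):
    if ep.process == "mysqld":
        print(f"mysql -h {ep.host} -P {ep.port} -u root")
```

Data nodes (ndbd) and the "ndbapi *" slot have no port in this output, so their port is recorded as None.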

You can then connect to MCM:

```
billy@black:~$ mcm/bin/mcm
```

Or access the database itself simply by running the regular mysql client.

Extra status information

When querying the status of the processes in a Cluster, you’re now also shown the package being used for each node:

```
mcm> show status --process mycluster;
+--------+----------+-------+---------+-----------+---------+
| NodeId | Process  | Host  | Status  | Nodegroup | Package |
+--------+----------+-------+---------+-----------+---------+
| 49     | ndb_mgmd | black | running |           | 7.2.9   |
| 50     | ndb_mgmd | blue  | running |           | 7.2.9   |
| 1      | ndbd     | green | running | 0         | 7.2.9   |
| 2      | ndbd     | brown | running | 0         | 7.2.9   |
| 3      | ndbd     | green | running | 1         | 7.2.9   |
| 4      | ndbd     | brown | running | 1         | 7.2.9   |
| 51     | mysqld   | black | running |           | 7.2.9   |
| 52     | mysqld   | blue  | running |           | 7.2.9   |
+--------+----------+-------+---------+-----------+---------+
```
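Because the status table is plain text, it is easy to post-process. The Python sketch below is one hypothetical way to do it (the helper names are illustrative, not MCM features): it turns the table into row dictionaries, groups nodes by the new Package column, and lists the running data nodes in each node group. It assumes the column layout shown in this post.

```python
# Sketch: parse the ASCII table printed by `show status --process` and
# summarise it. Assumes the columns shown in this post:
# NodeId, Process, Host, Status, Nodegroup, Package.
from collections import defaultdict

SHOW_STATUS = """\
+--------+----------+-------+---------+-----------+---------+
| NodeId | Process  | Host  | Status  | Nodegroup | Package |
+--------+----------+-------+---------+-----------+---------+
| 49     | ndb_mgmd | black | running |           | 7.2.9   |
| 50     | ndb_mgmd | blue  | running |           | 7.2.9   |
| 1      | ndbd     | green | running | 0         | 7.2.9   |
| 2      | ndbd     | brown | running | 0         | 7.2.9   |
| 3      | ndbd     | green | running | 1         | 7.2.9   |
| 4      | ndbd     | brown | running | 1         | 7.2.9   |
| 51     | mysqld   | black | running |           | 7.2.9   |
| 52     | mysqld   | blue  | running |           | 7.2.9   |
+--------+----------+-------+---------+-----------+---------+
"""


def split_row(line: str) -> list[str]:
    # "| a | b |" -> ["a", "b"]; empty cells are kept as "".
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_mcm_table(text: str) -> list[dict[str, str]]:
    """Turn an mcm-style ASCII table into one dict per data row."""
    rows = [split_row(line) for line in text.splitlines() if line.startswith("|")]
    header, body = rows[0], rows[1:]
    return [dict(zip(header, cells)) for cells in body]


def nodes_by_package(rows):
    """Map each package version to the node IDs that use it."""
    by_pkg = defaultdict(list)
    for row in rows:
        by_pkg[row["Package"]].append(int(row["NodeId"]))
    return dict(by_pkg)


def running_data_nodes_by_group(rows):
    """Map each node group to its running data nodes (possibly empty)."""
    groups = defaultdict(list)
    for row in rows:
        if row["Process"] in ("ndbd", "ndbmtd"):
            ids = groups[row["Nodegroup"]]  # register the group even if none run
            if row["Status"] == "running":
                ids.append(int(row["NodeId"]))
    return dict(groups)


rows = parse_mcm_table(SHOW_STATUS)
print(nodes_by_package(rows))             # {'7.2.9': [49, 50, 1, 2, 3, 4, 51, 52]}
print(running_data_nodes_by_group(rows))  # {'0': [1, 2], '1': [3, 4]}
```

More than one key in the package map would mean that different nodes are running different packages; an empty list for a node group would mean that none of its data nodes is running.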

Simplified on-line backup & restore

MySQL Cluster supports on-line backups (and the subsequent restore of that data); MySQL Cluster Manager 1.2 simplifies the process.

The database can be backed up with a single command (which in turn makes every data node in the cluster back up its data):

```
mcm> backup cluster mycluster;
```

The list backups command shows which backups are available in the cluster:

```
mcm> list backups mycluster;
+----------+--------+--------+----------------------+
| BackupId | NodeId | Host   | Timestamp            |
+----------+--------+--------+----------------------+
| 1        | 1      | green  | 2012-11-31T06:41:36Z |
| 1        | 2      | brown  | 2012-11-31T06:41:36Z |
| 1        | 3      | green  | 2012-11-31T06:41:36Z |
| 1        | 4      | brown  | 2012-11-31T06:41:36Z |
| 1        | 5      | purple | 2012-11-31T06:41:36Z |
| 1        | 6      | red    | 2012-11-31T06:41:36Z |
| 1        | 7      | purple | 2012-11-31T06:41:36Z |
| 1        | 8      | red    | 2012-11-31T06:41:36Z |
+----------+--------+--------+----------------------+
```
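Each backup appears once per data node, so a script that picks a BackupId to restore should first check that every node holds a copy. The Python sketch below shows one way to do that; the notion of a "complete" backup (present on every data node that appears in the listing) and the helper names are assumptions for illustration, not MCM behaviour. The most recent complete backup is chosen by comparing the Timestamp text, which works because these fixed-width ISO-8601 UTC strings sort in time order.

```python
# Sketch: parse `list backups` output and choose a BackupId to restore.
# Assumptions (not MCM behaviour): a backup is "complete" only if every
# data node that appears in the listing holds a copy, and the newest
# complete backup is picked by comparing the Timestamp strings as text.
from collections import defaultdict

LIST_BACKUPS = """\
+----------+--------+--------+----------------------+
| BackupId | NodeId | Host   | Timestamp            |
+----------+--------+--------+----------------------+
| 1        | 1      | green  | 2012-11-31T06:41:36Z |
| 1        | 2      | brown  | 2012-11-31T06:41:36Z |
| 1        | 3      | green  | 2012-11-31T06:41:36Z |
| 1        | 4      | brown  | 2012-11-31T06:41:36Z |
| 1        | 5      | purple | 2012-11-31T06:41:36Z |
| 1        | 6      | red    | 2012-11-31T06:41:36Z |
| 1        | 7      | purple | 2012-11-31T06:41:36Z |
| 1        | 8      | red    | 2012-11-31T06:41:36Z |
+----------+--------+--------+----------------------+
"""


def split_row(line: str) -> list[str]:
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_mcm_table(text: str) -> list[dict[str, str]]:
    rows = [split_row(line) for line in text.splitlines() if line.startswith("|")]
    header, body = rows[0], rows[1:]
    return [dict(zip(header, cells)) for cells in body]


def complete_backups(rows):
    """Return {BackupId: Timestamp} for backups found on every data node."""
    all_nodes = {int(r["NodeId"]) for r in rows}
    nodes_per_backup = defaultdict(set)
    stamp = {}
    for r in rows:
        bid = int(r["BackupId"])
        nodes_per_backup[bid].add(int(r["NodeId"]))
        stamp[bid] = max(stamp.get(bid, ""), r["Timestamp"])
    return {bid: stamp[bid] for bid, nodes in nodes_per_backup.items()
            if nodes == all_nodes}


def restore_command(rows, cluster: str) -> str:
    """Build the restore command (syntax as used in this post) for the newest complete backup."""
    backups = complete_backups(rows)
    if not backups:
        raise ValueError("no backup is present on every data node")
    latest = max(backups, key=lambda bid: (backups[bid], bid))
    return f"restore cluster -I {latest} {cluster};"


rows = parse_mcm_table(LIST_BACKUPS)
print(restore_command(rows, "mycluster"))  # restore cluster -I 1 mycluster;
```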

You may then select which of these backups you want to restore by specifying the associated BackupId when invoking the restore command:

```
mcm> restore cluster -I 1 mycluster;
```

Note that if you need to empty the database of its existing contents before performing the restore, MCM 1.2 adds the --initial option to the start cluster command, which deletes all data from all MySQL Cluster tables.
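Because an initial start deletes all data, the order of operations matters: confirm that the backup you want exists, then wipe, then restore. The Python sketch below only builds the MCM command sequence, and its helper name is hypothetical. The preceding stop cluster step is an assumption here (an initial start is normally applied to a stopped cluster). The other two commands follow the options described in this post.

```python
# Sketch: plan the MCM commands for wiping the database and then
# restoring a chosen backup. The helper name is hypothetical, and the
# leading `stop cluster` step is an assumption, not taken from this post.


def wipe_and_restore_plan(cluster: str, backup_id: int,
                          available_ids: set[int]) -> list[str]:
    # Refuse to plan the wipe unless the backup is known to exist:
    # `start cluster --initial` deletes all MySQL Cluster table data.
    if backup_id not in available_ids:
        raise ValueError(f"backup {backup_id} not found for {cluster}")
    return [
        f"stop cluster {cluster};",
        f"start cluster --initial {cluster};",
        f"restore cluster -I {backup_id} {cluster};",
    ]


for command in wipe_and_restore_plan("mycluster", 1, available_ids={1}):
    print("mcm>", command)
```

The available IDs could come from the BackupId column of list backups; checking them first prevents a typo in the ID from leaving you with an empty database and nothing to restore.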

Stopping all MCM agents for a site

A single command will now stop all of the agents for your site:

```
mcm> stop agents mysite;
```

Getting started with MySQL Cluster Manager

You can fetch the MCM binaries from edelivery.oracle.com and then learn how to use MCM from the MySQL Cluster Manager white paper.

Please try it out and let us know how you get on!