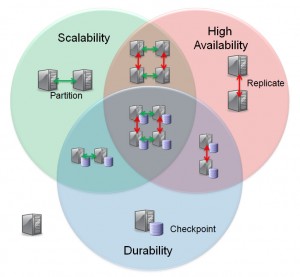

There is often confusion as to how it can be claimed that MySQL Cluster delivers in-memory performance while also providing durability (the “D” in ACID). This post explains how that can be achieved as well as how to mix and match scalability, High Availability and Durability.

As an aside, the user can choose to store specific MySQL Cluster tables or columns on disk rather than in memory – this provides extra capacity, but you don’t need to take this performance hit just to have the data persisted to disk. This post focuses on the in-memory approach.

There is a great deal of flexibility in how you deploy MySQL Cluster with in-memory data – allowing the user to decide which features they want to make use of.

The simplest (and least common) topology is represented by the server sitting outside of the circles in the diagram. The data is held purely in memory in a single data node and so if power is lost then so is the data. This is an option if you’re looking for an alternative to the MEMORY storage engine (and should deliver better write performance as well as more functionality). To implement this, your configuration file would look something like this:

config.ini (no Durability, Scalability or HA)

[ndbd default]
NoOfReplicas=1
datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata

[ndbd]
hostname=localhost

[ndb_mgmd]
hostname=localhost

[mysqld]
hostname=localhost

By setting NoOfReplicas to 1, you are indicating that data should not be duplicated on a second data node. By only having one [ndbd] section you are specifying that there should be only 1 data node.

To indicate that the data should not be persisted to disk, make the following change:

mysql> SET ndb_table_no_logging=1;

Once ndb_table_no_logging has been set to 1, any Cluster tables that are subsequently created will be purely in-memory (and hence the contents will be volatile).

Durability can be added as an option. In this case, the changes to the in-memory data are persisted to disk asynchronously (thus minimizing any increase in transaction latency). Persistence is implemented using 2 mechanisms in combination:

- Periodically a snapshot of the in-memory data in the data node is written to disk – this is referred to as a Local Checkpoint (LCP)

- Each change is written to a Redo log buffer and then periodically these buffers are flushed to a disk-based Redo log file – this is coordinated across all data nodes in the Cluster and is referred to as a Global Checkpoint (GCP)
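The way these two mechanisms combine can be illustrated with a toy model. This is only a sketch in Python, not how the data nodes are actually implemented: the class `ToyDataNode` and its method names are hypothetical, and it simplifies by assuming that a Local Checkpoint captures every change made so far.

```python
class ToyDataNode:
    """Toy model of one data node (illustration only, not how NDB is coded)."""

    def __init__(self):
        self.memory = {}         # live in-memory data, lost on power failure
        self.redo_buffer = []    # in-memory redo log buffer, also lost
        self.disk_snapshot = {}  # last Local Checkpoint written to disk
        self.disk_redo = []      # disk-based redo log file

    def write(self, key, value):
        # The change is applied in memory and logged in the buffer only,
        # so the transaction does not wait for any disk I/O.
        self.memory[key] = value
        self.redo_buffer.append((key, value))

    def global_checkpoint(self):
        # Periodic flush of the redo log buffer to the redo log on disk.
        self.disk_redo.extend(self.redo_buffer)
        self.redo_buffer.clear()

    def local_checkpoint(self):
        # Periodic snapshot of the in-memory data. Simplification: the
        # snapshot covers every change so far, so older redo entries go.
        self.disk_snapshot = dict(self.memory)
        self.disk_redo.clear()
        self.redo_buffer.clear()

    def crash_and_recover(self):
        # Power loss wipes memory; restart loads the snapshot and then
        # replays the redo log on top of it.
        self.redo_buffer = []
        self.memory = dict(self.disk_snapshot)
        for key, value in self.disk_redo:
            self.memory[key] = value


node = ToyDataNode()
node.write("a", 1)
node.local_checkpoint()    # "a" is now in the snapshot
node.write("b", 2)
node.global_checkpoint()   # "b" is now in the redo log on disk
node.write("c", 3)         # still only in memory
node.crash_and_recover()
print(node.memory)         # {'a': 1, 'b': 2}
```

In this toy run only the write made after the last flush of the redo buffer is lost, which is the trade-off for keeping disk writes out of the transaction's path.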

This checkpointing to disk is enabled by default but if you’ve previously turned it off then you can turn it back on with:

mysql> SET ndb_table_no_logging=0;

Following this change, any new Cluster tables will be asynchronously persisted to disk. If you have existing, volatile MySQL Cluster tables then you can now make them persistent:

mysql> ALTER TABLE tab1 ENGINE=ndb;

High Availability can be implemented by including extra data node(s) in the Cluster and increasing the value of NoOfReplicas (2 is the normal value so that all data is held in 2 data nodes). The set (pair) of data nodes storing the same set of data is referred to as a node group. Data is synchronously replicated between the data nodes in the node group and so changes cannot be lost unless both data nodes fail at the same time. If the 2 data nodes making up a node group are run on different servers then the data can remain available for use even if one of the servers fails. The configuration file for a Cluster with a single node group made up of 2 data nodes would look something like:

config.ini (HA but no scalability)

[ndbd default]
NoOfReplicas=2
datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata

[ndbd]
hostname=192.168.0.1

[ndbd]
hostname=192.168.0.2

[ndb_mgmd]
hostname=192.168.0.3

[mysqld]
hostname=192.168.0.1

[mysqld]
hostname=192.168.0.2
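The relationship between the data nodes, NoOfReplicas and node groups can be sketched in a few lines of Python. This is an illustration only: the function name `node_groups` is hypothetical, and it assumes that data nodes are assigned to node groups in the order in which they are listed.

```python
def node_groups(data_nodes, no_of_replicas):
    """Split a list of data nodes into node groups of NoOfReplicas nodes each."""
    if no_of_replicas < 1:
        raise ValueError("NoOfReplicas must be at least 1")
    if not data_nodes or len(data_nodes) % no_of_replicas != 0:
        raise ValueError("number of data nodes must be a multiple of NoOfReplicas")
    # Assumption: consecutive nodes in the list form one node group.
    return [
        data_nodes[i:i + no_of_replicas]
        for i in range(0, len(data_nodes), no_of_replicas)
    ]


# 2 data nodes with NoOfReplicas=2: one node group holding 2 copies of the data.
print(node_groups(["192.168.0.1", "192.168.0.2"], 2))
# [['192.168.0.1', '192.168.0.2']]
```

Each inner list is one node group: every node in it stores the same data, so the group survives as long as at least one of its members is running.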

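Scalability can be added by spreading the data across more than one node group. With NoOfReplicas set to 1 and 2 data nodes, each data node forms its own node group and holds a different part of the data, so there is no High Availability:

config.ini (scalability but no HA)

[ndbd default]
NoOfReplicas=1
datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata

[ndbd]
hostname=192.168.0.1

[ndbd]
hostname=192.168.0.2

[ndb_mgmd]
hostname=192.168.0.1

[mysqld]
hostname=192.168.0.1

[mysqld]
hostname=192.168.0.2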

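Scalability and High Availability can be combined. With NoOfReplicas set to 2 and 4 data nodes, the Cluster has 2 node groups, each storing its part of the data on 2 data nodes:

config.ini (scalability and HA)

[ndbd default]
NoOfReplicas=2
datadir=E:am233268DocumentsMySQL_ClusterMy_Clusterdata

[ndbd]
hostname=192.168.0.1

[ndbd]
hostname=192.168.0.2

[ndbd]
hostname=192.168.0.3

[ndbd]
hostname=192.168.0.4

[ndb_mgmd]
hostname=192.168.0.5

[ndb_mgmd]
hostname=192.168.0.6

[mysqld]
hostname=192.168.0.5

[mysqld]
hostname=192.168.0.6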
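As a quick way to check which combination a given config.ini provides, the sketch below reads the file's text and reports the number of data nodes and node groups. It is a hedged illustration rather than a tool shipped with MySQL Cluster: the function `summarize` and the `ha` and `scalable` labels are this example's own shorthand, it requires NoOfReplicas to be set explicitly in [ndbd default], and it expects one setting per line.

```python
def summarize(config_text):
    """Report data nodes, node groups, HA and scalability for a config.ini."""
    section = None
    data_nodes = 0
    replicas = None
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
            if section == "ndbd":
                data_nodes += 1  # each [ndbd] section is one data node
            continue
        key, _, value = line.partition("=")
        if section == "ndbd default" and key.strip().lower() == "noofreplicas":
            replicas = int(value)
    if replicas is None or replicas < 1:
        raise ValueError("NoOfReplicas must be set in [ndbd default]")
    if data_nodes == 0 or data_nodes % replicas != 0:
        raise ValueError("data nodes must be a multiple of NoOfReplicas")
    groups = data_nodes // replicas
    return {
        "data_nodes": data_nodes,
        "node_groups": groups,
        "ha": replicas > 1,      # every row is stored on more than one node
        "scalable": groups > 1,  # data is partitioned across node groups
    }


example = """
[ndbd default]
NoOfReplicas=2

[ndbd]
hostname=192.168.0.1

[ndbd]
hostname=192.168.0.2
"""
print(summarize(example))
# {'data_nodes': 2, 'node_groups': 1, 'ha': True, 'scalable': False}
```

Durability is not visible in config.ini in this sketch, since in the post it is controlled per table through ndb_table_no_logging.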