Windows Server 2008 R2 Failover Clustering

Oracle has announced support for running MySQL on Windows Server Failover Clustering (WSFC); with so many people developing and deploying MySQL on Windows, this offers a great option to add High Availability to MySQL deployments if you don’t want to go as far as deploying MySQL Cluster.

This post will give a brief overview of how to set things up but for all of the gory details a new white paper MySQL with Windows Server 2008 R2 Failover Clustering is available – please give me any feedback. I will also be presenting on this at a free webinar on Thursday 15th September (please register in advance) as well at an Oracle OpenWorld session in San Francisco on Tuesday 4th October (Tuesday, 01:15 PM, Marriott Marquis – Golden Gate C2) – a good opportunity to get more details and get your questions answered.

It sometimes surprises people just how much MySQL is used on Windows, here are a few of the reasons:

- Lower TCO

- 90% savings over Microsoft SQL Server

- If your a little skeptical about this then try it out for yourself with the MySQL TCO Savings Calculator

- Broad platform support

- No lock-in

- Windows, Linux, MacOS, Solaris

- Ease of use and administration

- < 5 mins to download, install & configure

- MySQL Enterprise Monitor & MySQL WorkBench

- Reliability

- Performance and scalability

- MySQL 5.5 delivered over 500% performance boost on Windows.

- Integration into Windows environment

- ADO.NET, ODBC & Microsoft Access Integration

- And now, support for Windows Server Failover Clustering!

Probably the most common form of High Availability for MySQL is MySQL (asynchronous or semi-synchronous replication) and the option for the highest levels of availability is

MySQL Cluster. We are in the process of rolling out a number of solutions that provide levels of availability somewhere in between MySQL Replication and MySQL Cluster; Oracle VM Template for MySQL Enterprise Edition was the first (

overview,

webinar replay,

white paper) and WSFC is the second.

Solution Overview

MySQL with Windows Failover Clustering requires at least 2 servers within the cluster together with some shared storage (for example FCAL SAN or iSCSI disks). For redundancy, 2 LANs should be used for the cluster to avoid a single point of failure and typically one would be reserved for the heartbeats between the cluster nodes.

MySQL with Windows Failover Clustering requires at least 2 servers within the cluster together with some shared storage (for example FCAL SAN or iSCSI disks). For redundancy, 2 LANs should be used for the cluster to avoid a single point of failure and typically one would be reserved for the heartbeats between the cluster nodes.

The MySQL binaries and data files are stored in the shared storage and Windows Failover Clustering ensures that at most one of the cluster nodes will access those files at any point in time (hence avoiding file corruptions).

Clients connect to the MySQL service through a Virtual IP Address (VIP) and so in the event of failover they experience a brief loss of connection but otherwise do not need to be aware that the failover has happened other than to handle the failure of any in-flight transactions.

Target Configuration

Target Configuration

This post will briefly step through how to set up and use a cluster and this diagrams shows how this is mapped onto physical hardware and network addresses for the lab used later in this post. In this case, iSCSI is used for the shared storage. Note that ideally there would be an extra subnet for the heartbeat connection between ws1 and ws3.

This is only intended to be an overview and the steps have been simplified refer to the white paper for more details on the steps.

Prerequisites

- MySQL 5.5 & InnoDB must be used for the database (note that MyISAM is not crash-safe and so failover may result in a corrupt database)

- Windows Server 2008 R2

- Redundant network connections between nodes and storage

- WSFC cluster validation must pass

- iSCSI or FCAL SAN should be used for the shared storage

Step 1 Configure iSCSI in software (optional)

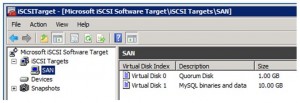

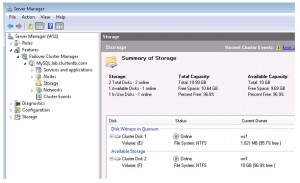

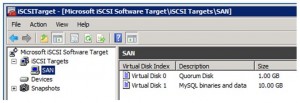

Create 2 clustered disks

This post does not attempt to describe how to configure a highly available, secure and performant SAN but in order to implement the subsequent steps shared storage is required and so in this step we look at one way of using software to provide iSCSI targets without any iSCSI/SAN hardware (just using the server’s internal disk). This is a reasonable option to experiment with but probably not what you’d want to deploy with for a HA application. If you already have shared storage set up then you can skip this step and use that instead.

As part of this process you’ll create at least two virtual disks within the iSCSI target; one for the quorum file and one for the MySQL binaries and data files. The quorum file is used by Windows Failover Clustering to avoid “split-brain” behaviour.

Step 2. Ensure Windows Failover Clustering is enabled

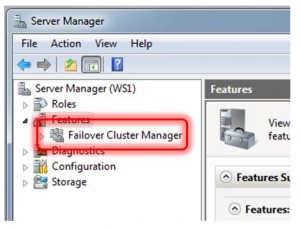

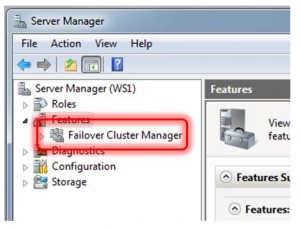

Ensure that WSFC is enabled

To confirm that Windows Failover Clustering is installed on ws1 and ws3, open the “Features” branch in the Server Manager tool and check if “Failover Cluster Manager” is present.

If Failover Clustering is not installed then it is very simple to add it. Select “Features” within the Service Manager and then click on “Add Features” and then select “Failover Clustering” and then “Next”.

Step 3. Install MySQL as a service on both servers

Install MySQL as a Windows Service

If MySQL is already installed as a service on both ws1 and ws3 then this step can be skipped.

The installation is very straight-forward using the MySQL Windows Installer and selecting the default options is fine.

Within the MySQL installation wizard, sticking with the defaults is fine for this exercise. When you reach the configuration step, check “Create Windows Service”.

The installation and configuration must be performed on both ws1 and ws2, if necessary.

Step 4. Migrate MySQL binaries & data to shared storage

If the MySQL Service is running on either ws1 or ws3 then stop it – open the Task Manager using ctrl-shift-escape, select the “Services” tab and then right-click on the MySQL service and choose “Stop Service”.

As the iSCSI disks were enabled on ws1 you can safely access them in order to copy across the MySQL binaries and data files to the shared disk.

Step 5. Create Windows Failover Cluster

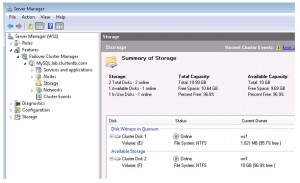

Create the Cluster (without MySQL)

From the Server Manager on either ws1 or ws3 navigate to “Features -> Failover Cluster Manager” and then select “Validate a Configuration”. When prompted enter ws1 as one name and then ws3 as the other.

In the “Testing Options” select “Run all tests” and continue. If the tests report any errors then these should be fixed before continuing.

Now that the system has been verified, select “Create a Cluster” and provide the same server names as used in the validation step. In this example, “MySQL” is provided as the “Cluster Name” and then the wizard goes on to create the cluster.

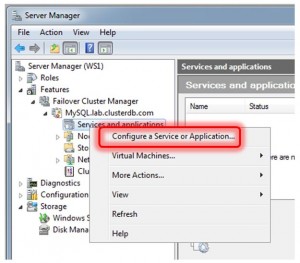

Step 6. Create Cluster of MySQL Servers within Windows Cluster

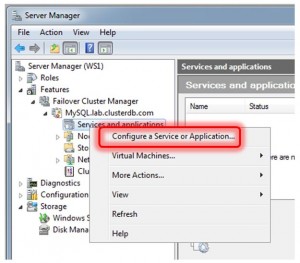

Cluster the MySQL Service

Adding the MySQL service to the new Cluster is very straight-forward. Right-click on “Services and applications” in the Server Manager tree and select “Configure a Service or Application…”. When requested by the subsequent wizard, select “Generic Service” from the list and then “MySQL” from the offered list of services. Our example name was “ClusteredMySQL”. Please choose an appropriate name for your cluster. The wizard will then offer the shared disk that has not already been established as the quorum disk for use with the Clustered service – make sure that it is selected.

Once the wizard finishes, it starts up the MySQL Service. Click on the “ClusteredMySQL” service branch to observe that the service is up and running. You should also make a note of the Virtual IP (VIP) assigned, in this case 192.168.2.18.

Step 7. Test the cluster

As described in Step 6, the VIP should be used to connect to the clustered MySQL service:

C: mysql –u root –h 192.168.2.18 –P3306 –pbob

From there create a database and populate some data.

mysql> CREATE DATABASE clusterdb;

mysql> USE clusterdb;

mysql> CREATE TABLE simples (id int not null primary key) ENGINE=innodb;

mysql> REPLACE INTO simples VALUES (1);

mysql> SELECT * FROM simples;

+----+

| id |

+----+

| 1 |

+----+

Migrate MySQL Service Across Cluster

The MySQL service was initially created on ws1 but it can be forced to migrate to ws3 by right-clicking on the service and selecting “Move this service or application to another node”.

As the MySQL data is held in the shared storage (which has also been migrated to ws3), it is still available and can still be accessed through the existing mysql client which is connected to the VIP:

mysql> select * from simples;

ERROR 2006 (HY000): MySQL server has gone away

No connection. Trying to reconnect...

Connection id: 1

Current database: clusterdb

+----+

| id |

+----+

| 1 |

+----+

Note the error shown above – the mysql client loses the connection to the MySQL service as part of the migration and so it automatically reconnects and complete the query. Any application using MySQL with Windows Failover Cluster should also expect to have to cope with these “glitches” in the connection.

Conclusion

More users develop and deploy and MySQL on Windows than any other single platform. Enhancements in MySQL 5.5 increased performance by over 5x compared to previous MySQL releases. With certification for Windows Server Failover Clustering, MySQL can now be deployed to support business critical workloads demanding high availability, enabling organizations to better meet demanding service levels while also reducing TCO and eliminating single vendor lock-in.

Please let me know how you get on by leaving comments on this post.

MySQL Cluster Manager 1.3.4 is now available to download from My Oracle Support and from the Oracle Software Delivery Cloud.

MySQL Cluster Manager 1.3.4 is now available to download from My Oracle Support and from the Oracle Software Delivery Cloud.