I figured that it was time to check out how to install, configure, run and use MySQL Cluster on Windows. To keep things simple, this first Cluster runs entirely on a single host but still includes these nodes:

- 1 Management node (ndb_mgmd)

- 2 Data nodes (ndbd)

- 3 MySQL Server (API) nodes (mysqld)

Downloading and installing

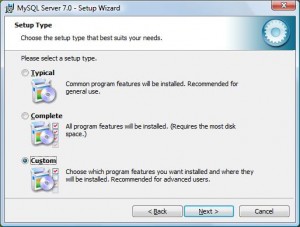

Browse to the Windows section of the MySQL Cluster 7.0 download page and download the installer (32 or 64 bit).

MySQL Cluster Windows Installer

Run the .msi file and choose the “Custom” option. Don’t worry about the fact that it’s branded as “MySQL Server 7.0” and that you’ll go on to see adverts for MySQL Enterprise – that’s just an artefact of how the installer was put together.

On the next screen, I decided to change the “Install to” directory to “C:\mysql”. It's not essential, but it saves some typing later.

Go ahead and install the software. You'll then be asked whether you want to configure the server: uncheck that option, as we want to tailor the configuration so that it works with our Cluster.

There are a couple of changes you need to make to your Windows configuration before going any further:

- Add the new bin folder to your path (in my case “C:\mysql\bin”)

- Make hidden files visible (C:\ProgramData, which holds the data folder you'll copy in order to set up multiple MySQL Server processes on the same machine, is hidden by default)
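Once the bin folder is on your path, a quick way to confirm that the Cluster executables can actually be found is a short script. This is a minimal sketch, assuming Python 3 is installed on the Windows host (nothing else in this walk-through needs it); `missing_tools` is a hypothetical helper name, and it simply asks `shutil.which` about each executable used later on.

```python
import shutil

# Executables launched later in this walk-through; all live in the MySQL bin folder.
CLUSTER_TOOLS = ("ndb_mgmd", "ndb_mgm", "ndbd", "mysqld", "mysql")


def missing_tools(tools=CLUSTER_TOOLS):
    """Return the tools that cannot be found on the current PATH.

    On Windows, shutil.which applies PATHEXT, so "ndbd" matches "ndbd.exe".
    """
    return [tool for tool in tools if shutil.which(tool) is None]
```

Run it from a newly opened command prompt (windows that were already open keep the old PATH); an empty list means everything is reachable.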

Configure and run the Cluster

Copy the contents of “C:\ProgramData\MySQL\MySQL Server 7.0\data” to “C:\ProgramData\MySQL\MySQL Server 7.0\data4”, “C:\ProgramData\MySQL\MySQL Server 7.0\data5” and “C:\ProgramData\MySQL\MySQL Server 7.0\data6”. Note that this assumes that you’ve already made hidden files visible. Each of these folders will be used by one of the mysqld processes.
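If you'd rather script the copy than drag folders around in Explorer, something like the following would do it. It's a sketch, assuming Python 3 is available on the host and that the installer used the default data folder shown above; `copy_datadirs` is a hypothetical helper, not part of MySQL Cluster.

```python
import shutil
from pathlib import Path

# Location used in this walk-through; adjust if your installer put the data folder elsewhere.
DEFAULT_BASE = Path(r"C:\ProgramData\MySQL\MySQL Server 7.0")


def copy_datadirs(base=DEFAULT_BASE, node_ids=(4, 5, 6)):
    """Copy <base>/data to <base>/data<N> for each mysqld node id N.

    Existing target folders are left alone so the script can safely be re-run.
    """
    base = Path(base)
    source = base / "data"
    targets = []
    for node_id in node_ids:
        target = base / f"data{node_id}"
        if not target.exists():
            # copytree copies hidden files too; Explorer's "show hidden files"
            # setting only changes what Explorer displays.
            shutil.copytree(source, target)
        targets.append(target)
    return targets
```

Because existing folders are skipped, re-running the script will not overwrite a data folder that a mysqld process has already started using.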

Create the folder “C:\mysql\cluster” and then create the following files there:

config.ini

```ini
[ndbd default]
noofreplicas=2

[ndbd]
hostname=localhost
id=2

[ndbd]
hostname=localhost
id=3

[ndb_mgmd]
id=1
hostname=localhost

[mysqld]
id=4
hostname=localhost

[mysqld]
id=5
hostname=localhost

[mysqld]
id=6
hostname=localhost
```

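my.4.cnf

```ini
[mysqld]
ndb-nodeid=4
ndbcluster
datadir="C:/ProgramData/MySQL/MySQL Server 7.0/data4"
port=3306
server-id=3306
```

my.5.cnf

```ini
[mysqld]
ndb-nodeid=5
ndbcluster
datadir="C:/ProgramData/MySQL/MySQL Server 7.0/data5"
port=3307
server-id=3307
```

my.6.cnf

```ini
[mysqld]
ndb-nodeid=6
ndbcluster
datadir="C:/ProgramData/MySQL/MySQL Server 7.0/data6"
port=3308
server-id=3308
```

Note that the datadir paths use forward slashes: MySQL option files treat the backslash as an escape character, so Windows paths should be written with forward slashes (or doubled backslashes).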
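Because the three my.X.cnf files differ only in the node id, port and data folder, they lend themselves to being generated. The sketch below reproduces the files above, assuming Python 3 on the host; the helper names are hypothetical, and the port/server-id numbering simply follows the convention used here (node 4 on port 3306, node 5 on 3307 and so on, with server-id set to the port).

```python
from pathlib import Path

# Node ids and host used in this walk-through.
MGM_ID = 1
NDBD_IDS = (2, 3)
MYSQLD_IDS = (4, 5, 6)
HOST = "localhost"
# Forward slashes avoid backslash escape processing in MySQL option files.
DATA_ROOT = "C:/ProgramData/MySQL/MySQL Server 7.0"


def render_config_ini(replicas=2):
    """Build the management node's config.ini: one section per Cluster node."""
    lines = ["[ndbd default]", f"noofreplicas={replicas}", ""]
    for node_id in NDBD_IDS:
        lines += ["[ndbd]", f"hostname={HOST}", f"id={node_id}", ""]
    lines += ["[ndb_mgmd]", f"id={MGM_ID}", f"hostname={HOST}", ""]
    for node_id in MYSQLD_IDS:
        lines += ["[mysqld]", f"id={node_id}", f"hostname={HOST}", ""]
    return "\n".join(lines)


def render_my_cnf(node_id):
    """Build my.<node_id>.cnf; ports start at 3306 and server-id reuses the port."""
    port = 3306 + (node_id - MYSQLD_IDS[0])
    return "\n".join([
        "[mysqld]",
        f"ndb-nodeid={node_id}",
        "ndbcluster",
        f'datadir="{DATA_ROOT}/data{node_id}"',
        f"port={port}",
        f"server-id={port}",
        "",
    ])


def write_cluster_files(directory):
    """Write config.ini and one my.N.cnf per MySQL Server into directory."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    (directory / "config.ini").write_text(render_config_ini())
    for node_id in MYSQLD_IDS:
        (directory / f"my.{node_id}.cnf").write_text(render_my_cnf(node_id))
```

Calling `write_cluster_files(r"C:\mysql\cluster")` would produce the four files; adding a fourth MySQL Server would then be a matter of extending `MYSQLD_IDS` (and adding a matching [mysqld] slot, which the generator does automatically).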

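Those files configure the nodes that make up the Cluster: config.ini is read by the management node and defines every node, while each my.X.cnf file configures one of the MySQL Servers (mysqld).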

From a command prompt window, launch the management node:

```
C:\Users\Andrew>cd \mysql\cluster

C:\mysql\cluster>ndb_mgmd -f config.ini
2009-06-16 20:01:20 [MgmSrvr] INFO -- NDB Cluster Management Server. mysql-5.1.34 ndb-7.0.6
2009-06-16 20:01:20 [MgmSrvr] INFO -- The default config directory 'c:\mysql\mysql-cluster' does not exist. Trying to create it...
2009-06-16 20:01:20 [MgmSrvr] INFO -- Sucessfully created config directory
2009-06-16 20:01:20 [MgmSrvr] INFO -- Reading cluster configuration from 'config.ini'
```

and then from another window, check that the cluster has been defined:

```
C:\Users\Andrew>ndb_mgm
-- NDB Cluster -- Management Client --
ndb_mgm> show
Connected to Management Server at: localhost:1186
Cluster Configuration
---------------------
[ndbd(NDB)] 2 node(s)
id=2 (not connected, accepting connect from localhost)
id=3 (not connected, accepting connect from localhost)

[ndb_mgmd(MGM)] 1 node(s)
id=1 @localhost (mysql-5.1.34 ndb-7.0.6)

[mysqld(API)] 3 node(s)
id=4 (not connected, accepting connect from localhost)
id=5 (not connected, accepting connect from localhost)
id=6 (not connected, accepting connect from localhost)
```

Fire up 2 more command prompt windows and launch the 2 data nodes:

```
C:\Users\Andrew>ndbd
2009-06-16 20:08:57 [ndbd] INFO -- Configuration fetched from 'localhost:1186', generation: 1
2009-06-16 20:08:57 [ndbd] INFO -- Ndb started
NDBMT: non-mt
2009-06-16 20:08:57 [ndbd] INFO -- NDB Cluster -- DB node 2
2009-06-16 20:08:57 [ndbd] INFO -- mysql-5.1.34 ndb-7.0.6 --
2009-06-16 20:08:57 [ndbd] INFO -- Ndbd_mem_manager::init(1) min: 84Mb initial: 104Mb
Adding 104Mb to ZONE_LO (1,3327)
2009-06-16 20:08:57 [ndbd] INFO -- Start initiated (mysql-5.1.34 ndb-7.0.6)
WOPool::init(61, 9)
RWPool::init(22, 13)
RWPool::init(42, 18)
RWPool::init(62, 13)
Using 1 fragments per node
RWPool::init(c2, 18)
RWPool::init(e2, 14)
WOPool::init(41, 8)
RWPool::init(82, 12)
RWPool::init(a2, 52)
WOPool::init(21, 5)
```

(repeat from another new window for the second data node).

After both data nodes (ndbd) have been launched, you should be able to see them through the management client:

```
ndb_mgm> show
Cluster Configuration
---------------------
[ndbd(NDB)] 2 node(s)
id=2 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0, Master)
id=3 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)
id=1 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)

[mysqld(API)] 3 node(s)
id=4 (not connected, accepting connect from localhost)
id=5 (not connected, accepting connect from localhost)
id=6 (not connected, accepting connect from localhost)
```

Finally, the 3 MySQL Server/API nodes should be launched from 3 new windows:

```
C:\Users\Andrew>cd \mysql\cluster
C:\mysql\cluster>mysqld --defaults-file=my.4.cnf

C:\Users\Andrew>cd \mysql\cluster
C:\mysql\cluster>mysqld --defaults-file=my.5.cnf

C:\Users\Andrew>cd \mysql\cluster
C:\mysql\cluster>mysqld --defaults-file=my.6.cnf
```
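This walk-through starts the nodes in a fixed order: the management node, then the data nodes, then the MySQL Servers. Rather than typing each command into its own window, the same sequence could be scripted. A minimal sketch, assuming Python 3 on the host and the C:\mysql\cluster folder used above; `cluster_commands` and `start_cluster` are hypothetical helpers, and the fixed pause between stages is a crude simplification rather than a real readiness check.

```python
import subprocess
import time
from pathlib import Path

CLUSTER_DIR = Path(r"C:\mysql\cluster")  # folder holding config.ini and my.N.cnf

# CREATE_NEW_CONSOLE only exists on Windows; elsewhere fall back to no flags.
NEW_CONSOLE = getattr(subprocess, "CREATE_NEW_CONSOLE", 0)


def cluster_commands(mysqld_ids=(4, 5, 6), ndbd_count=2):
    """Return the launch stages in order: management node, data nodes, MySQL Servers."""
    return [
        [["ndb_mgmd", "-f", "config.ini"]],
        [["ndbd"] for _ in range(ndbd_count)],
        [["mysqld", f"--defaults-file=my.{n}.cnf"] for n in mysqld_ids],
    ]


def start_cluster(pause_seconds=10):
    """Start every process in its own console window, pausing after each stage.

    The pause is an assumption, not a guarantee that a stage is ready; confirm
    with the management client's show command before using the Cluster.
    """
    processes = []
    for stage in cluster_commands():
        for command in stage:
            processes.append(
                subprocess.Popen(command, cwd=CLUSTER_DIR, creationflags=NEW_CONSOLE)
            )
        time.sleep(pause_seconds)
    return processes
```

Each process still gets its own console (so its log output stays visible), but only one command has to be typed.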

Now, just check from the management client that all of the Cluster nodes are up and running:

```
ndb_mgm> show
Cluster Configuration
---------------------
[ndbd(NDB)] 2 node(s)
id=2 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0, Master)
id=3 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)
id=1 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)

[mysqld(API)] 3 node(s)
id=4 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)
id=5 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)
id=6 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)
```
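Reading the show output by eye is fine for six nodes, but it's also easy to check programmatically. The sketch below assumes Python 3 on the host; it uses the ndb_mgm client's -e (--execute) option to run show non-interactively, and relies on the output layout in the listings above (a section header such as [ndbd(NDB)], then one id=N line per node, with unconnected nodes reported as "not connected"). `parse_show` and `fetch_show` are hypothetical helpers, and the layout may differ in other Cluster versions.

```python
import re
import subprocess

# "[ndbd(NDB)] 2 node(s)" starts a section; "id=4 ..." lines list its nodes.
SECTION_RE = re.compile(r"^\[(\w+)\(\w+\)\]")
NODE_RE = re.compile(r"^id=(\d+)\s+(.*)$")


def parse_show(text):
    """Map node id -> (node type, connected?) from ndb_mgm "show" output."""
    nodes = {}
    node_type = None
    for raw in text.splitlines():
        line = raw.strip()
        section = SECTION_RE.match(line)
        if section:
            node_type = section.group(1)
            continue
        node = NODE_RE.match(line)
        if node and node_type:
            connected = not node.group(2).startswith("(not connected")
            nodes[int(node.group(1))] = (node_type, connected)
    return nodes


def fetch_show():
    """Run the management client non-interactively and return its output."""
    result = subprocess.run(
        ["ndb_mgm", "-e", "show"], capture_output=True, text=True, check=True
    )
    return result.stdout
```

For example, `all(up for _, up in parse_show(fetch_show()).values())` should be True once all six nodes have started.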

Using the Cluster

There are now 3 API nodes/MySQL Servers/mysqlds running, all accessing the same data. Each of those nodes can be accessed by the mysql client using the port configured in its my.X.cnf file. For example, we can access the first of those nodes (node 4) from (yet another) window in the following way:

```
C:\Users\Andrew>mysql -h localhost -P 3306
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 2
Server version: 5.1.34-ndb-7.0.6-cluster-gpl MySQL Cluster Server (GPL)

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use test;
Database changed
mysql> create table assets (name varchar(30) not null primary key, value int) engine=ndb;
Query OK, 0 rows affected (1.44 sec)

mysql> insert into assets values ('car', 950);
Query OK, 1 row affected (0.00 sec)

mysql> select * from assets;
+------+-------+
| name | value |
+------+-------+
| car  |   950 |
+------+-------+
1 row in set (0.00 sec)

mysql> insert into assets2 values ('car', 950);
Query OK, 1 row affected (0.00 sec)
```

To check that everything is working correctly, we can access the same database through another of the API nodes:

```
C:\Users\Andrew>mysql -h localhost -P 3307
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 2
Server version: 5.1.34-ndb-7.0.6-cluster-gpl MySQL Cluster Server (GPL)

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use test;
Database changed
mysql> show tables;
+----------------+
| Tables_in_test |
+----------------+
| assets         |
+----------------+
1 row in set (0.06 sec)

mysql> select * from assets;
+------+-------+
| name | value |
+------+-------+
| car  |   950 |
+------+-------+
1 row in set (0.09 sec)
```

It’s important to note that any table created using the ndb storage engine (along with its contents) can be accessed through any of the API nodes, whereas tables created using other storage engines are local to the API node (MySQL Server) on which they were created.
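That difference can be demonstrated by running the same query through each API node and comparing the answers: a query on an ndb table should return the same rows everywhere, while SHOW TABLES lists a table created with another engine only on the server where it was created. A minimal sketch, assuming Python 3 and the mysql client on the path, and connecting as in the sessions above (no user name or password given); `mysql_command`, `run_mysql` and `results_match` are hypothetical helpers, while -B, -N, -e and --protocol are standard mysql client options.

```python
import subprocess

PORTS = (3306, 3307, 3308)  # one per mysqld, as set in the my.X.cnf files


def mysql_command(port, sql):
    """Build a mysql client call that runs one statement against the server on port."""
    # --protocol=TCP makes sure -P is honoured even though the host is "localhost"
    # (on Unix-like systems "localhost" would otherwise mean a socket connection).
    # -B gives tab-separated output and -N drops the column-name header.
    return ["mysql", "-h", "localhost", "-P", str(port), "--protocol=TCP",
            "-B", "-N", "-e", sql]


def run_mysql(command):
    """Run the client and return whatever it printed."""
    return subprocess.run(command, capture_output=True, text=True, check=True).stdout


def results_match(sql, ports=PORTS, runner=run_mysql):
    """Run sql through every API node; return (all identical?, output per port)."""
    outputs = {port: runner(mysql_command(port, sql)) for port in ports}
    return len(set(outputs.values())) == 1, outputs
```

With all three MySQL Servers running, `results_match("select * from test.assets")[0]` should be True; the `runner` parameter exists only so the comparison can be exercised without a live Cluster.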

Your next steps

This is a very simple, contrived setup: in any sensible deployment, the nodes would be spread across multiple physical hosts in the interests of performance and redundancy. You’d also set several more variables in the configuration files in order to size and tune your Cluster. Finally, you’d likely want to run some of these processes as daemons or services rather than firing up so many windows.

It’s important to note that Windows is not a fully supported platform for MySQL Cluster. If you have an interest in deploying a production system on Windows then please contact me at andrew@clusterdb.com