If you weren’t able to attend Oracle OpenWorld or didn’t get a chance to visit the MySQL demo booths then you can watch a recording of the demo here. The video gives a brief description of MySQL Cluster and then dives into a series of demos showing MySQL Cluster’s ability to cope with a number of events without losing service – including node failure, on-line upgrades and on-line horizontal scaling.

Archive for October 27, 2010

Glimpse of the future – massively improved JOIN performance for MySQL Cluster

A great chance to find out about the “SPJ” project that’s under way to improve the performance of complex table JOINs. A free webinar has been scheduled for Thursday, November 04 at 09:00 Pacific time (16:00 UK; 17:00 Central European Time) – just register for the webinar at mysql.com. Even if you can’t attend, by registering you’ll get an email telling you where to watch the replay shortly after the webinar.

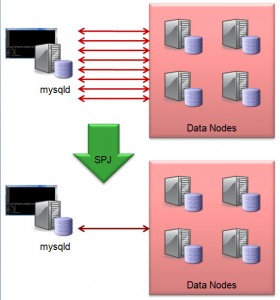

MySQL Cluster performance has always been extremely high and scalable when the workload is primarily primary key reads and writes, but complex JOINs (many tables in the JOIN and/or a large number of results from the first part of the query) have traditionally been much slower than when using other storage engines. Work is underway to address this and SPJ is the name we’ve been using.

Traditionally, JOINs have been performed as Nested Loop JOINs in the MySQL Server which is fine when all of the data is held there (e.g. MyISAM) but when the data is held externally (in Cluster’s case, in the data nodes) it can result in a massive amount of messaging. SPJ works by pushing the processing of JOINs down into the data nodes where they can be performed much more efficiently as the data is local.
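The messaging cost described above can be illustrated with a toy model. This is only a sketch and not how SPJ is implemented: the `DataNode` class, the two sample tables and the function names are all hypothetical, and a "request" simply stands for one round trip between the MySQL Server and a data node.

```python
# Toy model: count the requests sent from a MySQL Server to a data node.
# DataNode, nested_loop_join and pushed_join are hypothetical names.

class DataNode:
    """Holds two tables in memory and counts the requests it receives."""

    def __init__(self, orders, customers):
        self.orders = orders          # list of (order_id, customer_id)
        self.customers = customers    # dict: customer_id -> name
        self.requests = 0

    def scan_orders(self):
        self.requests += 1
        return list(self.orders)

    def lookup_customer(self, customer_id):
        self.requests += 1
        return self.customers.get(customer_id)

    def join_locally(self):
        # The whole join runs next to the data: one request, one reply.
        self.requests += 1
        return [(oid, self.customers[cid])
                for oid, cid in self.orders if cid in self.customers]


def nested_loop_join(node):
    """Join performed in the server: one lookup request per outer row."""
    result = []
    for order_id, customer_id in node.scan_orders():
        name = node.lookup_customer(customer_id)
        if name is not None:
            result.append((order_id, name))
    return result


def pushed_join(node):
    """Join pushed down to the data node."""
    return node.join_locally()


def make_node():
    orders = [(i, i % 10) for i in range(1000)]
    customers = {i: f"customer-{i}" for i in range(10)}
    return DataNode(orders, customers)


if __name__ == "__main__":
    a, b = make_node(), make_node()
    assert nested_loop_join(a) == pushed_join(b)
    print("nested loop requests:", a.requests)
    print("pushed join requests:", b.requests)
```

Both approaches return the same rows; the difference is that the nested loop issues one scan plus one lookup per outer row, while the pushed join needs a single request.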

As well as finding out about the implementation, you’ll also learn:

- What queries benefit -> how you might tweak your application to get the biggest benefits

- The kind of performance improvements you might expect to see

- How to try the (pre-GA!) software for yourself.

Note that (as always) Oracle reserves the right to alter the timing and/or existence of new product releases.

New white paper – MySQL Replication – Enhancing Scalability and Availability with MySQL 5.5

A new white paper has been published that covers MySQL Replication – background information, how it works, how to use it and what’s new in MySQL 5.5. Simply register for the white paper at mysql.com and you’ll be sent your free copy.

The paper starts by covering the fundamental concepts behind replication such as the difference between synchronous and asynchronous replication and the idea behind semisynchronous replication.
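As a rough illustration of the difference between the three modes, the sketch below models when a client sees its commit acknowledged. It is a simplification under stated assumptions: the function name, its parameters and the latency figures are hypothetical, and the timeout after which semisynchronous replication falls back to asynchronous behavior is ignored.

```python
# Toy timing model; the function and parameter names are hypothetical.

def commit_ack_time(mode, local_commit_ms, slaves):
    """Return when the client sees its commit acknowledged, in milliseconds.

    slaves is a list of (receive_ms, apply_ms) pairs: the time for a slave to
    receive and log the events, and the further time it needs to apply them.
    """
    if mode == "asynchronous":
        # The master does not wait for any slave.
        return local_commit_ms
    if mode == "semisynchronous":
        # The master waits for the first slave to confirm receipt.
        return local_commit_ms + min(receive for receive, _ in slaves)
    if mode == "synchronous":
        # The master waits until every slave has applied the change.
        return local_commit_ms + max(receive + apply for receive, apply in slaves)
    raise ValueError(f"unknown mode: {mode}")


slaves = [(2, 5), (40, 5)]   # one nearby slave, one at a remote site
for mode in ("asynchronous", "semisynchronous", "synchronous"):
    print(mode, commit_ack_time(mode, 1, slaves))
```

With these made-up latencies the asynchronous commit returns after 1 ms, the semisynchronous one after 3 ms (the nearby slave has the events) and the synchronous one after 46 ms (the remote slave has applied them).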

It goes on to describe the common use-cases for replication – scaling out, high availability, geographic redundancy and offloading backups or analytics.

Various replication topologies are discussed from simple master-slave to multi-master rings.

As the title of the paper suggests, the paper covers the significant replication changes introduced in MySQL 5.5:

- Semisynchronous replication: Improved resilience by having the master wait for a slave to persist events.

- Slave fsync tuning & Automatic relay log recovery: Option to dictate when relay logs are written to disk rather than relying on default operating system behavior; set sync_relay_log=1 to ensure that no more than 1 statement or transaction is missing from the relay log after a crash. The slave can now recover from corrupted relay logs by requesting corrupt entries to be resent from the master. Three new options are introduced (sync-master-info, sync-relay-log and sync-relay-log-info)

- Replication Heartbeat: Automatically checks the status of the connection between the master and the slave(s), allowing a more precise failure detection mechanism. Can detect loss of connection within milliseconds (configurable). Avoids unnecessary relay log rotation when the master is idle.

- Per server replication filtering: When a server is removed from a replication ring, a surviving server can be selected to remove its outstanding replication messages once they’ve been applied by all servers.

- Precise Slave Type Conversions: Allows different types to be used on the master and slave, with automatic type promotion and demotion when using row-based replication (already possible with statement-based replication)

- Individual Log Flushing: Selectively flush server logs when using ‘FLUSH LOGS’ for greater control

- Safe logging of mixed transactions: Replicate transactions containing both InnoDB and MyISAM changes

To make better use of replication, it helps to understand the basics of how MySQL Replication has been implemented (for example, the roles of the binary and relay logs), and so this is covered.

Perhaps the most useful sections are those that actually walk through using MySQL Replication – specifically these activities:

- Configuring, running and testing replication

- Migrating from traditional asynchronous replication to semisynchronous replication

- Administering & monitoring replication (including MySQL Enterprise Monitor)

- Failover and recovery

Replication is a little different when using MySQL Cluster. Most significantly, you can have multiple MySQL Servers and other application nodes modifying the same database. In addition, the domains where MySQL Cluster has been used have required extreme High Availability, and so there are Cluster-unique features such as active-active replication with conflict detection and resolution. This paper isn’t focussed on MySQL Cluster but a summary of these differences is included.

MySQL Cluster 7.1.8 binaries released

The binary version for MySQL Cluster 7.1.8 has now been made available at http://www.mysql.com/downloads/cluster/

A description of all of the changes (fixes) that have gone into MySQL Cluster 7.1.8 (compared to 7.1.5) can be found in the official MySQL Cluster documentation.

Free webinar: Delivering Scalability and High Availability with MySQL 5.5 Replication Enhancements

MySQL 5.5 (currently at Release Candidate status) introduces a lot of enhancements to replication, making it even more robust and easy to manage. At 9:00 am PST (5:00 pm UK, 6pm CET) you have the chance to hear the details from the replication development manager (Dr Lars Thalmann) as well as get some of your replication questions answered.

As always, attendance is free but you must click here to register in advance.

Get MySQL Replication up and running in 5 minutes

MySQL Replication is incredibly simple to get up and running and this (short) post steps you through it.

MySQL allows you to build up complex replication hierarchies, such as multi-master, chains of read slaves, backup databases at a remote site or any combination of these. This post focuses on a simple single master to single slave topology – the more complex solutions are built from this basic building block.

This post also makes the assumption that the 2 MySQL Servers have been installed but that there is no existing data in the master that needs to be copied to the slave – it’s not complex to add that extra requirement and it will be covered in a future post.

Server “black” (192.168.0.31) is to be our master and “blue” (192.168.0.34) the slave.

Step 1: Edit the configuration files & start the MySQL Servers

The first step in setting up replication involves editing the “my.cnf” file on the servers that will serve as the master and slave. A default is provided with the MySQL installation but in case there is already a production MySQL database running on these servers, we provide local configuration files “master.cnf” and “slave.cnf” that will be used when starting up the MySQL servers.

At a minimum we’ll want to add two options to the [mysqld] section of the master.cnf file:

- log-bin: in this example we choose black-bin.log. The server cannot act as a replication master unless binary logging is enabled.

- server-id: in this example we choose 1. The server_id variable must be a positive integer between 1 and 2^32 - 1.

master.cnf:

[mysqld]

server-id=1

log-bin=black-bin.log

datadir=/home/billy/mysql/master/data

innodb_flush_log_at_trx_commit=1

sync_binlog=1

Note: For the greatest possible durability and consistency in a replication setup using InnoDB with transactions, you should also specify the innodb_flush_log_at_trx_commit=1, sync_binlog=1 options.
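Before starting the server, it can be handy to confirm that the option file really contains the two master options. The following is a minimal sketch using Python's standard `configparser`; the helper name `missing_master_options` is hypothetical, and real option files can contain directives such as `!include` that this sketch does not handle.

```python
import configparser

# The master.cnf contents used in this post.
MASTER_CNF = """
[mysqld]
server-id=1
log-bin=black-bin.log
datadir=/home/billy/mysql/master/data
innodb_flush_log_at_trx_commit=1
sync_binlog=1
"""


def missing_master_options(cnf_text):
    """Return the master options from this post that are absent from [mysqld]."""
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.read_string(cnf_text)
    section = parser["mysqld"] if parser.has_section("mysqld") else {}
    # MySQL treats dashes and underscores in option names as interchangeable.
    present = {name.replace("_", "-") for name in section}
    required = ["server-id", "log-bin"]
    return [name for name in required if name not in present]


print(missing_master_options(MASTER_CNF))
```

An empty list means both options are present; a file with only `server-id` set would report `log-bin` as missing.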

Next, you’ll need to add the server-id option to the [mysqld] section of the slave’s slave.cnf file. The server-id value, like the master’s, must be a positive integer between 1 and 2^32 - 1. The ID of the slave must also be different from the ID of the master. If you are setting up multiple slaves, each one must have a unique server-id value that differs from that of the master and from each of the other slaves.
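The server-id rules can be checked mechanically once a topology grows beyond two servers. This is a small sketch, assuming the upper bound of 2^32 - 1 that MySQL documents for server_id; the function name and the host-to-ID mapping are hypothetical.

```python
MAX_SERVER_ID = 2**32 - 1   # assumed upper bound for server_id


def check_server_ids(server_ids):
    """Return a list of problems found in a {host: server_id} mapping."""
    problems = []
    seen = {}
    for host, server_id in server_ids.items():
        if not 1 <= server_id <= MAX_SERVER_ID:
            problems.append(f"{host}: server-id {server_id} is out of range")
        elif server_id in seen:
            problems.append(
                f"{host}: server-id {server_id} already used by {seen[server_id]}")
        else:
            seen[server_id] = host
    return problems


print(check_server_ids({"black": 1, "blue": 2}))
print(check_server_ids({"black": 1, "blue": 1}))
```

The first call reports no problems for the IDs used in this post; the second flags the duplicate on "blue".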

slave.cnf:

[mysqld]

server-id=2

relay-log-index=slave-relay-bin.index

relay-log=slave-relay-bin

datadir=/home/billy/mysql/slave/data

Now, start the MySQL servers using the service manager or directly from the command line if not being run as a service:

[billy@black ~]$ mysqld --defaults-file=/home/billy/mysql/master/master.cnf &

[billy@blue ~]$ mysqld --defaults-file=/home/billy/mysql/slave/slave.cnf &

Step 2: Create Replication User

Create an account on the master server that the slave server can use to connect. This account must be given the REPLICATION SLAVE privilege:

[billy@black ~]$ mysql -u root --prompt='master> '

master> CREATE USER repl_user@192.168.0.34;

master> GRANT REPLICATION SLAVE ON *.* TO repl_user@192.168.0.34 IDENTIFIED BY 'billy';

Step 3: Initialize Replication

We are now ready to initialize replication on the slave; issue a CHANGE MASTER command:

slave> CHANGE MASTER TO MASTER_HOST='192.168.0.31',

-> MASTER_USER='repl_user',

-> MASTER_PASSWORD='billy',

-> MASTER_LOG_FILE='',

-> MASTER_LOG_POS=4;

Where:

- MASTER_HOST: the IP or hostname of the master server, in this example black or 192.168.0.31

- MASTER_USER: this is the user we granted the REPLICATION SLAVE privilege to in Step 2, in this example, “repl_user”

- MASTER_PASSWORD: this is the password we assigned to “repl_user” in Step 2

- MASTER_LOG_FILE: is an empty string (wouldn’t be empty if there were existing writes to be picked up from the master)

- MASTER_LOG_POS: is 4 (would likely be different if there were existing writes to be picked up from the master)

Finally, start replication on the slave:

slave> start slave;
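Once the slave has been started, its state can be inspected with SHOW SLAVE STATUS, whose Slave_IO_Running and Slave_SQL_Running columns should both read "Yes". The sketch below checks one such row after it has been fetched into a dictionary; the function name is hypothetical and the sample values are illustrative, not captured output.

```python
def replication_problems(status):
    """Inspect one row of SHOW SLAVE STATUS, given as a dict of column -> value."""
    problems = []
    if status.get("Slave_IO_Running") != "Yes":
        problems.append(
            "I/O thread is not running: " + str(status.get("Last_IO_Error", "")))
    if status.get("Slave_SQL_Running") != "Yes":
        problems.append(
            "SQL thread is not running: " + str(status.get("Last_SQL_Error", "")))
    return problems


# Illustrative rows only; a real row has many more columns.
healthy = {"Slave_IO_Running": "Yes", "Slave_SQL_Running": "Yes"}
broken = {"Slave_IO_Running": "No", "Slave_SQL_Running": "Yes",
          "Last_IO_Error": "error connecting to master"}

print(replication_problems(healthy))
print(replication_problems(broken))
```

An empty list means both replication threads are running; otherwise each entry names the stopped thread together with the last error the slave recorded for it.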

Step 4: Basic Checks

Now we are ready to perform a basic check to ensure that replication is indeed working. In this example we insert a few rows of data into the “simples” table on the master server and then verify that these new rows materialize on the slave server:

master> create database clusterdb;

master> create table clusterdb.simples (id int not null primary key) engine=ndb;

master> insert into clusterdb.simples values (999),(1),(2),(3);

slave> select * from clusterdb.simples;

+-----+

| id |

+-----+

| 1 |

| 2 |

| 3 |

| 999 |

+-----+
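Note that the rows come back in a different order from the one used in the INSERT; a SELECT without ORDER BY makes no promise about row order. A scripted version of this check should therefore compare the two sets of rows without regard to order, as in this minimal sketch (the function name is hypothetical and the rows are typed in by hand rather than fetched from the servers):

```python
def same_rows(master_rows, slave_rows):
    """Compare two result sets while ignoring row order."""
    return sorted(master_rows) == sorted(slave_rows)


inserted = [(999,), (1,), (2,), (3,)]   # order used in the INSERT on the master
selected = [(1,), (2,), (3,), (999,)]   # order shown by the SELECT on the slave

print(same_rows(inserted, selected))
```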
