The native Memcached API for MySQL Cluster is now GA as part of MySQL Cluster 7.2

This post was first published in April 2011 when the first trial version of the Memcached API for MySQL Cluster was released; it was then up-versioned for the second MySQL Cluster 7.2 Development Milestone Release in October 2011. I’ve now refreshed the post based on the GA of MySQL Cluster 7.2 which includes the completed Memcache API.

There are a number of attributes of MySQL Cluster that make it ideal for lots of applications that are considering NoSQL data stores. Scaling out capacity and performance on commodity hardware, in-memory real-time performance (especially for simple access patterns), flexible schemas… sound familiar? In addition, MySQL Cluster adds transactional consistency and durability. In case that’s not enough, you can also simultaneously combine various NoSQL APIs with full-featured SQL – all working on the same data set. This post focuses on a new Memcached API that is now available to download, try out and deploy. This post steps through setting up Cluster with the Memcached API and then demonstrates how to read and write the same data through both Memcached and SQL (including for existing MySQL Cluster tables).

Download the community version from mysql.com or the commercial version from Oracle’s Software Delivery Cloud (note that there is not currently a Windows version).

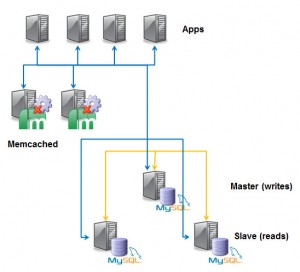

First of all a bit of background about Memcached. It has typically been used as a cache when the performance of the database of record (the persistent database) cannot keep up with application demand. When changing data, the application will push the change to the database, when reading data, the application first checks the Memached cache, if it is not there then the data is read from the database and copied into Memcached. If a Memcached instance fails or is restarted for maintenance reasons, the contents are lost and the application will need to start reading from the database again. Of course the database layer probably needs to be scaled as well so you send writes to the master and reads to the replication slaves.

This has become a classic architecture for web and other applications and the simple Memcached attribute-value API has become extremely popular amongst developers.

As an illustration of the simplicity of this API, the following example stores and then retrieves the string “Maidenhead” against the key “Test”:

telnet localhost 11211 set Test 0 0 10 Maidenhead! END

get Test VALUE Test 0 10 Maidenhead! END

Note that if we kill and restart the memcached server, the data is lost (as it was only held in RAM):

get Test

END

New options for using Memcached API with MySQL Cluster

What we’re doing with MySQL Cluster is to offer a bunch of new ways of using this API but with the benefits of MySQL Cluster. The solution has been designed to be very flexible, allowing the application architect to find a configuration that best fits their needs.

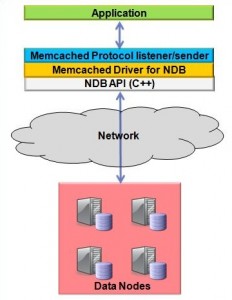

A quick diversion on how this is implemented. The application sends reads and writes to the memcached process (using the standard Memcached API). This in turn invokes the Memcached Driver for NDB (which is part of the same process) which in turn calls the NDB API for very quick access to the data held in MySQL Cluster’s data nodes (it’s the fastest way of accessing MySQL Cluster).

Because the data is now stored in MySQL Cluster, it is persistent and you can transparently scale out by adding more data nodes (this is an on-line operation).

Another important point is that the NDB API is already a commonly used, fully functional access method that the Memcached API can exploit. For example, if you make a change to a piece of data then the change will automatically be written to any MySQL Server that has its binary logging enabled which in turn means that the change can be replicated to a second site.

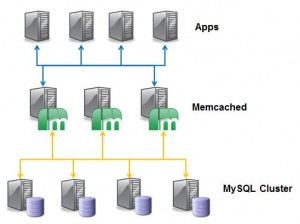

So the first (and probably simplest) architecture is to co-locate the Memcached API with the data nodes.

The applications can connect to any of the memcached API nodes – if one should fail just switch to another as it can access the exact same data instantly. As you add more data nodes you also add more memcached servers and so the data access/storage layer can scale out (until you hit the 48 data node limit).

Another simple option is to co-locate the Memcached API with the application. In this way, as you add more application nodes you also get more Memcached throughput. If you need more data storage capacity you can independently scale MySQL Cluster by adding more data nodes. One nice feature of this approach is that failures are handled very simply – if one App/Memcached machine should fail, all of the other applications just continue accessing their local Memcached API.

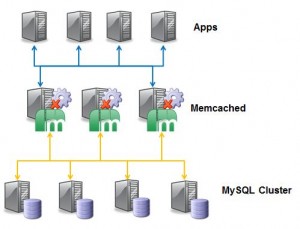

For maximum flexibility, you can have a separate Memcached layer so that the application, the Memcached API & MySQL Cluster can all be scaled independently.

In all of the examples so far, there has been a single source for the data (it’s all held in MySQL Cluster).

If you choose, you can still have all or some of the data cached within the memcached server (and specify whether that data should also be persisted in MySQL Cluster) – you choose how to treat different pieces of your data. If for example, you had some data that is written to and read from frequently then store it just in MySQL Cluster, if you have data that is written to rarely but read very often then you might choose to cache it in Memcached as well and if you have data that has a short lifetime and wouldn’t benefit from being stored in MySQL Cluster then only hold it in Memcached. The beauty is that you get to configure this on a per-key-prefix basis (through tables in MySQL Cluster) and that the application doesn’t have to care – it just uses the Memcached API and relies on the software to store data in the right place(s) and to keep everything in sync.

Of course if you want to access the same data through SQL then you’d make sure that it was configured to be stored in MySQL Cluster.

Enough of the theory, how to try it out…

Installing & configuarying the software

As this post is focused on API access to the data rather than testing High Availability, performance or scalability the Cluster can be kept extremely simple with all of the processes (nodes) running on a single server. The only thing to be careful of when you create your Cluster is to make sure that you define at least 5 API sections (e.g. [mysqld]) in your configuration file so you can access using SQL and 2 Memcached servers (each uses 2 connections) at the same time.

For further information on how to set up a single-host Cluster, refer to this post or just follow the next few steps.

Create a config.ini file for the Cluster configuration:

[ndb_mgmd] hostname=localhost datadir=/home/billy/my_cluster/ndb_data NodeId=1 [ndbd default] noofreplicas=2 datadir=/home/billy/my_cluster/ndb_data [ndbd] hostname=localhost NodeId=3 [ndbd] hostname=localhost NodeId=4 [mysqld] NodeId=50 [mysqld] NodeId=51 [mysqld] NodeId=52 [mysqld] NodeId=53 [mysqld] NodeId=54

and a my.cnf file for the MySQL server:

[mysqld] ndbcluster datadir=/home/billy/my_cluster/mysqld_data

Before starting the Cluster, install the standard databases for the MySQL Server (from wherever you have MySQL Cluster installed – typically /usr/local/mysql):

[billy@ws2 mysql]$ ./scripts/mysql_install_db --basedir=/usr/local/mysql --datadir=/home/billy/my_cluster/mysqld_data --user=billy

Start up the system

We are now ready to start up the Cluster processes:

[billy@ws2 my_cluster]$ ndb_mgmd -f conf/config.ini --initial --configdir=/home/billy/my_cluster/conf/ [billy@ws2 my_cluster]$ ndbd [billy@ws2 my_cluster]$ ndbd [billy@ws2 my_cluster]$ ndb_mgm -e show # Wait for data nodes to start [billy@ws2 my_cluster]$ mysqld --defaults-file=conf/my.cnf &

If your version doesn’t already have the ndbmemcache database installed then that should be your next step:

[billy@ws2 ~]$ mysql -h 127.0.0.1 -P3306 -u root < /usr/local/mysql/share/memcache-api/ndb_memcache_metadata.sql

After that, start the Memcached server (with the NDB driver activated):

[billy@ws2 ~]$ memcached -E /usr/local/mysql/lib/ndb_engine.so -e "connectstring=localhost:1186;role=db-only" -vv -c 20

Notice the “connectstring” – this allows the primary Cluster to be on a different machine to the Memcached API. Note that you can actually use the same Memcached server to access multiple Clusters – you configure this within the ndbmemcached database in the primary Cluster. In a production system you may want to include reconf=false amogst the -e parameters in order to stop configuration changes being applied to running Memcached servers (you’d need to restart those servers instead).

Try it out!

Next the fun bit – we can start testing it out:

[billy@ws2 ~]$ telnet localhost 11211 set maidenhead 0 0 3 SL6 STORED get maidenhead VALUE maidenhead 0 3 SL6 END

We can now check that the data really is stored in the database:

mysql> SELECT * FROM ndbmemcache.demo_table; +------------------+------------+-----------------+--------------+ | mkey | math_value | cas_value | string_value | +------------------+------------+-----------------+--------------+ | maidenhead | NULL | 263827761397761 | SL6 | +------------------+------------+-----------------+--------------+

Of course, you can also modify this data through SQL and immediately see the change through the Memcached API:

mysql> UPDATE ndbmemcache.demo_table SET string_value='sl6 4' WHERE mkey='maidenhead'; [billy@ws2 ~]$ telnet localhost 11211 get maidenhead VALUE maidenhead 0 5 SL6 4 END

By default, the normal limit of 14K per row still applies when using the Memcached API; however, the standard configuration treats any key-value pair with a key-pefix of “b:” differently and will allow the value to be up to 3 Mb (note the default limit imposed by the Memcached server is 1 Mb and so you’d also need to raise that). Internally the contents of this value will be split between 1 row in ndbmemcache.demo_table_large and one or more rows in ndbmemcache.external_values.

Note that this is completely schema-less, the application can keep on adding new key/value pairs and they will all get added to the default table. This may well be fine for prototyping or modest sized databases. As you can see this data can be accessed through SQL but there’s a good chance that you’ll want a richer schema on the SQL side or you’ll need to have the data in multiple tables for other reasons (for example you want to replicate just some of the data to a second Cluster for geographic redundancy or to InnoDB for report generation).

The next step is to create your own databases and tables (assuming that you don’t already have them) and then create the definitions for how the app can get at the data through the Memcached API. First let’s create a table that has a couple of columns that we’ll also want to make accessible through the Memcached API:

mysql> CREATE DATABASE clusterdb; USE clusterdb;

mysql> CREATE TABLE towns_tab (town VARCHAR(30) NOT NULL PRIMARY KEY,

zip VARCHAR(10), population INT, county VARCHAR(10)) ENGINE=NDB;

mysql> REPLACE INTO towns_tab VALUES ('Marlow', 'SL7', 14004, 'Berkshire');

Next we need to tell the NDB driver how to access this data through the Memcached API. Two ‘containers’ are created that identify the columns within our new table that will be exposed. We then define the key-prefixes that users of the Memcached API will use to indicate which piece of data (i.e. database/table/column) they are accessing:

mysql> USE ndbmemcache;

mysql> REPLACE INTO containers VALUES ('towns_cnt', 'clusterdb',

'towns_tab', 'town', 'zip', 0, NULL, NULL, NULL, NULL);

mysql> REPLACE INTO containers VALUES ('pop_cnt', 'clusterdb',

'towns_tab', 'town', 'population', 0, NULL, NULL, NULL, NULL);

mysql> SELECT * FROM containers;

+------------+-------------+------------------+-------------+----------------+-------+------------------+------------+--------------------+-----------------------------+

| name | db_schema | db_table | key_columns | value_columns | flags | increment_column | cas_column | expire_time_column | large_values_table |

+------------+-------------+------------------+-------------+----------------+-------+------------------+------------+--------------------+-----------------------------+

| demo_ext | ndbmemcache | demo_table_large | mkey | string_value | 0 | NULL | cas_value | NULL | ndbmemcache.external_values |

| towns_cnt | clusterdb | towns_tab | town | zip | 0 | NULL | NULL | NULL | NULL |

| demo_table | ndbmemcache | demo_table | mkey | string_value | 0 | math_value | cas_value | NULL | NULL |

| pop_cnt | clusterdb | towns_tab | town | population | 0 | NULL | NULL | NULL | NULL |

| demo_tabs | ndbmemcache | demo_table_tabs | mkey | val1,val2,val3 | flags | NULL | NULL | expire_time | NULL |

+------------+-------------+------------------+-------------+----------------+-------+------------------+------------+--------------------+-----------------------------+

mysql> REPLACE INTO key_prefixes VALUES (1, 'twn_pr:', 0,

'ndb-only', 'towns_cnt');

mysql> REPLACE INTO key_prefixes VALUES (1, 'pop_pr:', 0,

'ndb-only', 'pop_cnt');

mysql> SELECT * FROM key_prefixes;

+----------------+------------+------------+---------------+------------+

| server_role_id | key_prefix | cluster_id | policy | container |

+----------------+------------+------------+---------------+------------+

| 1 | pop_pr: | 0 | ndb-only | pop_cnt |

| 0 | t: | 0 | ndb-test | demo_tabs |

| 3 | | 0 | caching | demo_table |

| 0 | | 0 | ndb-test | demo_table |

| 0 | mc: | 0 | memcache-only | NULL |

| 1 | b: | 0 | ndb-only | demo_ext |

| 2 | | 0 | memcache-only | NULL |

| 1 | | 0 | ndb-only | demo_table |

| 0 | b: | 0 | ndb-test | demo_ext |

| 3 | t: | 0 | caching | demo_tabs |

| 1 | t: | 0 | ndb-only | demo_tabs |

| 4 | | 0 | ndb-test | demo_ext |

| 1 | twn_pr: | 0 | ndb-only | towns_cnt |

| 3 | b: | 0 | caching | demo_ext |

+----------------+------------+------------+---------------+------------+

At present it is necessary to restart the Memcached server in order to pick up the new key_prefix (and so you’d want to run multiple instances in order to maintain service):

[billy@ws2:~]$ memcached -E /usr/local/mysql/lib/ndb_engine.so -e "connectstring=localhost:1186;role=db-only" -vv -c 20

07-Feb-2012 11:22:29 GMT NDB Memcache 5.5.19-ndb-7.2.4 started [NDB 7.2.4; MySQL 5.5.19]

Contacting primary management server (localhost:1186) ...

Connected to "localhost:1186" as node id 51.

Retrieved 5 key prefixes for server role "db-only".

The default behavior is that:

GET uses NDB only

SET uses NDB only

DELETE uses NDB only.

The 4 explicitly defined key prefixes are "b:" (demo_table_large), "pop_pr:" (towns_tab),

"t:" (demo_table_tabs) and "twn_pr:" (towns_tab)

Now these columns (and the data already added through SQL) are accessible through the Memcached API:

[billy@ws2 ~]$ telnet localhost 11211 get twn_pr:Marlow VALUE twn_pr:Marlow 0 3 SL7 END set twn_pr:Maidenhead 0 0 3 SL6 STORED set pop_pr:Maidenhead 0 0 5 42827 STORED

and then we can check these changes through SQL:

mysql> SELECT * FROM clusterdb.towns_tab; +------------+------+------------+-----------+ | town | zip | population | county | +------------+------+------------+-----------+ | Maidenhead | SL6 | 42827 | NULL | | Marlow | SL7 | 14004 | Berkshire | +------------+------+------------+-----------+

One final test is to start a second memcached server that will access the same data. As everything is running on the same host, we need to have the second server listen on a different port:

[billy@ws2 ~]$ memcached -E /usr/local/mysql/lib/ndb_engine.so -e "connectstring=localhost:1186;role=db-only" -vv -c 20 -p 11212 -U 11212 [billy@ws2 ~]$ telnet localhost 11212 get twn_pr:Marlow VALUE twn_pr:Marlow 0 3 SL7 END

As mentioned before, there’s a wide range of ways of accessing the data in MySQL Cluster – both SQL and NoSQL. You’re free to mix and match these technologies – for example, a mission critical business application using SQL, a high-running web app using the Memcached API and a real-time application using the NDB API. And the best part is that they can all share the exact same data and they all provide the same HA infrastructure (for example synchronous replication and automatic failover within the Cluster and geographic replication to other clusters).

Finally, a reminder – please try this out and let us know what you think (or if you don’t have time to try it then let us now what you think anyway) by adding a comment to this post.

any idea if this will work with myisam and innodb? maybe working with handler interface of sql-mysql? no engine specific code?

it´s sounds like memcachedb+repcache(sync replication) (berkleydb database with memcache protocol), very nice! =)

This work is unique to Cluster but there is also an activity (and software to download) for InnoDB too. Take a look at http://dev.mysql.com/tech-resources/articles/nosql-to-mysql-with-memcached.html for an overview.

Andrew.

Hi,

I have a very weird behaviour with the binaries.

In almost all cases the connect (bin/memcached -E /usr/local/mysql/lib/ndb_engine.so -e “connectstring=localhost:1186;role=db-only” -vv -c 20 -p 11211 -u nobody) fails with:

13-Apr-2011 04:52:14 CEST NDB Memcache 5.1.51-ndb-7.2.0 started [NDB 7.2.0; MySQL 5.1.51]

Contacting primary management server (localhost:1186) …

Connected to “localhost:1186” as node id 22.

Retrieved 2 key prefixes for server role “db-only”.

The default behavior is that:

GET uses NDB only

SET uses NDB only

DELETE uses NDB only.

The 1 explicitly defined key prefix is “t:” (demo_table_tabs)

open_connections_to_all_clusters() failed

Failed to initialize instance. Error code: 255

But sometimes I can connect and this only because of an illegal connect string that makes the node id jump to the last in the config (in this case from 53 to 254)?

13-Apr-2011 04:31:01 CEST NDB Memcache 5.1.51-ndb-7.2.0 started [NDB 7.2.0; MySQL 5.1.51]

Contacting primary management server (localhost:1186) …

Connected to “localhost:1186” as node id 53.

Retrieved 4 key prefixes for server role “db-only”.

The default behavior is that:

GET uses NDB only

SET uses NDB only

DELETE uses NDB only.

The 3 explicitly defined key prefixes are “pop_pr:” (towns_tab), “t:” (demo_table_tabs) and “twn_pr:” (towns_tab)

Could not initialize handle to management server: Illegal connect string : !=ô4ÿ

Connected to “ÿÿÿÿ” as node id 254.

Server started with 4 threads.

Priming the pump …

done [5.056 sec].

Pipeline 0 attached to flex scheduler: config “b0,c1,p1”; 1 cluster.

Once connected everything works like a charm.

Any idea what is going on?

Mike – yes, there’s something odd there. For starters you should try to omit the “connectstring=localhost:1186” part from the -e config, since that’s the default.

jdd, thank you for your reply. omitting the connectstring doesn’t really change something.

I replaced the binary with the bzr export and now I can connect every time but still with this behaviour:

Contacting primary management server (localhost:1186) …

Connected to “localhost:1186” as node id 22.

…

Could not initialize handle to management server: Illegal connect string : x.x.x.x:

Connected to “pkµ-ÿ” as node id 254.

and the process also blocks the 2 api spots at ids 22 and 254:

id=22 @x.x.x.x (mysql-5.1.51 ndb-7.2.0)

id=254 @x.x.x.x (mysql-5.1.51 ndb-7.2.0)

though only one process running.

The garbled connect string is the reason for using 2 API slots: the first slot is for the “config-reader” connection, and the second is for the actual database operations. The ndb_engine is supposed to reuse the first connection when it sees that the two connections actually go to the same cluster, but clearly that code is buggy. I have a pretty good idea of where to find that bug, so sometime in the next week I will try to push a fix up to launchpad.

great, looking forward to it.

is there a mailing list that might be better suited to discuss issues like these?

Yes, cluster@lists.mysql.com is a good list to use for now.

We were recently asked the following question around data access and network hops – thought it would be useful to provide the response here:

Question

=========

About the memcached API, is there also some in memory cache/storage or every lookup results in disk access? Question is valid also for the network rountrips involved

Answer:

=======

The Memcached Api is a Memcached server, with both an Ndb ‘backend’ and the default Slab allocated hash ‘backend’. The Ndb backend uses NdbApi to read and write data (using network hops) from Cluster data nodes. The Memcached server can optionally cache results from Ndb backend queries in the normal Memcached cache. For tables with ‘disk based’ columns, Ndb data nodes have page caches and Write ahead logs so that not all reads updates require disk access. For tables with only ‘memory based’ columns, Ndb never requires disk access for reads, and only sequential writes to a write ahead log for updates. The Memcached server can be co-located with a data node to minimise network hops.

Hey there,

Great article, and amazingly interesting technology! Thanks!

Do I have it right that there is still a need for NDB Management nodes, and that there is still a requirement of AT LEAST 2 management and 2 data nodes are necessary for HA at the NDB level?

Cheers,

Tim Vaillancourt

Hi Tim,

the advice is to go with 2 management nodes (not running on the same hosts as the data nodes) for maximum availability in case 1 fails. If you have no running management node then the cluster continues to function but if you suffer network partitioning (half of your data nodes lose contact with the other half) then there is no arbitrator and so the Cluster would shut down.

Regards, Andrew.

Thank you so much for this article. I found it really informative and I managed to get a small cluster setup to test some use cases for my environment.

Has there been any discussion on if/when the mysql cluster 7.2 memcache branch will be integrated into a stable branch? Just curious if/when this is going to get completely adopted – I see there have been no new commits since May 2011.

Also, from some additional reading/testing rows > 8KB are not supported. I also noticed that BLOB column types are not supported. I believe memcached has an object limit of 1MB. It’d be interesting to see the limitation be bound by memcached rather than mysql. Any idea if there’s any research going on to achieve that?

Thanks again for the article!

Hi Matt,

unfortunately I don’t have any timing information that I can share.

Some of the restrictions imposed are based on the underlying Cluster limitations (for example, in Cluster 7.1 the row size is limited to 8 Kb – this rises to 14 Kb in Cluster 7.2 and so it should be possible to match that with the Memcached interface). BLOBs are supported by Cluster – do you have use cases where you’d want us to support it through the Memcached API?

Andrew.

I have a use case where each row is uniquely identified by multiple keys. In this case, how do configure containers table? How should I be testing get and set using telnet?

Joy

Hi Joy,

If you have extra unique indexes on a row, you should simply create a containers record where “key_column” contains the name of the indexed column. But this may not work properly in the current preview release (which is now six months old).

Or if you have a multi-part key, the idea is that you configure it as a list separated by commas in “key_columns” in the containers table. Then you send them to memcache (and receive them) as tab-separated values.

Comma separated list in key_columns doesn’t seem to be working. Could you please look at use case again?

Table schema goes as below:

+————–+————-+——+—–+———+——-+

| Field | Type | Null | Key | Default | Extra |

+————–+————-+——+—–+———+——-+

| Fromkey | varchar(15) | NO | MUL | NULL | |

| Tokey | varchar(39) | NO | PRI | NULL | |

| id | bigint(20) | NO | PRI | NULL | |

+————–+————-+——+—–+———+——-+

ndbmemcache container configuration below:

insert into containers values(‘multikeys’, ‘test’, ‘table1’, ‘Tokey,id’, ‘Fromkey’, 0, NULL, NULL, NULL);

insert into key_prefixes values(1,’mk:’,0,’ndb-only’,’multikeys’);

Tried to query from telnet:

get mk: 48669144470883968312317627353104498016 3839040898619750016

it didn’t return anything. Double checked with table data, there exists an entry.

Is there anything wrong I’m doing?

Thanks

Joy

Hi,

I got everything working but the only thing I need to do is to create the memcached database

like you mention here

memcached/storage/ndb/memcache/scripts/metadata.sql

where can I download this database create file

thanks and keep up the good work

Hi,

I got it from nightly development build mysql-cluster-gpl-7.2.2

thanks

I’m having a problem firing up memcached (1.6) with the ndb_engine.so plug-in… setup/build/commands/errors follow:

MySQL Cluster is running, with the ndbmemcache database.

RPMs used: MySQL-Cluster-[client|devel|server]-gpl-7.2.1-1.rhel5.x86_64.rpm

memcached build info: memcached-1.6.0_beta1

./configure –prefix=/usr/local/memcached-1.6 –with-libevent=/usr/local/lib –enable-64bit

(also tried without –enable-64-bit, same issue)

execution command:

/usr/local/memcached-1.6/bin/memcached -E /usr/lib64/ndb_engine.so -e “connectstring=mgmhost1,mgmhost2;role=db-only” -vv -c 20 -u nobody -p 11211

ISSUE:

The following output occurs, within about 2 seconds of execution, and it’s clearly exited.

26-Oct-2011 19:33:49 UTC NDB Memcache 5.5.15-ndb-7.2.1 started [NDB 7.2.1; MySQL 5.5.15]

Contacting primary management server (mgmhost1,mgmhost2) …

Connected to “mgmhost1,mgmhost2” as node id 8.

*** glibc detected *** /usr/local/memcached-1.6/bin/memcached: free(): invalid pointer: 0x00000030f1553b48 ***

======= Backtrace: =========

/lib64/libc.so.6[0x30f127245f]

/lib64/libc.so.6(cfree+0x4b)[0x30f12728bb]

/usr/lib64/ndb_engine.so(_ZN9TableSpecD1Ev+0x177)[0x7f0e96ae0d57]

/usr/lib64/ndb_engine.so(_ZN13Configuration21get_supported_versionEv+0x7d)[0x7f0e96ada35d]

/usr/lib64/ndb_engine.so(_ZN13Configuration17readConfigurationEv+0xd0)[0x7f0e96ada550]

/usr/lib64/ndb_engine.so(_Z18read_configurationP13Configuration+0x2b)[0x7f0e96ae19ab]

/usr/lib64/ndb_engine.so(get_config+0x13)[0x7f0e96ae1e33]

/usr/lib64/ndb_engine.so[0x7f0e96ae976e]

/usr/local/memcached-1.6/lib/memcached/libmemcached_utilities.so.0(init_engine+0xee)[0x7f0e970c4eee]

/usr/local/memcached-1.6/bin/memcached[0x409498]

/lib64/libc.so.6(__libc_start_main+0xf4)[0x30f121d994]

/usr/local/memcached-1.6/bin/memcached(parse_config+0x461)[0x402b19]

======= Memory map: ========

00400000-00421000 r-xp 00000000 ca:01 1639453 /usr/local/memcached-1.6/bin/memcached

00621000-00622000 rw-p 00021000 ca:01 1639453 /usr/local/memcached-1.6/bin/memcached

00622000-00625000 rw-p 00000000 00:00 0

00acc000-00b7b000 rw-p 00000000 00:00 0 [heap]

401a2000-401a3000 —p 00000000 00:00 0

401a3000-401c3000 rw-p 00000000 00:00 0

40860000-40861000 —p 00000000 00:00 0

40861000-40881000 rw-p 00000000 00:00 0

409a4000-409a5000 —p 00000000 00:00 0

409a5000-409c5000 rw-p 00000000 00:00 0

40bcf000-40bd0000 —p 00000000 00:00 0

40bd0000-413d0000 rw-p 00000000 00:00 0

41772000-41773000 —p 00000000 00:00 0

41773000-41793000 rw-p 00000000 00:00 0

41a9e000-41a9f000 —p 00000000 00:00 0

41a9f000-41abf000 rw-p 00000000 00:00 0

30f0e00000-30f0e1c000 r-xp 00000000 ca:01 1494461 /lib64/ld-2.5.so

30f101c000-30f101d000 r–p 0001c000 ca:01 1494461 /lib64/ld-2.5.so

30f101d000-30f101e000 rw-p 0001d000 ca:01 1494461 /lib64/ld-2.5.so

30f1200000-30f134e000 r-xp 00000000 ca:01 1494447 /lib64/libc-2.5.so

30f134e000-30f154e000 —p 0014e000 ca:01 1494447 /lib64/libc-2.5.so

30f154e000-30f1552000 r–p 0014e000 ca:01 1494447 /lib64/libc-2.5.so

30f1552000-30f1553000 rw-p 00152000 ca:01 1494447 /lib64/libc-2.5.so

30f1553000-30f1558000 rw-p 00000000 00:00 0

30f1600000-30f1602000 r-xp 00000000 ca:01 1494542 /lib64/libdl-2.5.so

30f1602000-30f1802000 —p 00002000 ca:01 1494542 /lib64/libdl-2.5.so

30f1802000-30f1803000 r–p 00002000 ca:01 1494542 /lib64/libdl-2.5.so

30f1803000-30f1804000 rw-p 00003000 ca:01 1494542 /lib64/libdl-2.5.so

30f1a00000-30f1a82000 r-xp 00000000 ca:01 1494529 /lib64/libm-2.5.so

30f1a82000-30f1c81000 —p 00082000 ca:01 1494529 /lib64/libm-2.5.so

30f1c81000-30f1c82000 r–p 00081000 ca:01 1494529 /lib64/libm-2.5.so

30f1c82000-30f1c83000 rw-p 00082000 ca:01 1494529 /lib64/libm-2.5.so

30f2200000-30f2215000 r-xp 00000000 ca:01 1494561 /lib64/libnsl-2.5.so

30f2215000-30f2414000 —p 00015000 ca:01 1494561 /lib64/libnsl-2.5.so

30f2414000-30f2415000 r–p 00014000 ca:01 1494561 /lib64/libnsl-2.5.so

30f2415000-30f2416000 rw-p 00015000 ca:01 1494561 /lib64/libnsl-2.5.so

30f2416000-30f2418000 rw-p 00000000 00:00 0

30f2600000-30f2616000 r-xp 00000000 ca:01 1494459 /lib64/libpthread-2.5.so

30f2616000-30f2815000 —p 00016000 ca:01 1494459 /lib64/libpthread-2.5.so

30f2815000-30f2816000 r–p 00015000 ca:01 1494459 /lib64/libpthread-2.5.so

30f2816000-30f2817000 rw-p 00016000 ca:01 1494459 /lib64/libpthread-2.5.so

30f2817000-30f281b000 rw-p 00000000 00:00 0

30f2e00000-30f2e07000 r-xp 00000000 ca:01 1494562 /lib64/librt-2.5.so

30f2e07000-30f3007000 —p 00007000 ca:01 1494562 /lib64/librt-2.5.so

30f3007000-30f3008000 r–p 00007000 ca:01 1494562 /lib64/librt-2.5.so

30f3008000-30f3009000 rw-p 00008000 ca:01 1494562 /lib64/librt-2.5.so

30f3200000-30f320d000 r-xp 00000000 ca:01 1494486 /lib64/libgcc_s-4.1.2-20080825.so.1

30f320d000-30f340d000 —p 0000d000 ca:01 1494486 /lib64/libgcc_s-4.1.2-20080825.so.1

30f340d000-30f340e000 rw-p 0000d000 ca:01 1494486 /lib64/libgcc_s-4.1.2-20080825.so.1

30f3a00000-30f3a11000 r-xp 00000000 ca:01 1494537 /lib64/libresolv-2.5.so

30f3a11000-30f3c11000 —p 00011000 ca:01 1494537 /lib64/libresolv-2.5.so

30f3c11000-30f3c12000 r–p 00011000 ca:01 1494537 /lib64/libresolv-2.5.so

30f3c12000-30f3c13000 rw-p 00012000 ca:01 1494537 /lib64/libresolv-2.5.so

30f3c13000-30f3c15000 rw-p 00000000 00:00 0

7f0e953cd000-7f0e96823000 rw-p 00000000 00:00 0

7f0e96823000-7f0e9682d000 r-xp 00000000 ca:01 1494540 /lib64/libnss_files-2.5.so

7f0e9682d000-7f0e96a2c000 —p 0000a000 ca:01 1494540 /lib64/libnss_files-2.5.so

7f0e96a2c000-7f0e96a2d000 r–p 00009000 ca:01 1494540 /lib64/libnss_files-2.5.so

7f0e96a2d000-7f0e96a2e000 rw-p 0000a000 ca:01 1494540 /lib64/libnss_files-2.5.so

7f0e96a2e000-7f0e96e18000 r-xp 00000000 ca:01 1426968 /usr/lib64/ndb_engine.so

7f0e96e18000-7f0e97018000 —p 003ea000 ca:01 1426968 /usr/lib64/ndb_engine.so

7f0e97018000-7f0e970ae000 rw-p 003ea000 ca:01 1426968 /usr/lib64/ndb_engine.so

7f0e970ae000-7f0e970ba000 rw-p 00000000 00:00 0

7f0e970c3000-7f0e970c8000 r-xp 00000000 ca:01 1639457 /usr/local/memcached-1.6/lib/memcached/libmemcached_utilities.so.0.0.0

7f0e970c8000-7f0e972c7000 —p 00005000 ca:01 1639457 /usr/local/memcached-1.6/lib/memcached/libmemcached_utilities.so.0.0.0

7f0e972c7000-7f0e972c8000 rw-p 00004000 ca:01 1639457 /usr/local/memcached-1.6/lib/memcached/libmemcached_utilities.so.0.0.0Aborted

Host info:

CentOS 5.7

Linux hostname 2.6.32.12-rscloud #26 SMP Mon May 17 12:35:34 UTC 2010 x86_64 x86_64 x86_64 GNU/Linux

Thanks for any help!

Please ignore my previous comment — OR, link to this forum post: http://forums.mysql.com/read.php?25,491203,491217#msg-491217 There was a bug (primarily mismatch of memcached & libevent versions).

In what version of memcached is -E a valid flag ?

I always get :

memcached: invalid option — ‘E’

Hi Jeremy,

You should be using the version on Memcached that comes with MySQL cluster. This is based on version 1.6 of Memcached which adds the ability to add your own storage plugins (using the -E option).

Regards, Andrew.

Ah ha, that was it, thanks.

I’m onto the next thing to diagnose now :

Contacting primary management server (localhost:1186) …

FAILED.

Could not connect to NDB. Shutting down.

Segmentation fault

Though :

ndb_mgm -e show

Gives :

Connected to Management Server at: localhost:1186

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=3 @127.0.0.1 (mysql-5.5.19 ndb-7.2.4, Nodegroup: 0, Master)

id=4 @127.0.0.1 (mysql-5.5.19 ndb-7.2.4, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @127.0.0.1 (mysql-5.5.19 ndb-7.2.4)

[mysqld(API)] 1 node(s)

id=50 @127.0.0.1 (mysql-5.5.19 ndb-7.2.4)

Hi Jeremy,

Add a second [mysqld] section to your config.ini and then restart your cluster – the plugin needs 2 connections.

Andrew.

Hi,

I’ve created a following table:

CREATE TABLE `samples` (

`id` int(11) NOT NULL auto_increment,

`descr` varchar(255) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=NDB DEFAULT CHARSET=utf8;

I’ve also defined a container and a key-prefix. Everything works, but every time when I want to add a new value I have to give a key explicitly, for example (assuming that I already have four records in a table):

add s:5 0 0 3

abc

After that, I get a duplice key error message when I want to add a new record with this query:

insert into samples (descr) values (‘test’);

How do I have to use a memcached API to benefit from having an auto_increment id column in my table?

Kind regards,

Kamil

hi

while starting the memcached from ./mysql/bin directory with -e and -E option faceing a ERROR that “could not able to connect to management node” but i gave ip address properly…

later i installed memcached tar file and lib memcached then while starting memcached server it will not support -e an -E option…but without that its started…but there is no interaction with ndbmemcache database table…is -e and -E option is mandatary…

can any one help meout in finding the solution to this..

Hi Deepak,

make sure that you use the memcached that comes with MySQL Cluster 7.2 rather than downloading your own version.

Andrew.

This was a really helpful walkthrough. Especially the bit about mapping memcache key prefixes. Thanks!

Hi

I tried to acces the Blob data columns(from mysql cluster) which i have assigned as value column for memcached for NDB cluster after configuring the container using get and set command of memcached.

it gives me following error :

Unsupported column type: img

>51 CLIENT_ERROR value too big

Here “img” is a columns with type BLOB and it is configured as a value column and one of the key column “image_id” for memcached-NDB cluster.

Any one help me to solve this problem?

Here’s the short answer: if you either use the prefix “b:” at the beginning of the key, or you start memcached with the -e role=large option, then this should work better. For the longer answer, see http://mysqlblog.lenoxway.net/index.php?/archives/19-Storing-long-values-with-NDB-and-Memcache.html

[…] more information on Memcached or MySQL you can read this great blog of clusterdb, he explains it really well, […]

Thank you for the excelent article.

I just installed the whole set on my Macbook Pro 2008, running Snow Leopard 64 bit, readapt my C++ application to run using the cluster and it is working like magic.

I had to play a bit with the ./configure parameters, $PATH, otool and DYLD_LIBRARY_PATH, but using your tips it worked like a charm.

I also installed libmemcached, mysql++, germand, etc. and everything is working just fine.

I would like to know if you have more documentation about the cluster parameters, especially on the memcached. I really need to increase the memory limits for the memcached server (at least 2Gb) and for the values (at least 4Mb), but I’m finding some problemas and the message that the new parameters are incompatible with the OLD ones (/usr/local/bin/memcached -I 4m -m 2048 -p 11211 -d).

I’m also geting this error using the “memdump” tool from the libmemcached, and “gdb-ing” it I found out that is a problem realated to memory allocation on the Server-side.

Thanks again and best regards,

Paulo Lellis

Hi Paulo,

you can find general information on configuring MySQL Cluster from http://dev.mysql.com/doc/refman/5.5/en/mysql-cluster-configuration.html.

Documentation specifically on using the Memcached driver for Cluster’s NDB API can be found at http://dev.mysql.com/doc/refman/5.5/en/mysql-cluster-ndbmemcache.html

Regards, Andrew.

Thanks for the infomation, Andrew.

Do you know if there’s any way of using any other char as column delimiter, as in the “demo_table_tabs” example, using the comma “,” as separator?

I mean, my application is already using the “:” as column separator and the adoption of comma “,” is an issue since it deals with monetary amounts (in english, decimal sep is “.”, thousand is “,”/ in latin-languages is the opposite, imagine the risk).

I checked the documentation but couldn’t find anything related.

Nevertheless, my app uses the “.” as key separator and using the command line option ‘-D=”:”‘ worked just fine.

Thanks again,

Paulo

How to change memcached allocated RAM size from 64 to custom one? as -m option doesn’t work with memcached provided with 7.2.5

Also can we use distributed functionality of memcached with this memcached?

Hi,

I am facing problem with ” open_connections_to_all_clusters() failed “.

My execution is below

# memcached -E /usr/lib64/ndb_engine.so -e “connectstring=10.160.141.144:1186;role=db-only” -vv -c 20 -u root -p 11211

18-Jul-2012 08:22:24 EDT NDB Memcache 5.5.22-ndb-7.2.6 started [NDB 7.2.6; MySQL 5.5.22]

Contacting primary management server (10.160.141.144:1186) …

Connected to “10.160.141.144:1186” as node id 6.

Retrieved 3 key prefixes for server role “db-only”.

The default behavior is that:

GET uses NDB only

SET uses NDB only

DELETE uses NDB only.

The 2 explicitly defined key prefixes are “b:” (demo_table_large) and “t:” (demo_table_tabs)

open_connections_to_all_clusters() failed

Failed to initialize instance. Error code: 255

Management node:

——————

ndb_mgm> show

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=2 @10.168.46.42 (mysql-5.5.22 ndb-7.2.6, Nodegroup: 0)

id=3 @10.160.253.24 (mysql-5.5.22 ndb-7.2.6, Nodegroup: 0, Master)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @10.160.141.144 (mysql-5.5.22 ndb-7.2.6)

[mysqld(API)] 4 node(s)

id=4 @10.168.46.42 (mysql-5.5.22 ndb-7.2.6)

id=5 @10.160.253.24 (mysql-5.5.22 ndb-7.2.6)

id=6 (not connected, accepting connect from any host)

id=7 (not connected, accepting connect from any host)

2 API’s are free for Memcached to connect cluster management node. but i am facing open_connections_to_all_clusters() failed still.

am i doing wrong anywhere? please help me on this.

thanks in advance.

Hi Muralidhar,

This is unusual. Can you show us what’s in your ndbmemcache.ndb_clusters table, and also try to start up with ‘debug=true’?

Thanks,

JD

HI JDD,

Thanks for your reply.

Here is the table output

————————-

mysql> select * from ndbmemcache.ndb_clusters;

+————+———————————–+————–+

| cluster_id | ndb_connectstring | microsec_rtt |

+————+———————————–+————–+

| 0 | nodeid=4;host=10.160.141.144:1186 | 250 |

+————+———————————–+————–+

1 row in set (0.00 sec)

Note: DataNode and SQL Node are on same box.

Here is the Debug output

—————————

# memcached -E /usr/lib64/ndb_engine.so -e “connectstring=10.160.141.144:1186;role=db-only;debug=true” -vv -c 20 -u root -p 11211

main –> ndb_initialize()

main –> connect_to_primary_cluster()

19-Jul-2012 01:16:29 EDT NDB Memcache 5.5.22-ndb-7.2.6 started [NDB 7.2.6; MySQL 5.5.22]

Contacting primary management server (10.160.141.144:1186) …

main –> ClusterConnectionPool::connect()

Connected to “10.160.141.144:1186” as node id 6.

main –> Configuration::fetch_meta_record()

main get_supported_version(): 1.2

main –> config_v1::read_configuration()

main get_server_role_id(): Name: “db-only” — ID: 1

main –> config_v1::get_policies()

main get_policies(): ndb-test: get-2 set-2 del-2 flush-2 addr-0x982bed0

main get_policies(): caching-with-local-deletes: get-3 set-3 del-1 flush-1 addr-0x9860f90

main get_policies(): ndb-read-only: get-2 set-4 del-4 flush-1 addr-0x98539c0

main get_policies(): ndb-only: get-2 set-2 del-2 flush-1 addr-0x98661b0

main get_policies(): caching: get-3 set-3 del-3 flush-1 addr-0x9827580

main get_policies(): memcache-only: get-1 set-1 del-1 flush-1 addr-0x982c1e0

main –> config_v1::get_connections()

main –> store_connection_pool_for_cluster()

main get_connections(): [0]: { 0 => “nodeid=4;host=10.160.141.144:1186” [rtt: 250]}

main get_connections(): clusters: 1

main –> config_v1::get_prefixes()

main QueryPlan(): Using Index: PRIMARY on Table: key_prefixes [SCAN]

main get_container_record(): “demo_table” found in database (demo_table).

main get_container_record(): “demo_ext” found in database (demo_table_large).

main get_container_record(): “demo_tabs” found in database (demo_table_tabs).

main –> config_v1::log_signon()

main set_initial_cas(): Sign On GCI: 0x13ffd0000000f | Node Id: [6] 0x60000000 | Engine bit: 0x1000000000

main set_initial_cas(): Initial CAS: 11258657081655296 0x27ffb060000000

main –> config_v1 destructor()

Retrieved 3 key prefixes for server role “db-only”.

The default behavior is that:

GET uses NDB only

SET uses NDB only

DELETE uses NDB only.

The 2 explicitly defined key prefixes are “b:” (demo_table_large) and “t:” (demo_table_tabs)

main –> Configuration::openAllConnections()

main –> ClusterConnectionPool::connect()

open_connections_to_all_clusters() failed

main –> ndb_destroy()

Failed to initialize instance. Error code: 255

Please let me know if you need any command output.

thanks

Why isn’t there an increment_column set for the role large ?

I am trying to use such architecture for large scale sessions storage and I’m in need for large object storage, beside the link at mysqlblog.lenoxway.net is down :/

I altered the schema of the demo_table_large to include a math_value column and updated the increment_column to include the new column name, after getting the following error when incrementing a key.

NDB INCR/DECR is not allowed for this key.

>70 SERVER_ERROR failed to store item

However, although the error is now gone and the field is being used yet it looks like memcached is not dealing with it normally even though records gets inserted there, the value keeps going high and never resets back to NULL which is not the case with the db-role where the math_value gets reset back to NULL after usage, I am still digging into the cause behind that though there isn’t too much info with the limitations and caveats of large object storage in MySQL Cluster + Memcached.

I also tried searching the web for a cached version of the blog post without hope.

Think you can help in that ? 🙂

Thanks!

The lenoxway site is indeed not the most stable, but I think it’s up again.

After changing the schema, you should either restart memcached (which is probably easiest, in a non-production environment) or trigger an online reconfiguration.

In order to do an INCR operation, memcached has to check for three things: that the container has a math column, that reads are allowed, and that writes are allowed. The “NDB INCR/DECR is not allowed” message means some part of this test failed.

I have already did verified that and was able to get the INCR operation to work fine as I mentioned, however, my main problems is the behavior now of the role large is not as same as db-only role. It looks like there’re some race condition letting the memcache client (PHP memcache module in my case) fail to detect that it created the session record the first time and keeps inserting and re-inserting it on and on again and again, that was not the case with the stock memcached as well as the db-role mode. The memcache PHP module creates a session-id.lock key and keeps it with values between 0 and 1, now after switching to the large role this key keeps incremented for so long and the page keeps stalled when executing for long as well, the records ends up with high values.

I am not sure what is exactly the root cause, I am still debugging though it is a very hectic process but what I am very sure from is, its not something from the module itself but from the new architecture.

Thanks!

hi

I met a problem.When I test memcahed with memslap(eg:/usr/local/libmemcached-1.0.8/bin/memslap –server=192.168.1.204:11211 –execute-number=5s –test=get –concurrency=20), the memcached server is not stable ,like errors

clients/execute.cc:73 Failure on read((269963072) SERVER HAS FAILED AND IS DISABLED UNTIL TIMED RETRY, host: 192.168.1.204:11211 -> libmemcached/get.cc:314) of Xx8OtiRdS9cEwleBFs3YjOSOGd77by2ywAMhlhIDrmJfXxrnpmBPAfhQ9oacnSbbepGNXRSEDntlccOQMbjZFOzGCPsrtRIOHbng

memslap SERVER ERROR, out of memory

another question :can I use the arch in production?can it work well under 1.5W read per second?

Please help

thanks very much

I’m trying to get large values to work, but the mysqlblog.lenoxway.net appears down, and there isn’t any Google/Wayback Machine archive for the post about it.

I can set values fine, but reading them returns an empty string.

Team,

I need to help to use memcache api with mysql cluster.

Also let us now where should i run this command.

memcached -E /usr/lib64/ndb_engine.so -e “connectstring=192.168.13.240:1186;role=db-only;debug=true” -vv -u root

Thanks

Ashok

I’ve been looking and have found no info on what data types are (not) supported. I’m mapping to a TEXT column which apparently is not supported through the memcache API. I’m using “5.6.15-ndb-7.3.4-cluster-gpl MySQL Cluster Community Server”. according to innodb-memcached-internals:

* value_columns: Must be mapped to a CHAR, VARCHAR, or BLOB column. There is no length restriction and the value can be NULL.

(from https://dev.mysql.com/doc/refman/5.6/en/innodb-memcached-internals.html)

What are the supported mappings?

I found something at the bottom of the README:

————————————————————————-

LIMITATIONS

————————————————————————-

“SET” and “ENUM” columns rely on metadata which is only properly available to

the MySQL server. While they may work in some situations, they are not

fully supported. Bitfields are not supported at all. TEXT and BLOB columns

are also not supported, though BLOB-like large values can be stored in an

external values table, as described above.

Also found this post:

http://mysqlblog.lenoxway.net/blog/2012/03/30/Storing-long-values-with-NDB-and-Memcache/

Hello. Please help me to solve this problem:

[root@localhost ~]# mysql -u root ndb_initialize()

main –> connect_to_primary_cluster()

16-Jul-2014 19:37:54 MSK NDB Memcache 5.6.17-ndb-7.3.5 started [NDB 7.3.5; MySQL 5.6.17]

Contacting primary management server (localhost:1186) …

main –> ClusterConnectionPool::connect()

Connected to “localhost:1186” as node id 54.

main –> Configuration::fetch_meta_record()

main –> Configuration::fetch_meta_record()

main –> Configuration::fetch_meta_record()

main –> Configuration::fetch_meta_record()

Failed to read configuration — shutting down.

(Did you run ndb_memcache_metadata.sql?)

main –> ndb_destroy()

Failed to initialize instance. Error code: 255

As you can see, i have loaded metadata, but memcached doesn’t work.

Sorry, it must be something like this:

[root@localhost ~]# mysql -u root ndb_initialize()

main –> connect_to_primary_cluster()

16-Jul-2014 19:36:46 MSK NDB Memcache 5.6.17-ndb-7.3.5 started [NDB 7.3.5; MySQL 5.6.17]

Contacting primary management server (localhost:1186) …

main –> ClusterConnectionPool::connect()

Connected to “localhost:1186” as node id 54.

main –> Configuration::fetch_meta_record()

main –> Configuration::fetch_meta_record()

main –> Configuration::fetch_meta_record()

main –> Configuration::fetch_meta_record()

Failed to read configuration — shutting down.

(Did you run ndb_memcache_metadata.sql?)

main –> ndb_destroy()

Failed to initialize instance. Error code: 255

There is something wrong with parsing but i hope you understand me.

[…] now i can tryout the example from link http://www.clusterdb.com/mysql-cluster/scalabale-persistent-ha-nosql-memcache-storage-using-mysql-cl… […]