This post builds upon the earlier article (Creating a simple Cluster on a single LINUX host) which explained how to install and run a Cluster where all of the nodes run on the same physical host.

The single host solution is not great for a real deployment – MySQL Cluster is designed to provide a High Availability (HA) solution by synchronously replicating data between data nodes – if all of the data nodes run on a single host that that machine is a single point of failure.

This article demonstrates how to split the nodes between hosts; the configuration will still be fairly simple, using just 2 machines but it should be obvious how to extend it to more.

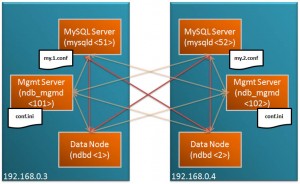

This new Cluster will be split across 2 machines, with all functions duplicated on each machine as shown in the diagram.

Downloading and installing

In the following example, host “ws1” has the IP Address 192.168.0.3 and “ws2” has 192.168.0.4.

Browse to the MySQL Cluster LINUX download page at mysql.com and download the correct version (32 or 64 bit) and store it in the desired directory (in my case, /home/billy/mysql) on both hosts and then extract and rename the new folder to something easier to work with…

[billy@ws1 mysql]$ tar xvf mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23.tar.gz [billy@ws1 mysql]$ mv mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23 7_0_6 [billy@ws2 mysql]$ tar xvf mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23.tar.gz [billy@ws2 mysql]$ mv mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23 7_0_6

Setup the files that will be needed for the MySQL Server processes (mysqld) to run correctly on each host…

[billy@ws1 mysql]$ cd 7_0_6 [billy@ws1 7_0_6]$ scripts/mysql_install_db --basedir=/home/billy/mysql/7_0_6 --datadir=/home/billy/mysql/7_0_6/data [billy@ws2 mysql]$ cd 7_0_6 [billy@ws2 7_0_6]$ scripts/mysql_install_db --basedir=/home/billy/mysql/7_0_6 --datadir=/home/billy/mysql/7_0_6/data

Configure and run the Cluster

Create a sub-directory called “conf” on each host and create the following 3 files:

config.ini – note that this file is used by the management nodes and as we’re going to run an ndb_mgmd process on each host, this is created on both ws1 and ws2

[ndbd default] noofreplicas=2 [ndbd] hostname=192.168.0.3 id=1 [ndbd] hostname=192.168.0.4 id=2 [ndb_mgmd] id = 101 hostname=192.168.0.3 [ndb_mgmd] id = 102 hostname=192.168.0.4 [mysqld] id=51 hostname=192.168.0.3 [mysqld] id=52 hostname=192.168.0.4

my.1.conf – to be used by the MySQL Server on ws1 and so store on ws1

[mysqld]

ndb-nodeid=51

ndbcluster

datadir=/home/billy/mysql/7_0_6/data

basedir=/home/billy/mysql/7_0_6

port=3306

server-id=51

log-bin

my.2.conf – to be used by the MySQL Server on ws2 and so store on ws2

[mysqld]

ndb-nodeid=52

ndbcluster

datadir=/home/billy/mysql/7_0_6/data

basedir=/home/billy/mysql/7_0_6

port=3306

server-id=52

log-bin

Those files configure the nodes that make up the Cluster. From a command prompt window, launch the management nodes:

[billy@ws1 7_0_6]$ bin/ndb_mgmd --initial -f conf/config.ini --configdir=/home/billy/mysql/7_0_6/conf [billy@ws2 7_0_6]$ bin/ndb_mgmd --initial -f conf/config.ini --configdir=/home/billy/mysql/7_0_6/conf

Check that the management nodes are up and running:

[billy@ws1 7_0_6]$ bin/ndb_mgm ndb_mgm> show Connected to Management Server at: localhost:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=1 (not connected, accepting connect from 192.168.0.3) id=2 (not connected, accepting connect from 192.168.0.4) [ndb_mgmd(MGM)] 2 node(s) id=101 @192.168.0.3 (mysql-5.1.34 ndb-7.0.6) id=102 (not connected, accepting connect from 192.168.0.4) [mysqld(API)] 2 node(s) id=51 (not connected, accepting connect from 192.168.0.3) id=52 (not connected, accepting connect from 192.168.0.4) ndb_mgm> quit [billy@ws1 7_0_6]$ bin/ndb_mgm -c 192.168.0.4:1186 ndb_mgm> show Connected to Management Server at: 192.168.0.4:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=1 (not connected, accepting connect from 192.168.0.3) id=2 (not connected, accepting connect from 192.168.0.4) [ndb_mgmd(MGM)] 2 node(s) id=101 (not connected, accepting connect from 192.168.0.3) id=102 @192.168.0.4 (mysql-5.1.34 ndb-7.0.6) [mysqld(API)] 2 node(s) id=51 (not connected, accepting connect from 192.168.0.3) id=52 (not connected, accepting connect from 192.168.0.4) ndb_mgm> quit

and then start the 2 data nodes (ndbd) and 2 MySQL API/Server nodes (mysqld) and then check that they’re all up and running:

[billy@ws1 7_0_6]$ bin/ndbd --initial -c localhost:1186

2009-06-17 13:05:47 [ndbd] INFO -- Configuration fetched from 'localhost:1186', generation: 1

[billy@ws2 7_0_6]$ bin/ndbd --initial -c localhost:1186

2009-06-17 13:05:51 [ndbd] INFO -- Configuration fetched from 'localhost:1186', generation: 1

[billy@ws1 7_0_6]$ bin/mysqld --defaults-file=conf/my.1.conf&

[billy@ws2 7_0_6]$ bin/mysqld --defaults-file=conf/my.2.conf&

[billy@ws1 7_0_6]$ bin/ndb_mgm

-- NDB Cluster -- Management Client --

ndb_mgm> show

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=1 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0, Master)

id=2 @192.168.0.4 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=101 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)

id=102 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)

[mysqld(API)] 2 node(s)

id=51 @192.168.0.3 (mysql-5.1.34 ndb-7.0.6)

id=52 @192.168.0.4 (mysql-5.1.34 ndb-7.0.6)

ndb_mgm> quit

Using the Cluster

There are now 2 API nodes/MySQL Servers/mysqlds running on the 2 different hosts; both accessing the same data. Each of those nodes can be accessed by the mysql client using the hostname and ports that were configured in the my.X.cnf files. For example, we can access the first of those nodes (node 51) in the following way (each API node is accessed using the host and port number in its associate my.X.cnf file:

[billy@ws1 7_0_6]$ bin/mysql -h localhost -P 3306

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 4

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test;

Database changed

mysql> create table assets (name varchar(30) not null primary key,

-> value int) engine=ndb;

Query OK, 0 rows affected (0.99 sec)

mysql> insert into assets values ('Car','1900');

Query OK, 1 row affected (0.03 sec)

mysql> select * from assets;

+------+-------+

| name | value |

+------+-------+

| Car | 1900 |

+------+-------+

1 row in set (0.00 sec)

mysql> quit

Bye

If you see “ERROR 1130 (HY000): Host ‘192.168.0.3’ is not allowed to connect to this MySQL server” then you need to set up the privileges (repeat on each host) – in a real system, you’d likely want tighter security that this:

[billy@ws1 7_0_6]$ bin/mysql -u root Welcome to the MySQL monitor. Commands end with ; or g. Your MySQL connection id is 3 Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL) Type 'help;' or 'h' for help. Type 'c' to clear the current input statement. mysql> GRANT ALL ON *.* TO ''@'localhost'; Query OK, 0 rows affected (0.00 sec) mysql> GRANT ALL ON *.* TO ''@'192.168.0.4'; Query OK, 0 rows affected (0.00 sec) mysql> quit Bye [billy@ws2 7_0_6]$ bin/mysql -u root Welcome to the MySQL monitor. Commands end with ; or g. Your MySQL connection id is 8 Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL) Type 'help;' or 'h' for help. Type 'c' to clear the current input statement. mysql> GRANT ALL ON *.* TO ''@'localhost'; Query OK, 0 rows affected (0.01 sec) mysql> GRANT ALL ON *.* TO ''@'192.168.0.3'; Query OK, 0 rows affected (0.00 sec) mysql> quit Bye

Note that as this table is using the ndb (MySQL Cluster) storage engine, the data is actually held in the data nodes rather than in the SQL node and so we can access the exact same data from the the other SQL nodes:

[billy@ws1 7_0_6]$ bin/mysql -h 192.168.0.4 -P 3306

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 10

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from assets;

+------+-------+

| name | value |

+------+-------+

| Car | 1900 |

+------+-------+

1 row in set (0.01 sec)

mysql> quit

Bye

Your next steps

This is still a fairly simple, contrived set up. Hopefully it’s clear how additional data or SQL nodes could be added and in a larger deployment you may well decide to run the management and SQL nodes on different hosts to the data nodes (in fact, when starting up the management nodes there is a warning message suggesting you deploy them elsewhere!).

To move the management node to a 3rd, independent physical host (and a 4th one if you want 2 management nodes for redundancy -a future article will explain when you might want to do that), just change the IP address in the [ndb_mgmd] section of config.ini and then run the ndb_mgmd executable on that new host. Note that the management node consumes very few resources and so can share that host with other functions/applications (e.g. SQL Server nodes but not data nodes).

You’d also set several more variables in the configuration files in order to size and tune your Cluster.

In this article, I used 2 LINUX hosts but you could extend the Windows example introduced in My first Cluster running on Windows in exactly the same way.