This post builds upon the earlier article (Creating a simple Cluster on a single LINUX host) which explained how to install and run a Cluster where all of the nodes run on the same physical host.

The single host solution is not great for a real deployment – MySQL Cluster is designed to provide a High Availability (HA) solution by synchronously replicating data between data nodes – if all of the data nodes run on a single host that that machine is a single point of failure.

This article demonstrates how to split the nodes between hosts; the configuration will still be fairly simple, using just 2 machines but it should be obvious how to extend it to more.

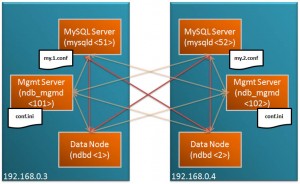

This new Cluster will be split across 2 machines, with all functions duplicated on each machine as shown in the diagram.

Downloading and installing

In the following example, host “ws1” has the IP Address 192.168.0.3 and “ws2” has 192.168.0.4.

Browse to the MySQL Cluster LINUX download page at mysql.com and download the correct version (32 or 64 bit) and store it in the desired directory (in my case, /home/billy/mysql) on both hosts and then extract and rename the new folder to something easier to work with…

[billy@ws1 mysql]$ tar xvf mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23.tar.gz [billy@ws1 mysql]$ mv mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23 7_0_6 [billy@ws2 mysql]$ tar xvf mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23.tar.gz [billy@ws2 mysql]$ mv mysql-cluster-gpl-7.0.6-linux-x86_64-glibc23 7_0_6

Setup the files that will be needed for the MySQL Server processes (mysqld) to run correctly on each host…

[billy@ws1 mysql]$ cd 7_0_6 [billy@ws1 7_0_6]$ scripts/mysql_install_db --basedir=/home/billy/mysql/7_0_6 --datadir=/home/billy/mysql/7_0_6/data [billy@ws2 mysql]$ cd 7_0_6 [billy@ws2 7_0_6]$ scripts/mysql_install_db --basedir=/home/billy/mysql/7_0_6 --datadir=/home/billy/mysql/7_0_6/data

Configure and run the Cluster

Create a sub-directory called “conf” on each host and create the following 3 files:

config.ini – note that this file is used by the management nodes and as we’re going to run an ndb_mgmd process on each host, this is created on both ws1 and ws2

[ndbd default] noofreplicas=2 [ndbd] hostname=192.168.0.3 id=1 [ndbd] hostname=192.168.0.4 id=2 [ndb_mgmd] id = 101 hostname=192.168.0.3 [ndb_mgmd] id = 102 hostname=192.168.0.4 [mysqld] id=51 hostname=192.168.0.3 [mysqld] id=52 hostname=192.168.0.4

my.1.conf – to be used by the MySQL Server on ws1 and so store on ws1

[mysqld]

ndb-nodeid=51

ndbcluster

datadir=/home/billy/mysql/7_0_6/data

basedir=/home/billy/mysql/7_0_6

port=3306

server-id=51

log-bin

my.2.conf – to be used by the MySQL Server on ws2 and so store on ws2

[mysqld]

ndb-nodeid=52

ndbcluster

datadir=/home/billy/mysql/7_0_6/data

basedir=/home/billy/mysql/7_0_6

port=3306

server-id=52

log-bin

Those files configure the nodes that make up the Cluster. From a command prompt window, launch the management nodes:

[billy@ws1 7_0_6]$ bin/ndb_mgmd --initial -f conf/config.ini --configdir=/home/billy/mysql/7_0_6/conf [billy@ws2 7_0_6]$ bin/ndb_mgmd --initial -f conf/config.ini --configdir=/home/billy/mysql/7_0_6/conf

Check that the management nodes are up and running:

[billy@ws1 7_0_6]$ bin/ndb_mgm ndb_mgm> show Connected to Management Server at: localhost:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=1 (not connected, accepting connect from 192.168.0.3) id=2 (not connected, accepting connect from 192.168.0.4) [ndb_mgmd(MGM)] 2 node(s) id=101 @192.168.0.3 (mysql-5.1.34 ndb-7.0.6) id=102 (not connected, accepting connect from 192.168.0.4) [mysqld(API)] 2 node(s) id=51 (not connected, accepting connect from 192.168.0.3) id=52 (not connected, accepting connect from 192.168.0.4) ndb_mgm> quit [billy@ws1 7_0_6]$ bin/ndb_mgm -c 192.168.0.4:1186 ndb_mgm> show Connected to Management Server at: 192.168.0.4:1186 Cluster Configuration --------------------- [ndbd(NDB)] 2 node(s) id=1 (not connected, accepting connect from 192.168.0.3) id=2 (not connected, accepting connect from 192.168.0.4) [ndb_mgmd(MGM)] 2 node(s) id=101 (not connected, accepting connect from 192.168.0.3) id=102 @192.168.0.4 (mysql-5.1.34 ndb-7.0.6) [mysqld(API)] 2 node(s) id=51 (not connected, accepting connect from 192.168.0.3) id=52 (not connected, accepting connect from 192.168.0.4) ndb_mgm> quit

and then start the 2 data nodes (ndbd) and 2 MySQL API/Server nodes (mysqld) and then check that they’re all up and running:

[billy@ws1 7_0_6]$ bin/ndbd --initial -c localhost:1186

2009-06-17 13:05:47 [ndbd] INFO -- Configuration fetched from 'localhost:1186', generation: 1

[billy@ws2 7_0_6]$ bin/ndbd --initial -c localhost:1186

2009-06-17 13:05:51 [ndbd] INFO -- Configuration fetched from 'localhost:1186', generation: 1

[billy@ws1 7_0_6]$ bin/mysqld --defaults-file=conf/my.1.conf&

[billy@ws2 7_0_6]$ bin/mysqld --defaults-file=conf/my.2.conf&

[billy@ws1 7_0_6]$ bin/ndb_mgm

-- NDB Cluster -- Management Client --

ndb_mgm> show

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=1 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0, Master)

id=2 @192.168.0.4 (mysql-5.1.34 ndb-7.0.6, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=101 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)

id=102 @127.0.0.1 (mysql-5.1.34 ndb-7.0.6)

[mysqld(API)] 2 node(s)

id=51 @192.168.0.3 (mysql-5.1.34 ndb-7.0.6)

id=52 @192.168.0.4 (mysql-5.1.34 ndb-7.0.6)

ndb_mgm> quit

Using the Cluster

There are now 2 API nodes/MySQL Servers/mysqlds running on the 2 different hosts; both accessing the same data. Each of those nodes can be accessed by the mysql client using the hostname and ports that were configured in the my.X.cnf files. For example, we can access the first of those nodes (node 51) in the following way (each API node is accessed using the host and port number in its associate my.X.cnf file:

[billy@ws1 7_0_6]$ bin/mysql -h localhost -P 3306

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 4

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test;

Database changed

mysql> create table assets (name varchar(30) not null primary key,

-> value int) engine=ndb;

Query OK, 0 rows affected (0.99 sec)

mysql> insert into assets values ('Car','1900');

Query OK, 1 row affected (0.03 sec)

mysql> select * from assets;

+------+-------+

| name | value |

+------+-------+

| Car | 1900 |

+------+-------+

1 row in set (0.00 sec)

mysql> quit

Bye

If you see “ERROR 1130 (HY000): Host ‘192.168.0.3’ is not allowed to connect to this MySQL server” then you need to set up the privileges (repeat on each host) – in a real system, you’d likely want tighter security that this:

[billy@ws1 7_0_6]$ bin/mysql -u root Welcome to the MySQL monitor. Commands end with ; or g. Your MySQL connection id is 3 Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL) Type 'help;' or 'h' for help. Type 'c' to clear the current input statement. mysql> GRANT ALL ON *.* TO ''@'localhost'; Query OK, 0 rows affected (0.00 sec) mysql> GRANT ALL ON *.* TO ''@'192.168.0.4'; Query OK, 0 rows affected (0.00 sec) mysql> quit Bye [billy@ws2 7_0_6]$ bin/mysql -u root Welcome to the MySQL monitor. Commands end with ; or g. Your MySQL connection id is 8 Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL) Type 'help;' or 'h' for help. Type 'c' to clear the current input statement. mysql> GRANT ALL ON *.* TO ''@'localhost'; Query OK, 0 rows affected (0.01 sec) mysql> GRANT ALL ON *.* TO ''@'192.168.0.3'; Query OK, 0 rows affected (0.00 sec) mysql> quit Bye

Note that as this table is using the ndb (MySQL Cluster) storage engine, the data is actually held in the data nodes rather than in the SQL node and so we can access the exact same data from the the other SQL nodes:

[billy@ws1 7_0_6]$ bin/mysql -h 192.168.0.4 -P 3306

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 10

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from assets;

+------+-------+

| name | value |

+------+-------+

| Car | 1900 |

+------+-------+

1 row in set (0.01 sec)

mysql> quit

Bye

Your next steps

This is still a fairly simple, contrived set up. Hopefully it’s clear how additional data or SQL nodes could be added and in a larger deployment you may well decide to run the management and SQL nodes on different hosts to the data nodes (in fact, when starting up the management nodes there is a warning message suggesting you deploy them elsewhere!).

To move the management node to a 3rd, independent physical host (and a 4th one if you want 2 management nodes for redundancy -a future article will explain when you might want to do that), just change the IP address in the [ndb_mgmd] section of config.ini and then run the ndb_mgmd executable on that new host. Note that the management node consumes very few resources and so can share that host with other functions/applications (e.g. SQL Server nodes but not data nodes).

You’d also set several more variables in the configuration files in order to size and tune your Cluster.

In this article, I used 2 LINUX hosts but you could extend the Windows example introduced in My first Cluster running on Windows in exactly the same way.

[…] physical hosts in the interests of performance and redundancy (take a look at the new article (Deploying MySQL Cluster over multiple host) to see how to do that). You’d also set several more variables in the configuration files in […]

[…] will focus on setting up the replication, you can refer to Deploying MySQL Cluster over multiple hosts for the steps to configure and run each MySQL Cluster […]

What about error:

Can’t connect to local mysql server through socket ‘/tmp/mysql.sock’ ?

That implies that the mysqld is using a non-standard location for its socket. A couple of options:

connect using the IP Address/port number: mysql -h 192.168.0.4 -P 3306 (where the port number is defined in the defaults file that you specify when starting mysqld)

When you start the mysqld process, it should tell you the location it’s using for the socket; you can then specify that location using the “mysql -S” option

Specify where you want the socket in the defaults file you use when starting mysqld by including “socket=/tmp/mysql.sock” which is where the mysql is looking by default (obviously, each mysqld on the same host would need to use a different socket

Regards, Andrew.

Hi,

This setup works perfectly for with out errors

Regards,

Hari

Hi

I want to used 3 hosts in mysql-cluster.

2 node for ndbd, and 1 node for ndb_mgmnd,

can you show me the tutorial

thanks

Just a quick note to say “Thank you”. This post was extremely helpful in understanding the basics of how each piece of the MySQL cluster works together. Thank you for taking the time to share this with the community.

DO I create the my.1.cnf and my.2.cnf on both servers?

If so, I get the following error:

-bash-3.2# bin/ndb_mgmd –initial -f conf/config.ini –configdir=/usr/local/mysql-cluster/conf

2010-04-21 13:39:16 [MgmtSrvr] INFO — NDB Cluster Management Server. mysql-5.1.41 ndb-7.0.13

2010-04-21 13:39:16 [MgmtSrvr] INFO — Reading cluster configuration from ‘conf/config.ini’

2010-04-21 13:39:16 [MgmtSrvr] WARNING — at line 26: Cluster configuration warning:

arbitrator with id 101 and db node with id 1 on same host 194.145.200.17

arbitrator with id 102 and db node with id 2 on same host 194.145.200.171

Running arbitrator on the same host as a database node may

cause complete cluster shutdown in case of host failure.

2010-04-21 13:39:17 [MgmtSrvr] INFO — Reading cluster configuration from ‘conf/config.ini’

2010-04-21 13:39:17 [MgmtSrvr] WARNING — at line 26: Cluster configuration warning:

arbitrator with id 101 and db node with id 1 on same host 194.145.200.17

arbitrator with id 102 and db node with id 2 on same host 194.145.200.171

Running arbitrator on the same host as a database node may

cause complete cluster shutdown in case of host failure.

server1=194.145.200.17

server2=194.145.200.171

What could be wrong?

Hi Michiel,

there should be just one instance of each my.*.cnf file and each my.*.cnf file should be used with just one mysqld process. It looks like you’re trying to run 2 mysqld processes who have the same id defined in their my.*.cnf file.

Regards, Andrew.

[…] A series of quick-start guides are now available to get you up and running with MySQL Cluster in as little time as possible; they are available for LINUX/Mac OS X, Windows and Solaris. The configuration is intentionally a simple one – 2 data nodes, 1 management node and 1 MySQL Server. Once you have this up and running, your next experiment may be to extend this over multiple hosts. […]

Hi Andrew,

Will it be necessary for the data nodes(say 2), management nodes(2) and the mysqld(2) nodes to be deployed in different machines (total of 6 machines)? Or just 4(2 data+mysqld nodes + 2 management nodes)?

Would you think implementing MySQL cluster in a virtualized(Xen) environment good? My current setup would have two physical servers (8GB available each), no SAN, each machine hosting three VMs (VM for manager, VM for data+sqld #1, VM for data+sqld #2). Totaling 6 VMs overall.

Manuel,

the minimum fault-tolerant configuration is 3 servers – the reason for this is described in http://www.clusterdb.com/mysql-cluster/mysql-cluster-fault-tolerance-impact-of-deployment-decisions/

MySQL Cluster is not tested on XEN and you may run into technical issues. The support position is that if you find an error that is specific to running on XEN (or other VMs) then you need to look to the VM provider to provide a fix.

Andrew.

[…] I prefer installing MySQL Cluster from the tar ball as it gives me more control (for example, openSUSE is using MySQL Cluster 7.0 but I prefer to use […]

Hello

I did exactly what u said here .

After creating the config.ini and my.conf file

when i try to start management node i get the error

./ndb_mgmd: unknown option ‘–ndbcluster’

Can you help me

Thanks

It worked thanks

Is it possible to have mysql node on windows and datanodes on linux?

Hi Epico,

Yes – you could have MySQL Servers on Windows with Data Nodes on LINUX. The restriction to watch out for is that the MySQL Servers (and any other NDB API client) needs to be running on an architecture with the same endienness as the data nodes.

Andrew.

I found this very helpfull. I was able to get the cluster setup and working but at 18,080 rows in one of my tables. I get a table full error.

I am using 3 linux boxes running Ubuntu 9. and each box has 4gb of ram. Any help resolving this is greatly appreciated.

Thanks

Hi Tamer,

Great to hear that you’re up and running.

Did you set the DataMemory parameter in your config.ini? If not then the default is 80Mbytes and you may have hit that: http://dev.mysql.com/doc/refman/5.1/en/mysql-cluster-ndbd-definition.html#ndbparam-ndbd-datamemory

To check, run “ALL REPORT MEMORY” from within the ndb_mgm tool.

You may need to increase IndexMemory too.

Once you’ve edited your config.ini file, shut down the ndb_mgmd process and then start it again with the –initial option. Then restart each of your data nodes in turn (wait for the 1st data node to come back up before restarting the next one to avoid an outage) – do *not* use the –initial option when starting the data nodes unless you want to remove all of your data.

Andrew.

Great post got it working right away. I was hoping you could explain why the cluster warns that running the management server on the same host as the data node is not advised? I did some googling and couldn’t find a definitive answer.

Thanks!

somecallmemike,

The reason why the management node(s) shouldn’t be co-located with the data nodes is that by default one of the management nodes acts as an arbitrator in the case that one set of data nodes become isolated from the rest. I work through several use cases of how arbitration works and the impact of where you run the management node in this post.

Regards, Andrew.

I’ve set up my MySQL-Cluster as you expected (adding some additional variables), it started fine, but:

root@zm2:/etc/mysql# ndb_mgm

— NDB Cluster — Management Client —

ndb_mgm> show

Connected to Management Server at: localhost:1186

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=1 @192.168.180.44 (mysql-5.1.39 ndb-7.0.9, Nodegroup: 0, Master)

id=2 @127.0.0.1 (mysql-5.1.39 ndb-7.0.9, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=101 @127.0.0.1 (mysql-5.1.39 ndb-7.0.9)

id=102 @127.0.0.1 (mysql-5.1.39 ndb-7.0.9)

[mysqld(API)] 2 node(s)

id=51 (not connected, accepting connect from 192.168.180.44)

id=52 (not connected, accepting connect from 192.168.180.45)

ndb_mgm>

mysqld is running. And I have configured:

[mysqld]

ndb-nodeid = 51

server-id = 51

ndb-cluster

ndb-connectstring = 192.168.180.44

[mysql_cluster]

ndb-connectstring = 192.168.180.44

Since there is no error message and I couldn’t find anything — why doesn’t connect mysqld to the management daemon?

A little bit lost …

Thomas, the first thing I would do is to replace 127.0.0.1 with the real IP address (192.168.180.??). 127.0.0.1 only makes sense on that machine whereas the configuration data needs to be shared and interpreted on all hosts in the Cluster.

Please let me know how you get on.

Regards, Andrew.

I’m a newbie, jeje, but i found your post very clear, thanks a lot. I’m going to use it for my very first distributed architecture proyect (I’m a student). Hope everything goes well.

Hi Andrew ,

I have done to try .

Fist time clustering runing well , but after restart my window and runing again the second server mgm error “failed to report event to event log, error: 1502

can you give sugestion .

Thanks you .

IKetutG

IKetut,

to get more information, if you were using the start /B approach then try without it (you’ll need more windows to cope with the number of processes). After that check the log files that are created – there should be some clues there.

Regards, Andrew.

very very good article…each n everything is mentioned in a clear way…helpful for the beginers….

thanks…

Neel

It worked like charm.

Thanks.

I have configure everything as indicated in the post but I keep getting this message.

MySQL Cluster Management Server mysql-5.1.56 ndb-7.0.30

2012-03-13 17:50:15 [MgmtSrvr] WARNING — at line 27: Cluster configuration warning:

arbitrator with id 101 and db node with id 1 on same host 196.168.1.189

arbitrator with id 102 and db node with id 2 on same host 196.168.1.187

Running arbitrator on the same host as a database node may

cause complete cluster shutdown in case of host failure.

ruto@rutoc:~/mysql/7_0_6$

My.1.cnf exist in one machine and my.2.cnf exist on another machine while config.ini exist in both.What might be the problem? am using ubuntu 11.10.

In order to have a fault-tolerant system, you need to make sure that your management node runs on a different host to your data nodes. If you you co-locate them then it will work but if that host fails then your Cluster may shut down. See http://www.clusterdb.com/mysql-cluster/mysql-cluster-fault-tolerance-impact-of-deployment-decisions/ for more details.

Regards, Andrew.

I have Some error when i run

bin/mysqld –defaults-file=conf/my.2.conf&

And see this report.

[root@localhost cluster]# 120619 1:52:55 [ERROR] Can’t find messagefile ‘/var/tmp/cluser/share/english/errmsg.sys’

120619 1:52:55 [Warning] Can’t create test file /var/tmp/cluser/data/localhost.lower-test

120619 1:52:55 [Warning] Can’t create test file /var/tmp/cluser/data/localhost.lower-test

bin/mysqld: Can’t change dir to ‘/var/tmp/cluser/data/’ (Errcode: 2)

Plz help me fix it.

Quang,

Are you sure that you ran mysql-installdb for the datadir’s that you’re using for your mysqlds?

Andrew.

Andrew,

I installed mysql-5.5.20 ndb-7.2.5 but the data is not replicating from API to nodes or within nodes

ndb_mgm> show

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=2 @10.100.100.203 (mysql-5.5.20 ndb-7.2.5, Nodegroup: 0, Master)

id=3 @10.100.100.204 (mysql-5.5.20 ndb-7.2.5, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @10.100.100.206 (mysql-5.5.20 ndb-7.2.5)

[mysqld(API)] 1 node(s)

id=4 @10.100.100.205 (mysql-5.5.20 ndb-7.2.5)

ndb_mgm> 4 status

Node 4: connected (Version 7.2.5)

ndb_mgm>

created a table in API (10.100.100.205)

mysql> use test;

mysql> CREATE TABLE `test` ( `id` int(11) DEFAULT NULL ) ENGINE=ndbcluster DEFAULT CHARSET=latin1;

Query OK, 0 rows affected (0.08 sec)

but the table is not showing up in any of the nodes.

Balaji,

what makes you think that the data hasn’t been written to the data nodes? The normal way you’d check that it has would be reading the same data through another MySQL Server (mysqld) that is part of the same cluster. Judging by the output from ndb_mgm, you only have a single mysqld.

My bet is that the data is in fact being written to both data nodes – I see no reason to suspect otherwise.

Regards, Andrew.

Andrew,

I was trying to understand where the data is stored in the datanodes, so I did the following

1. Created a test table in test database

2. I see this table is stored here in SQL node

[root@slave05 test]# pwd

/usr/local/mysql/data/test

[root@slave05 test]# ls -ltr

total 12

-rw-rw—- 1 mysql mysql 0 Jun 25 10:33 test.ndb

-rw-rw—- 1 mysql mysql 8556 Jun 25 10:33 test.frm

3. Then I wanted to find out if this table is replicated to data nodes. But it isn’t.

[root@slave03 test]# pwd

/usr/local/mysql/data/test

[root@slave03 test]# ls -ltr

total 0

[root@slave03 test]#

[root@slave04 test]# pwd

/usr/local/mysql/data/test

[root@slave03 test]# ls -ltr

total 0

[root@slave03 test]#

slave05 is SQL node

slave03 and slave04 are data node

How/where to check if the data is written on data node?.

What you’re seeing on the MySQL servers is the data dictionary rather than the data itself. On the data nodes, there isn’t a different file for each table.

HI Andrew,

Very well written article.

I have 3 offices all connecting to a single database, on a server at my site.

Internet is very slow, so am looking to set up a server in each office, with replication. I assume the best way for everyone to have the same data is to use a cluster with master-master replication.

I will be using ubuntu, running on a VM.

What is the minimum number of machines you would recommend (3 or 4)?

Using asynchronous replication between the sites is the way to go if the connection between them isn’t fast and reliable. If you want to use MySQL Cluster then the minimum fault tolerant configuration for each site/cluster is 3 machines (the management node should run on a different machine than the data nodes or else it can result in the cluster shutting down in the event of network partitioning).

Regards, Andrew.

Hi Andrew,

Its a great article.

I have setup a mysql cluster using 7.0.31 release

I created the table test.assets as given above.

Here is what I did and the cluster is losing all the data;

1. Shutdown data node 1

2. Added the new rows

3. Made the node 1 up and data is in sync

4. Now shutdown data node 2

5. Added new rows;

6. After 2 minutes, shutdown the entire cluster using shutdown command.

7. Now when I make all nodes up, there is no data found including our assets table.

Entire data created is lost. Is that a known issue?

Thanks

Sugunakar

sugun, just to check – did you restart the data nodes with the –initial option? If so, that would explain it as this informs the data nodes to throw away all of their data.

Andrew.

Thanks Andrew, yeah you are right, I was using the initial option with data nodes.

Thanks for your help.

Regards

sugun

First of All, thanks alot for your effort.

Secondly, I had setup accordingly but mix with chown -R umysql data command and after restart i m getting this

120813 13:25:22 [Note] Plugin ‘FEDERATED’ is disabled. 120813 13:25:57 [Note] Starting Cluster Binlog Thread 120813 13:25:57 InnoDB: The InnoDB memory heap is disabled

Hi msq,

I don’t think that those messages are an issue – if you google them they seem to be harmless (and not connected to the NDB storage engine). Is your setup running?

Note that for a High Availability solution, the ndb_mgmd(s) shouldn’t be on the same hosts as the data nodes.

Andrew.

ndb_mgm> show

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=1 @127.0.0.1 (mysql-5.5.25 ndb-7.2.7, Nodegroup: 0, Master)

id=2 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=101 @192.168.1.40 (mysql-5.5.25 ndb-7.2.7)

id=102 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7)

[mysqld(API)] 2 node(s)

id=51 (not connected, accepting connect from 192.168.1.40)

id=52 (not connected, accepting connect from 192.168.1.42)

ndb_mgm> quit

[mysqlu@Store01p 727]$ bin/mysqld –defaults-file=conf/my.1.conf&

[1] 5121

[mysqlu@Store01p 727]$ 120813 16:41:34 [Note] Plugin ‘FEDERATED’ is disabled.

Thanks a lot for your reply.

I have got through these. basically my mysqld was already running on Node2 and upon shuting it manually got able to start mysqld.

First: Please tell me in which way we should power off these two nodes (commands wise please).

secondly, incase of power outage, in whet manner we should start them. (commands please)

thirdly, I want all of them(ndb_mgm, ndb, mysqld) on both to be auto on. what should I do?

Fourthly, I have setup a production env based upon your this multi host artilce. that is succesfull and up running but i want to back up their (both) cluster configs and in quick restoreable format. Could u please help me or any step by step doc?

msq,

mysqlds can be stopped by issuing the command “mysqladmin -u root -h 127.0.0.1 -P3306 shutdown” and can be started with “mysqld –defaults-file=xxxxx”. The rest of the Cluster can be stopped with “ndb_mgm -e shutdown”. You can safely start ndb_mgmds with the –initial option every time but if you do that for data nodes then all of your data will be deleted.

You can use the infrastructure offered by your OS to automate starting and stopping the processes (for example by placing entries in the /etc/init.d directory on Linux.

To back up your configuration data, copy your config.ini and my.cnf files. You can use the backup command within ndb_mgm to backup the data held in NDB tables and run regular MySQL backup commands for any other (i.e. InnoDB or MyISAM) tables. You can restore NDB tables using the ndb_restore command. These are documented in the MySQL Cluster documentation.

So nice of you for your answer, Now things are quit clear to me and special thanks for your being in tuch with your this blog which really helping others.

Well, a bit more is requested about following two points requested.

secondly, incase of power outage, in what manner we should start them.

thirdly, I want all of them(ndb_mgm, ndb, mysqld) on both to be auto on. what should I do? I have centos 5.4 x86_64 and mysql Cluster 7.2.7. which entries or files needed to be place?

atlast btw i have seen three very beautifull kids with u now own ur web. they are very nice.

So nice of you for your answer, Now things are quit clear to me and special thanks for your being in tuch with your this blog which really helping others.

Well, a bit more is requested about following two points.

secondly, incase of power outage, in what manner we should start them.

thirdly, I want all of them(ndb_mgm, ndb, mysqld) on both to be auto on. what should I do? I have centos 5.4 x86_64 and mysql Cluster 7.2.7. which entries or files needed to be place?

atlast btw i have seen three very beautifull kids with u now own ur web. they are very nice.

One another issue is.

I hv created a db “abc” on Node A using

create database abc; and on Node B db created automatically but when i restores backup on it using bin/mysql -u root -P 3306 -p abc < /home/myuser/abcDump.sql, it restores all tables to Node A including data inside it but no tables found on Node B.

should i use mdbcluster with restore command or what syntax?

Sorry for disturbing u again

Hi andrew,

I configured MGMT node and mysqld on single host. Data nodes are on two diff machines.

MGMT and data nodes are doing fine. I can get their status via ndb_mgm. But mysqld is not coming up. My steps are listed below:

on mgmt box, I just the scripts/mysql_install_db script and then configued a my.conf file.

scripts/mysql_install_db –basedir=/home/sugun/mysql/7_0_31 –datadir=/home/sugun/mysql/7_0_31/data

config.ini:

————–

[ndbd default]

noofreplicas=2

[ndbd]

hostname=192.168.56.2

Nodeid=1

[ndbd]

hostname=192.168.56.3

Nodeid=2

[ndb_mgmd]

Nodeid = 106

hostname=192.168.56.7

[mysqld]

Nodeid=55

hostname=192.168.56.7

My.conf file contents:

———

[mysqld]

ndb-nodeid=55

ndbcluster

datadir=/home/sugun/mysql/7_0_31/data

basedir=/home/sugun/mysql/7_0_31

port=3306

server-id=55

log-bin

When started the mysqld on its failing to start. Could you please help me out

$ bin/mysqld –defaults-file=conf/my.conf&

Below is the error message:

[sugun@ndb_mgr1 7_0_31]$ 120816 7:27:51 [Warning] No argument was provided to –log-bin, and –log-bin-index was not used; so replication may break when this MySQL server acts as a master and has his hostname changed!! Please use ‘–log-bin=ndb_mgr1-bin’ to avoid this problem.

120816 7:27:51 [Note] Plugin ‘FEDERATED’ is disabled.

bin/mysqld: Table ‘mysql.plugin’ doesn’t exist

120816 7:27:51 [ERROR] Can’t open the mysql.plugin table. Please run mysql_upgrade to create it.

120816 7:27:51 InnoDB: Initializing buffer pool, size = 8.0M

120816 7:27:51 InnoDB: Completed initialization of buffer pool

InnoDB: Log scan progressed past the checkpoint lsn 0 37356

120816 7:27:51 InnoDB: Database was not shut down normally!

InnoDB: Starting crash recovery.

InnoDB: Reading tablespace information from the .ibd files…

InnoDB: Restoring possible half-written data pages from the doublewrite

InnoDB: buffer…

InnoDB: Doing recovery: scanned up to log sequence number 0 44233

120816 7:27:51 InnoDB: Starting an apply batch of log records to the database…

InnoDB: Progress in percents: 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99

InnoDB: Apply batch completed

120816 7:27:52 InnoDB: Started; log sequence number 0 44233

120816 7:27:52 [Note] NDB: NodeID is 55, management server ‘192.168.56.7:1186’

120816 7:27:52 [Note] NDB[0]: NodeID: 55, all storage nodes connected

120816 7:27:52 [Note] Starting Cluster Binlog Thread

120816 7:27:52 [Note] Recovering after a crash using ndb_mgr1-bin

120816 7:27:52 [Note] Starting crash recovery…

120816 7:27:52 [Note] Crash recovery finished.

120816 7:27:52 [ERROR] Fatal error: Can’t open and lock privilege tables: Table ‘mysql.host’ doesn’t exist

Thanks

Sugun

Segun,

1st thing – if at all possible use a newer version of MySQL (7.2).

2nd thing – make sure that you use the Cluster basedir for mysql_install_db and then the mysqld from that basedir (rather than a non-Cluster mysqld or one that came with a different version of Cluster).

Andrew.

MSQ,

Please check the backup file and ensure that each table is having an extension like engine=ndbcluster.

If you open your backup file, you will see lot of lines as given below:

create table xxxx engine=’InnoDB’;

You have to change that entry to engine=’ndb’;

Then all tables will be created in the cluster.

I hv created a db “abc” on Node A using

create database abc; and on Node B db created automatically but when i restores backup on it using bin/mysql -u root -P 3306 -p abc < /home/myuser/abcDump.sql, it restores all tables to Node A including data inside it but no tables found on Node B.

should i use mdbcluster with restore command or what syntax?

msq – for MySQL Cluster, your best bet for backups is to use the online backup command within ndb_mgm and the retire using ndb_restore (note that you’ll need an unused [ndbpai] or [mysqld] section in your config.ini file for ndb_restore to connect to.

Andrew.

Thank you very much SUGUN AND ANDREW i have successfully rstored the databases on one and get on the second node.

There is one issue, i have setup the cluster accord to this article but when one of my mgm node got down (which show master in normal ruuning) the other one could not get online untill i manual online the master.. IS it possible that one will run in case of down of other one automatically

Is this possible to have one node available in case of down of other node automatically

[root@Store01p 727i686]# bin/ndb_mgm -e show

Connected to Management Server at: localhost:1186

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=1 @127.0.0.1 (mysql-5.5.25 ndb-7.2.7, starting, Nodegroup: 0)

id=2 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7, starting, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=101 @192.168.1.41 (mysql-5.5.25 ndb-7.2.7)

id=102 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7)

[mysqld(API)] 2 node(s)

id=51 (not connected, accepting connect from 192.168.1.41)

id=52 (not connected, accepting connect from 192.168.1.42)

wwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwww

1:

Please tell y this is hang on starting…

I have checked it by other user aswell but its still ??

Please help

When ever I issue command bin/mysqld –defaults-file=conf/my.2.conf&

I Got This output—

Neither mdbd is going out of “starting” nor this response understandable

==========================

121124 4:27:07 [Warning] No argument was provided to –log-bin, and –log-bin-index was not used; so replication may break when this MySQL server acts as a master and has his hostname changed!! Please use ‘–log-b in=Store02p-bin’ to avoid this problem.

121124 4:27:07 [Note] Plugin ‘FEDERATED’ is disabled.

121124 4:27:07 InnoDB: The InnoDB memory heap is disabled

121124 4:27:07 InnoDB: Mutexes and rw_locks use InnoDB’s own implementation

121124 4:27:07 InnoDB: Compressed tables use zlib 1.2.3

121124 4:27:07 InnoDB: Using Linux native AIO

121124 4:27:07 InnoDB: Initializing buffer pool, size = 128.0M

121124 4:27:07 InnoDB: Completed initialization of buffer pool

121124 4:27:07 InnoDB: highest supported file format is Barracuda.

121124 4:27:07 InnoDB: Waiting for the background threads to start

121124 4:27:08 InnoDB: 1.1.8 started; log sequence number 1595675

121124 4:27:43 [Note] Starting Cluster Binlog Thread

121124 4:27:43 [Note] Recovering after a crash using Store02p-bin

121124 4:27:43 [Note] Starting crash recovery…

121124 4:27:43 [Note] Crash recovery finished.

121124 4:27:43 [Note] Server hostname (bind-address): ‘0.0.0.0’; port: 5000

121124 4:27:43 [Note] – ‘0.0.0.0’ resolves to ‘0.0.0.0’;

121124 4:27:43 [Note] Server socket created on IP: ‘0.0.0.0’.

121124 4:27:43 [Note] Event Scheduler: Loaded 0 events

121124 4:27:43 [Note] bin/mysqld: ready for connections.

Version: ‘5.5.25a-ndb-7.2.7-gpl-log’ socket: ‘/tmp/mysql.sock’ port: 5000 MyS QL Cluster Community Server (GPL)

[admin@Store02p 727i686]$ 121124 4:28:13 [Warning] NDB : Tables not available after 30 seconds. Consider increasing –ndb-wait-setup value

fairsimple – you need to figure out why the data nodes aren’t starting correctly. Check the cluster logs – should be in the ndb_mgmd’s datadir and the ndbds’s logs – should be in their datadirs.

Inwhich dir do u think in following:

wwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwww

Connected to Management Server at: localhost:1186

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=1 @127.0.0.1 (mysql-5.5.25 ndb-7.2.7, starting, Nodegroup: 0)

id=2 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7, starting, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=101 @192.168.1.41 (mysql-5.5.25 ndb-7.2.7)

id=102 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7)

[mysqld(API)] 2 node(s)

id=51 (not connected, accepting connect from 192.168.1.41)

id=52 (not connected, accepting connect from 192.168.1.42)

[root@Store01p 727i686]# ls -la

total 544

drwxrwxrwx 15 admin root 4096 Nov 24 06:33 .

drwxrwxrwx 3 admin root 4096 Nov 24 02:22 ..

drwxr-xr-x 2 admin root 4096 Nov 24 04:55 bin

drwxr-xr-x 2 admin root 4096 Nov 24 06:34 conf

-rw-r–r– 1 admin root 17987 Jul 13 05:27 COPYING

drwxr-xr-x 6 admin root 4096 Nov 24 06:30 data

drwxr-xr-x 2 admin root 4096 Nov 24 02:12 docs

drwxr-xr-x 4 admin root 4096 Nov 24 02:12 include

-rw-r–r– 1 admin root 7604 Jul 13 05:27 INSTALL-BINARY

drwxr-xr-x 3 admin root 4096 Nov 24 02:11 lib

drwxr-xr-x 4 admin root 4096 Nov 24 02:12 man

drwxr-xr-x 10 admin root 4096 Nov 24 02:12 mysql-test

-rw-r–r– 1 admin root 416548 Nov 24 06:51 ndb_101_cluster.log

-rw-r–r– 1 admin root 536 Nov 24 06:34 ndb_101_out.log

-rw-r–r– 1 root root 4 Nov 24 06:33 ndb_101.pid

drwxr-x— 2 admin root 4096 Nov 24 02:38 ndb_1_fs

-rw-r–r– 1 admin root 26065 Nov 24 06:35 ndb_1_out.log

-rw-r–r– 1 admin root 4 Nov 24 06:35 ndb_1.pid

-rw-r–r– 1 admin root 2552 Jul 13 05:27 README

drwxr-xr-x 2 admin root 4096 Nov 24 02:12 scripts

drwxr-xr-x 29 admin root 4096 Nov 24 02:12 share

drwxr-xr-x 4 admin root 4096 Nov 24 02:11 sql-bench

drwxr-xr-x 2 admin root 4096 Nov 24 02:12 support-files

Secondly,

1:

Why its showning me “127.0.0.1” ?

id=1 @127.0.0.1 (mysql-5.5.25 ndb-7.2.7, starting, Nodegroup: 0)

2:

Do I have to setup IP in etc/hosts ?

Please help Its urgent—- I have been working with it for 4 days—-or if u could share skype id for some time so we can talk

Here are the logs on cluster on Node A same are on Node B

wwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwww

2012-11-24 07:49:37 [MgmtSrvr] INFO — Node 1: Initial start, waiting for 2 to connect, nodes [ all: 1 and 2 connected: 1 no-wait: ]

2012-11-24 07:49:39 [MgmtSrvr] INFO — Node 2: Initial start, waiting for 1 to connect, nodes [ all: 1 and 2 connected: 2 no-wait: ]

2012-11-24 07:49:40 [MgmtSrvr] INFO — Node 1: Initial start, waiting for 2 to connect, nodes [ all: 1 and 2 connected: 1 no-wait: ]

2012-11-24 07:49:42 [MgmtSrvr] INFO — Node 2: Initial start, waiting for 1 to connect, nodes [ all: 1 and 2 connected: 2 no-wait: ]

2012-11-24 07:49:43 [MgmtSrvr] INFO — Node 1: Initial start, waiting for 2 to connect, nodes [ all: 1 and 2 connected: 1 no-wait: ]

2012-11-24 07:49:45 [MgmtSrvr] INFO — Node 2: Initial start, waiting for 1 to connect, nodes [ all: 1 and 2 connected: 2 no-wait: ]

2012-11-24 07:49:46 [MgmtSrvr] INFO — Node 1: Initial start, waiting for 2 to connect, nodes [ all: 1 and 2 connected: 1 no-wait: ]

Still Same Output

wwwwwwwwwwwwwwwwwwwwwww

Connected to Management Server at: localhost:1186

Cluster Configuration

———————

[ndbd(NDB)] 2 node(s)

id=1 @192.168.1.41 (mysql-5.5.25 ndb-7.2.7, starting, Nodegroup: 0)

id=2 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7, starting, Nodegroup: 0)

[ndb_mgmd(MGM)] 2 node(s)

id=101 @192.168.1.41 (mysql-5.5.25 ndb-7.2.7)

id=102 @192.168.1.42 (mysql-5.5.25 ndb-7.2.7)

[mysqld(API)] 2 node(s)

id=51 (not connected, accepting connect from 192.168.1.41)

id=52 (not connected, accepting connect from 192.168.1.42)

No need of getting tension Andrew:;;;

I have solved it my self and finally got through it Now the things are working fine…

Its some where firewall issue though I have allowed 1186 and 5000 3306 but it wasnt working..

Just stoped the fw and its connected…Is there any other port hidden which should be permitted?

fairsimple – ports also need to be open for the data nodes to connect to each other and for the mysqlds to connect to the data nodes. Take a look at http://dev.mysql.com/doc/refman/5.5/en/mysql-cluster-ndbd-definition.html#ndbparam-ndbd-serverport to configure the ports used by the mysqlds to connect to the data nodes. Of course, you also have the ports that the mysqld allows clients to connect on (3306 by default).

Andrew.

do u refer any step by step cluster administration tutorials?

Any Db conversion tutorials?

Specially:

If one have mgm server on third node should he use that mgm’s Ip to auto failover and HA?

Hi Andrew

What about HA in your this article or if someone wants to have auto failover and have mgmd on third node which IP or setts he should do for web apps to use HA of mysql cluster

do u have any idea?

Hi

What about HA in your this article or if someone wants to have auto failover and have mgmd on third node which IP or setts he should do for web apps to use HA of mysql cluster

Hi,

having read this tutorial, I wonder why putting three nodes (mgmt,db,sql) on a single host in two host cluster is so problematic in terms of HA? In fact, we have all nodes doubled.Is it any technical reason that mgmt node cannot coexist with db node on the same host “Note that the management node consumes very few resources and so can share that host with other functions/applications (e.g. SQL Server nodes but not data nodes).” so that it affects HA? I have powerful-enough machine to host all of them.All FAQs simply are simply reapeating that one needs at least 4 hosts for full redundancy but don’t explain why..Will be grateful if someone could shed some light on it.

Thanks in advance.

p0is0n,

Take a look at http://www.clusterdb.com/mysql-cluster/mysql-cluster-fault-tolerance-impact-of-deployment-decisions/ for an explanation.

Andrew.

Andrew,

thanks for help.

From what I understood from this example, colocation of mgmt and data nodes implies that in case of a failure of the entire host with active arbitrator, service will be lost because it will end up with inactive arbitrator on the remaining node. Does that mean that failure of the arbitrator (that is:mgmd) does not effect in electing a new one?Not sure what is the difference between this scenario and another -when we have 2 hosts with mgmt nodes and the active arbitrator host fails entirely. I guess in both cases the new arbitrator election should happen. Pls advice. Thanks

p0i0n,

if you lost the host running the arbitrator and half of the data nodes then that doesn’t leave you with a quorum to elect a new arbitrator and so the only safe thing to do is shut down.

There should be no problem running the ndb_mgmd on Windows and everything else on Linux.

Andrew.

And one more thing – is it possible to have mysql+db nodes on linux hosts and management nodes on windows hosts? Will it work flawlessly and is supported configuration?Unfortunately, if I had to spare some resources for managements on external hosts, these would have to be windows boxes :/

Hi Andrew,

I’ve run into an issue in our environment, we are using mysql cluster manager to manage our data nodes.

It seems that the data nodes (running ndbmtd) assign random local ports, this is causing issues as our database tier is firewalled. Is there a way to force the multi threaded engine to specific ports?

Nick

Hi Nick,

take a look at http://dev.mysql.com/doc/refman/5.5/en/mysql-cluster-ndbd-definition.html#ndbparam-ndbd-serverport

Andrew.

i hv setup a two node cluster using ur article and migrated 04 database of volume 300MB each (each Server memory hv 8GB ) but hve two questions

1: each time I want to make any update in database its repsonce is deadly slow. y is this and how can overcome this?

2: tacking backup using “start backup” in ndb_mgm> but what if we have to restore some of files from that backup? how can i do this?

fairsimply,

as you’d expect, there are many reasons for why a database operation may be slow – depending on what your application is doing, what the schema looks like and how the database is configured. A good place to start would be this performance white paper.

Restores are performed using the ndb_restore command standalone command and not part of ndb_mgm.

Andrew.

Hello,

Thanks to this post, I successfully managed to have a working MySQL cluster, that’s great !

I now have a little question : I have two SQL nodes, so data can be accessed independently from mysql-1 or mysql-2.

Do you know if any MySQL connector (I’m thinking of PHP, for example) is able to handle two or more MySQL servers ?

I would like my application to be able to transparently load-balance its connections to MySQL, and also switch to the second MySQL node if the first fails.

Is it included in the MySQL connectors, so I would only have to specify something like : connect=mysql-1,mysql-2, or do I have to implement it myself ?

The ability to connect to multiple MySQL Servers is dependent on the connector (for example, I know that it’s supported by Connector/J). For PHP connectors, I’d suggest posting the question to http://forums.mysql.com/list.php?52

Andrew.

What ethernet links one would need to deploy 2 node ( 2 x dbd/2x sqld, that is: 1xdbd/1xmysqld on the same host) in master-master/master-slave config when used in CRM system (mostly textual data) in 25/50/100 concurrent users scenario?

Would 100Mbps for 25 users and 1000Mbps for 50/1000 users respectively be enough or should I forget the whole idea withouth 10Gbps link?

Could someone tell from their experience?

Thanks a lot.

Sire,

of course it’s going to be dependent on the application load but on the face of it the number of users you specify seems pretty low and so I think you could start with the networking you propose and see how it performs.

Andrew.

Andrew,

ok, that is understandable and before deployment one cannot really be precise with the estimation, however I can say that data transfers would be small text chunks, so probably equal to or even less than typical “web usage” scenario /but no flash or other heavy objects-only graphics will probably be those of GUI/.

However, in terms of cluster consistency- is there any minimum interconnect speed/max latency that breaks it or makes it? Should 1Gbps suffice for 50-100 concurrent users?

While 1Gbps infrastructure has pretty modest costs, a need to upgrade to 10Gbps may be costly.

Sire,

The speed of the connection required is dependent on the volume of data that’s being read and (especially) written. As you say, your requirements seem modest and so 1G may well be enough. Regarding latency of the network, the most sensitive area is the connection between data nodes – we recommend that you aim to have this less than 20ms (should be easy for a LAN, the reccommendation is really aimed as multi-data-center Clusters) but even then you can adjust the heartbeat settings to make the Cluster more tolerant of higher latencies.

Andrew.

Hi Andrew,

I am facing a problem while starting mysqld using

bin/mysqld –defaults-file=conf/my.1.conf&

OUTPUT goes like:

131013 5:24:57 [ERROR] Fatal error: Please read “Security” section of the manual to find out how to run mysqld as root!

131013 5:24:57 [ERROR] Aborting

131013 5:24:57 [Note] bin/mysqld: Shutdown complete

Please suggest I will be very thankful to you.

Thanks,

Achal

Vijay,

it looks like you’re attempting to run the mysqld while logged in as root – that’s blocked for security reasons, try running it as a normal user.

Andrew.

hi Andrew,

as u suggested in previous post, this time i am doing this with normal user but facing errors from the very starting,

[billy@cls1 mysql]$ scripts/mysql_install_db –basedir=/home/billy/mysql/ –datadir=/home/billy/mysql/data/

chown: changing ownership of `/home/billy/mysql/data/’: Operation not permitted

Cannot change ownership of the database directories to the ‘mysql’

user. Check that you have the necessary permissions and try again.

chmod mysql:mysql -R mysql done as i have renamed my extracted folder to mysql.

Please help me,

eagerly waiting for your positive responce.

Thanks,

Achal

Hi Achal,

where it’s neccessary to grant permissions, you need to be logged in as a user able to grant those permissions. Where you’re trying to run chmod, chown or similar commands you need the required authority – for example run them as root. The only root restricion is that when you run mysqld, you must *not* be the root user.

Regards, Andrew.

sure Andrew, will try the same and if error occurs will update you and

Thanks for helping me.

Achal

Hello I have an environment with 2 NDB MGM and 2 SQL when I start mysql does not connect at MGM in the log that failed to allocate nodeid is api at 10.139.139.94. Returned error: ‘No free node id found for mysqld (API). the ndbd starts and connects to MGM without error. ‘So that mysqld does not connect.

Could you please share the contents of your config.ini file?

Andrew.

Hi Admin ,

while running ndbd command on one of mysql server node ,below error i am facing :

[root@mysqlcluster2 bin]# ndbd

2015-03-28 22:55:19 [ndbd] INFO — Angel connected to ‘192.168.163.132:1186’

2015-03-28 22:55:19 [ndbd] ERROR — Failed to allocate nodeid, error: ‘Error: Could not alloc node id at 192.168.163.132 port 1186: Connection done from wrong host ip 192.168.163.129.’

[root@mysqlcluster2 bin]#

Can you please help me on this ? And also can you share your mail id ,so that i will share all config file …

Looks like you’re trying to run the ndbd process from a host that has an IP address (192.168.163.129) that isn’t included in an [ndbd] section in the config.ini file.

hello,

when i run bin/mysqld –defaults-file=conf/my.2.conf& , i am getting following errors.

1] 12618

ise@ise6:~/7_0_6$ 2015-04-18 12:14:24 0 [Warning] TIMESTAMP with implicit DEFAULT value is deprecated. Please use –explicit_defaults_for_timestamp server option (see documentation for more details).

2015-04-18 12:14:24 12618 [Warning] Can’t create test file /home/ise/7_0_6/data/ise6.lower-test

2015-04-18 12:14:24 12618 [Warning] Can’t create test file /home/ise/7_0_6/data/ise6.lower-test

2015-04-18 12:14:24 12618 [Warning] Buffered warning: Changed limits: max_open_files: 1024 (requested 5000)

2015-04-18 12:14:24 12618 [Warning] Buffered warning: Changed limits: table_cache: 431 (requested 2000)

2015-04-18 12:14:24 12618 [Warning] No argument was provided to –log-bin, and –log-bin-index was not used; so replication may break when this MySQL server acts as a master and has his hostname changed!! Please use ‘–log-bin=ise6-bin’ to avoid this problem.

bin/mysqld: File ‘./ise6-bin.index’ not found (Errcode: 13 – Permission denied)

2015-04-18 12:14:24 12618 [ERROR] Aborting

2015-04-18 12:14:24 12618 [Note] Binlog end

2015-04-18 12:14:24 12618 [Note] bin/mysqld: Shutdown complete

can u please help me with this?

It looks like the account that your starting the mysqld process with doesn’t have write permissions for the datadir that you’ve define..

/home/ise/7_0_6/data/.Andrew.

Thank you sir, the error has been resolved . now i am facing new issue wherein when i run bin/mysql -h 10.2.0.173 -P 3306 i am getting following error :

ERROR 1130 (HY000): Host ‘10.2.0.173’ is not allowed to connect to this MySQL server. please help me with this.

Thanks in advance.

Hi,

I had set up the cluster succesfully but am unable to succeed in connecting it with a jdbc driver.

When am giving

GRANT ALL PRIVILEGES ON *.* TO ‘root’@’%’ IDENTIFIED BY ‘password’;

its giving me the error

ERROR 1045 (28000): Access denied for user ‘root’@’localhost’ (using password: YES)

Any help?

Thanks in advance:)

You’re probably doing this from a user/host that doesn’t have permissions to grant permissions. Have you logged in as root?

Andrew.

Hi

I am getting the following error in the first step itself

scripts/mysql_install_db –basedir=/home/linux/mysql7 –datadir=/home/linux/mysql7/data

WARNING: The host ‘linux06

mongo1.simplify360.in’ could not be looked up with /home/linux/mysql7/bin/resolveip.

This probably means that your libc libraries are not 100 % compatible

with this binary MySQL version. The MySQL daemon, mysqld, should work

normally with the exception that host name resolving will not work.

This means that you should use IP addresses instead of hostnames

when specifying MySQL privileges !

Installing MySQL system tables…2015-05-06 15:06:21 0 [Warning] Using unique option prefix key_buffer instead of key_buffer_size is deprecated and will be removed in a future release. Please use the full name instead.

2015-05-06 15:06:21 0 [Warning] TIMESTAMP with implicit DEFAULT value is deprecated. Please use –explicit_defaults_for_timestamp server option (see documentation for more details).

2015-05-06 15:06:21 0 [Note] /home/linux/mysql7/bin/mysqld (mysqld 5.6.24-ndb-7.3.9-cluster-gpl) starting as process 2186 …

2015-05-06 15:06:21 2186 [ERROR] Can’t find messagefile ‘/usr/share/mysql/errmsg.sys’

Hi Andrew, I am getting the following error when I try to run this command.

bin/mysqld –defaults-file=conf/my.1.conf&

2015-05-06 16:42:06 0 [Warning] TIMESTAMP with implicit DEFAULT value is deprecated. Please use –explicit_defaults_for_timestamp server option (see documentation for more details).

2015-05-06 16:42:06 0 [Note] bin/mysqld (mysqld 5.6.24-ndb-7.3.9-cluster-gpl-log) starting as process 3542 …

2015-05-06 16:42:06 3542 [ERROR] Fatal error: Please read “Security” section of the manual to find out how to run mysqld as root!

2015-05-06 16:42:06 3542 [ERROR] Aborting

2015-05-06 16:42:06 3542 [Note] Binlog end

2015-05-06 16:42:06 3542 [Note] bin/mysqld: Shutdown complete

Can you please help on this.

Hi I finished almost to the end above mentioned two issues are resolved, but I am getting different type of error while executing the following command.

Command: bin/mysqld –defaults-file=/home/linux/mysql7/conf/my.1.conf –user=root&

Output:

bin/mysqld: File ‘mongo1-bin.000001’ not found (Errcode: 2 – No such file or directory)

2015-05-06 18:50:52 5362 [ERROR] Failed to open log (file ‘mongo1-bin.000001’, errno 2)

2015-05-06 18:50:52 5362 [ERROR] Could not open log file

2015-05-06 18:50:52 5362 [ERROR] Can’t init tc log

2015-05-06 18:50:52 5362 [ERROR] Aborting

Please help on this.

Can some one please help me on this ??

Presumably, you’re defining the name of the binlog file as

mongo1-binin yourmy.1.cnffile – have you checked that the directory you give is valid and that this user has read/write permissions for it?Andrew, thanks I solved all of the issues and able to run perfectly.

How want to access this remotely, what need to be changed to access the db remotely to create table, update and other operations?

You can connect from a remote host like this:

mysql -h:3306 . If your told that you don’t have permissions then issue aGRANTstatement on the target machine to allow remote connections from your remote machine… https://dev.mysql.com/doc/refman/5.6/en/grant.htmlAndrew.

I am getting some strange error, can you please help. Data is not getting replicated to another data node.

2015-06-19 23:28:17 23064 [Warning] NDB: Could not acquire global schema lock (4009)Cluster Failure

I’m afraid that I’m not directly involved with MySQL at the moment and so I’d suggest posting this question to the MySQL Cluster forum.

Hi Andrew!

Can you give me some ideas about load balancing between sql nodes.

Thank you so much! It’s so good if you can show or post some links about this topic 🙂

Some of the MySQL connectors (e.g. for JDBC or PHP) have this built in – best to check the documentation for your connector.

Hello Andrew;

thank you very much first off for the awesome site and all the help it provides.

i am busy deploying a mysql cluster to two servers and i have gotten the error as follows:

Use of uninitialized value in chown at /usr/local/mysql/scripts/mysql_install_db line 705.

2015-08-21 09:59:37 2771 [ERROR] Fatal error: Can’t change to run as user ‘mysql’ ; Please check that the user exists!

do you perhaps know where i have gone wrong.

i basically installed fresh Centos 6.6 downloaded the mysql-cluster tar from mysql unzip it into an install directory and then started the mysql autoinstaller put in the directives and hit deploy and start cluster.

would reply appreciate your help.

thanks again.

Thanks for your question. I’m no longer directly involved with MySQL (I’m now with MongoDB) and so I’d suggest that you seek help from http://forums.mysql.com/.

Best Regards, Andrew.

hi Andrew…

getting this

[root@pre-sv-wifi-msdp-idm1 mysql-cluster]# mysqld –defaults-file=my.1.conf&

[1] 21504

[root@pre-sv-wifi-msdp-idm1 mysql-cluster]# 2015-12-23 03:12:30 0 [Warning] TIMESTAMP with implicit DEFAULT value is deprecated. Please use –explicit_defaults_for_timestamp server option (see documentation for more details).

2015-12-23 03:12:30 0 [Note] mysqld (mysqld 5.6.24-log) starting as process 21504 …

2015-12-23 03:12:30 21504 [ERROR] Can’t find messagefile ‘/var/lib/mysql-cluster/datadir=/var/lib/mysql/share/mysql/errmsg.sys’

2015-12-23 03:12:30 21504 [Warning] Can’t create test file /var/lib/mysql-cluster/datadir=/var/lib/mysql/datadir=/var/lib/mysql/pre-sv-wifi-msdp-idm1.lower-test

2015-12-23 03:12:30 21504 [Warning] Can’t create test file /var/lib/mysql-cluster/datadir=/var/lib/mysql/datadir=/var/lib/mysql/pre-sv-wifi-msdp-idm1.lower-test

mysqld: Can’t change dir to ‘/var/lib/mysql-cluster/datadir=/var/lib/mysql/datadir=/var/lib/mysql/’ (Errcode: 2 – No such file or directory)

2015-12-23 03:12:30 21504 [ERROR] Aborting

2015-12-23 03:12:30 21504 [Note] Binlog end

2015-12-23 03:12:30 21504 [Note]

[1]+ Exit 1 mysqld –defaults-file=my.1.conf

[root@pre-sv-wifi-msdp-idm1 mysql-cluster]#

So how do I connect to both of the MYSQL server processes? Shouldn’t I be able to connect to both, so that if one goes down my application or whatever continues to work.

You can include both MySQL Servers in the connect string that you provide to your connector.

Question Regarding MySQL Cluster Ports

Hello,

I am on Linux platform with MySQL NDB 5.7. I am trying to monitor all traffic related to MySQL clustering – between data nodes, management node and sql nodes. To that end, I used netstat to list all open ports listening on my machine before starting MySQL cluster. Then, I started MySQL cluster and ran netstat again. I assumed that the ports that were listening the second time around, but not the first time, were related to MySQL clustering.

But there are two problems with this. First, there could be ports opened by other processes between the two netstat runs. Second, MySQL might open other ports after I ran the netstat command the second time.

What is the best way to go about finding all ports being used by MySQL for clustering purposes?

I believe ephemeral ports are picked dynamically, so perhaps if I knew all the MySQL clustering related processes that would be running, I can figure out every port that they are using. Pointers will be very welcome.

scripts/mysql_install_db –basedir=/var/lib/mysql/ –datadir=/var/lib/mysql/data/

FATAL ERROR: Could not find my-default.cnf

Why i got this error in the beggining of your document?

Thanks for your question but I’m afraid that I no longer work for Oracle (I’m now working for MongoDB) and so am not current on MySQL technology. I’d suggest checking the MySQL Cluster forum.

Hi how can I change localhost:8081 to other ip Address?

Thanks for your question but I’m afraid that I no longer work for Oracle (I’m now working for MongoDB) and so am not current on MySQL technology. I’d suggest checking the MySQL Cluster forum.