This post looks at the aggregation enhancements being introduced in MongoDB 3.2 – most notably $lookup which implements left-outer equi-joins in the MongoDB Aggregation Framework. The material was originally published in a MongoDB blog series.

It starts with an introduction to analyzing data with MongoDB. We then explain why joins are sometimes useful for MongoDB – in spite of the strengths of the document model – and how developers have been working without them. It then works through examples of building aggregation pipelines – including using the operators added in MongoDB 3.2. After that, we look at how geolocation data can be included as well as what to do when you reach the limit of what can be done using a single pipeline – including adding wrapper code. Finally, there’s a summary of some of the limitations of the Aggregation Framework and reasons why you might supplement it with a full visualization solution such as Tableau together with MongoDB’s Connector for BI (Business Intelligence) – also new in MongoDB 3.2.

Disclaimer

MongoDB’s product plans are for informational purposes only. MongoDB’s plans may change and you should not rely on them for delivery of a specific feature at a specific time.

Real-Time Analytics and Search

With the emergence of new data sources such as social media, mobile applications and sensor-equipped “Internet of Things” networks, organizations can extend analytics to deliver real-time insight and discovery into such areas as operational performance, customer satisfaction, and competitor behavior.

Time to value is everything. For example, having access to real-time customer sentiment or fleet tracking is of little benefit unless the data can be analyzed and reported in real-time.

MongoDB 3.2 aims to extend the options for performing analytics on the live, operational database – ensuring that answers are delivered quickly, and reflect current data. Work that would previously have needed to be done on the client side can now be performed by the database – freeing the developer to focus on new features.

The Case for Joins

MongoDB’s document data model is flexible and provides developers many options in terms of modeling their data. Most of the time all the data for a record tends to be located in a single document. For the operational application, accessing data is simple, high performance, and easy to scale with this approach.

When it comes to analytics and reporting, however, it is possible that the data you need to access spans multiple collections. This is illustrated in Figure 1, where the _id field of multiple documents from the products collection is included in a document from the orders collection. For a query to analyze orders and details about their associated products, it must fetch the order document from the orders collection and then use the embedded references to read multiple documents from the products collection. Prior to MongoDB 3.2, this work is implemented in application code. However, this adds complexity to the application and requires multiple round trips to the database, which can impact performance.

Figure 1: Application-Layer simulation of joins between documents

MongoDB 3.2 introduces the $lookup operator that can now be included as a stage in an aggregation pipeline. With this approach, the work of combining data from the orders and products collections is implemented within the database, and as part of a broader aggregation pipeline that performs other processing in a single query. As a result, there is less work to code in the application, and fewer round trips to the database. You can think about $lookup as equivalent to a left outer equi-join.

Aside – What is a Left Outer Equi-Join?

A left outer equi-join produces a result set that contains data for all documents from the left table (collection) together with data from the right table (collection) for documents where there is a match with documents from the left table (collection). This is illustrated in Figure 2.

Figure 2: Left-Outer join between collections

MongoDB’s Aggregation Framework

The Aggregation Framework is a pipeline for data aggregation modeled on the concept of data processing pipelines. Documents enter a multi-stage pipeline that transforms the documents into aggregated results. The pipeline consists of stages; each stage transforms the documents as they pass through.

In general, each successive stage reduces the volume of data; removing information that isn’t needed and combining other data to produce summarized results.

Figure 3 shows a conceptual model for the Aggregation Framework pipeline. This is what’s happening at each stage:

- On the left-hand side/start of the pipeline is the original collection contents – each record (document) containing a number of shapes (keys), each with a particular color (value)

- The

$match stage filters out any documents that don’t contain a red diamond

- The

$project stage adds a new “square” attribute with a value computed from the value (color) of the snowflake and triangle attributes

- The

$lookup stage (new in 3.2 – more details later) performs a left-outer join with another collection, with the star being the comparison key. This creates new documents which contain everything from the previous stage but augmented with data from any document from the second collection containing a matching colored star (i.e., the blue and yellow stars had matching “lookup” values, whereas the red star had none).

- Finally, the

$group stage groups the data by the color of the square and produces statistics (sum, average and standard deviation) for each group.

Figure 3: MongoDB Aggregation Framework pipeline

This is the full set of aggregation stages:

-

$match – Filter documents

-

$geoNear – Sort documents based on geographic proximity

-

$project – Reshape documents (remove or rename keys or add new data based on calculations on the existing data)

-

$lookup – Coming in 3.2 – Left-outer joins

-

$unwind – Expand documents (for example create multiple documents where each contains one element from an array from the original document)

-

$group – Summarize documents

-

$sample – Randomly selects a subset of documents

-

$sort – Order documents

-

$skip – Jump over a number of documents

-

$limit – Limit number of documents

-

$redact – Restrict sensitive content from documents

-

$out – Coming in 3.2* – store the results in a new collection

The details can be found in the documentation.

New Aggregation Operators in MongoDB 3.2

There are operators used within each stage and this set is being extended in MongoDB 3.2 to include:

- Array operations

-

$slice, $arrayElemAt, $concatArrays, $isArray, $filter, $min, $max, $avg and $sum (some of these were previously available in a $group stage but not in $project

- Standard Deviations

-

$stdDevSamp (based on a sample) and $stdDevPop (based on the complete population)

- Square Root

- Absolute (make +ve) value

- Rounding numbers

- Logarithms

- Raise to power

- Natural Exponent

Further details on these new operators can be found in the MongoDB 3.2 Release Notes.

$lookup – Left Outer Equi-Joins

Figure 4 illustrates the syntax for performing the join:

-

leftCollection is the collection that the aggregation is being performed on and is the left collection in the join

-

from identifies the collection that it will be joined with – the right collection (rightCollection in this case)

-

localField specifies the key from the original/left collection – leftVal

-

foreignField specifies the key from the right collection – rightVal

-

as indicates that the data from the right collection should be embedded within the resulting documents as an array called embeddedData

Figure 4: $lookup – Left-Outer Joins for MongoDB

In the follow-on blogs in this series, you’ll see how the data from a home sales collection (containing details of each home sale, including the property’s postal code) is joined with data from a postal code collection (containing postal codes and their geographical location). This produces documents that contain the original home sale information augmented with the coordinates of the property. In this case, the “homesales” collection is the left-collection and “postcodes” the right-collection; the “postcode” field from each collection is the localField which is matched with the foreignField.

Worked Examples

The Data Set

The examples use two data sets, the first being a comprehensive set of home sale data for a town and the second being a mapping from postal codes to geospatial locations for that same town.

For those interested, the imported data sets needed some cleaning up to make this walkthrough more useful – the steps are described here.

The two data sets can be checked using the mongo shell:

db.homeSales.findOne()

{

"_id": ObjectId("56005dd980c3678b19792b7f"),

"amount": 9000,

"date": ISODate("1996-09-19T00:00:00Z"),

"address": {

"nameOrNumber": 25,

"street": "NORFOLK PARK COTTAGES",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 7DR"

}

}

db.postcodes.findOne()

{

"_id": ObjectId("5600521e50fa77da54dfc0d2"),

"postcode": "SL6 0AA",

"location": {

"type": "Point",

"coordinates": [

51.525605,

-0.700974

]

}

}

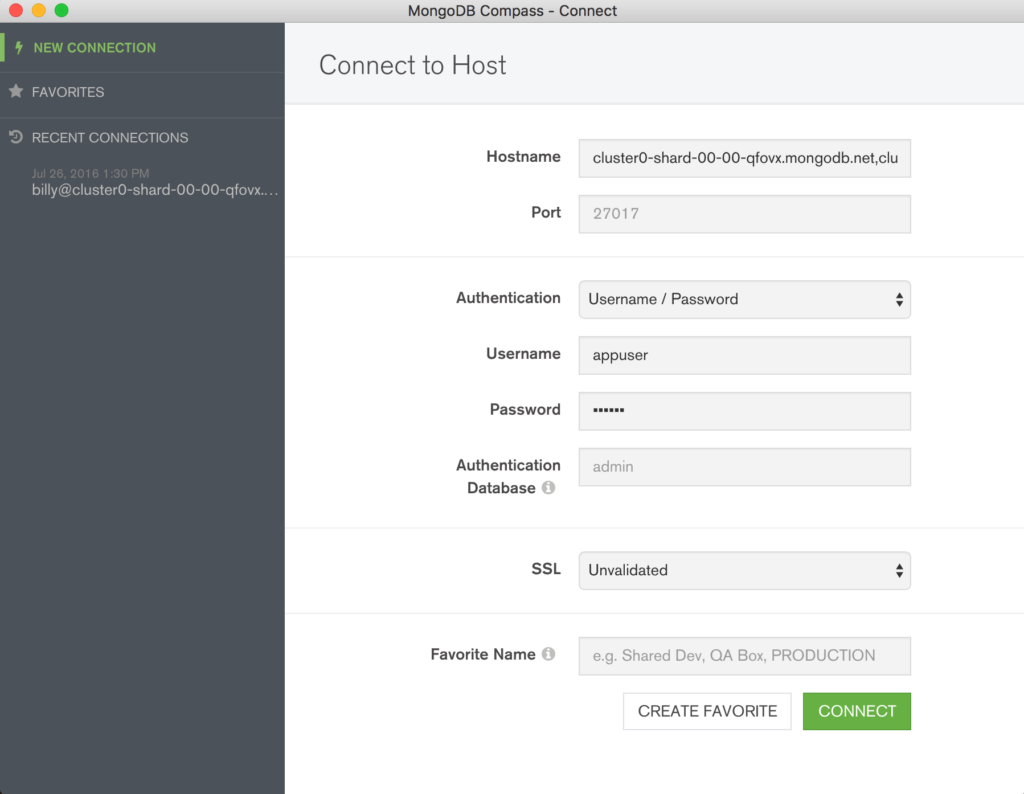

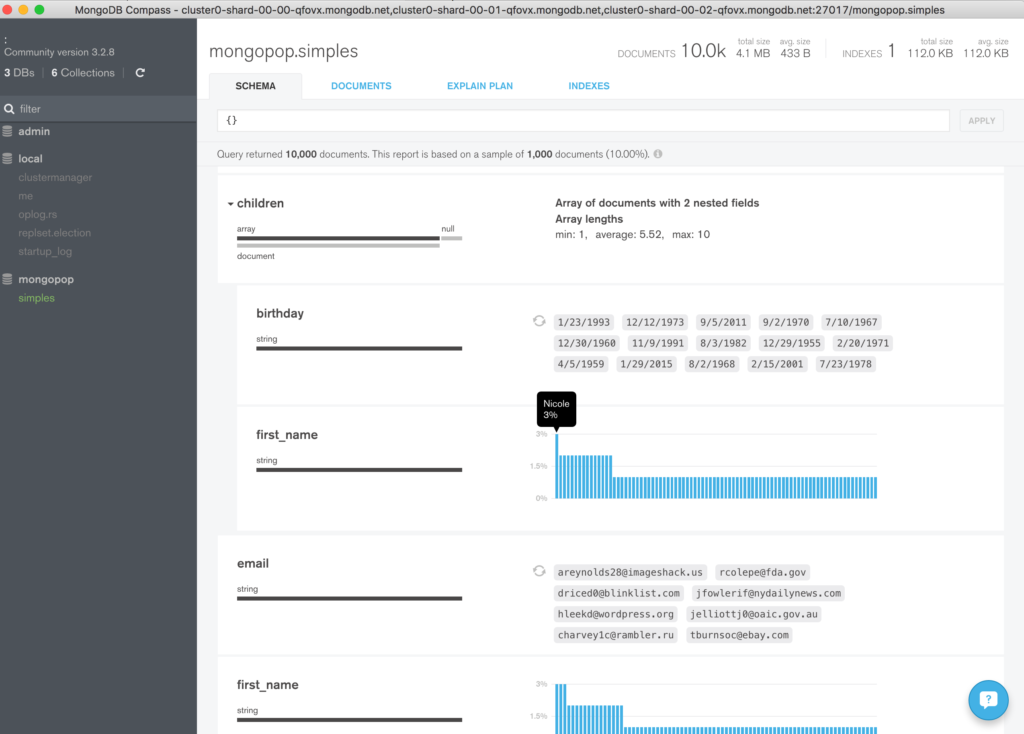

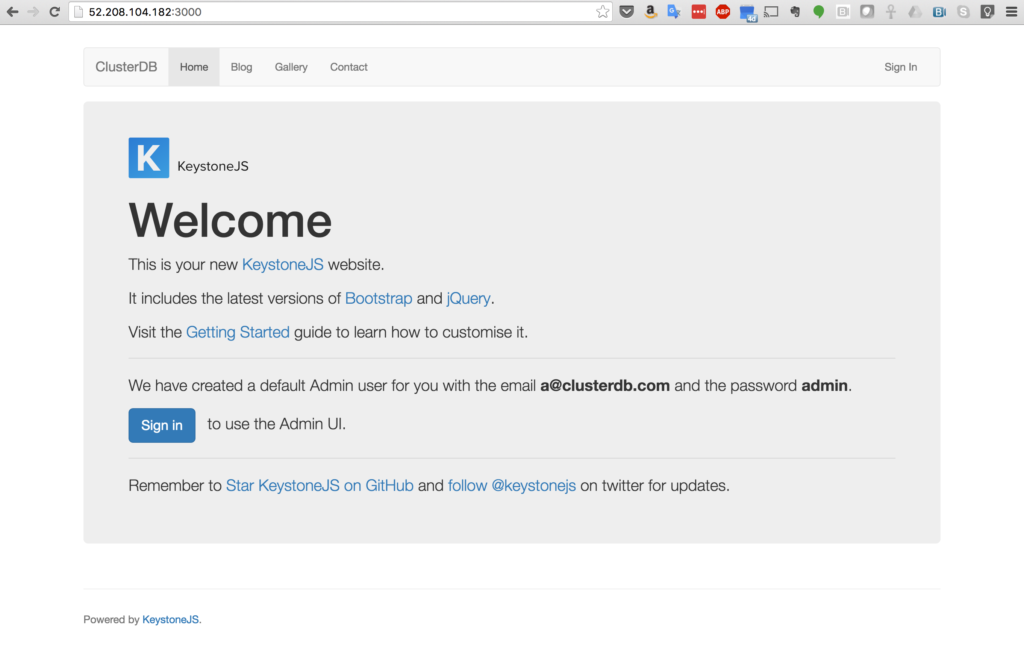

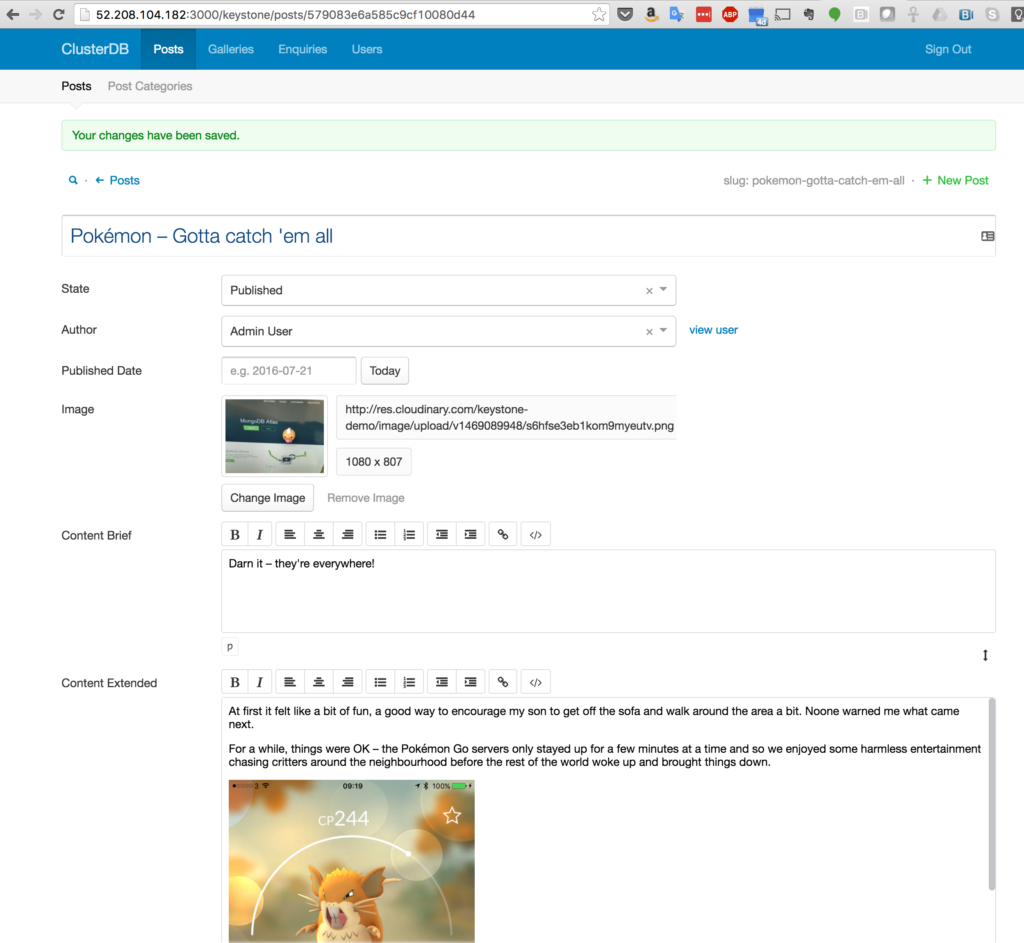

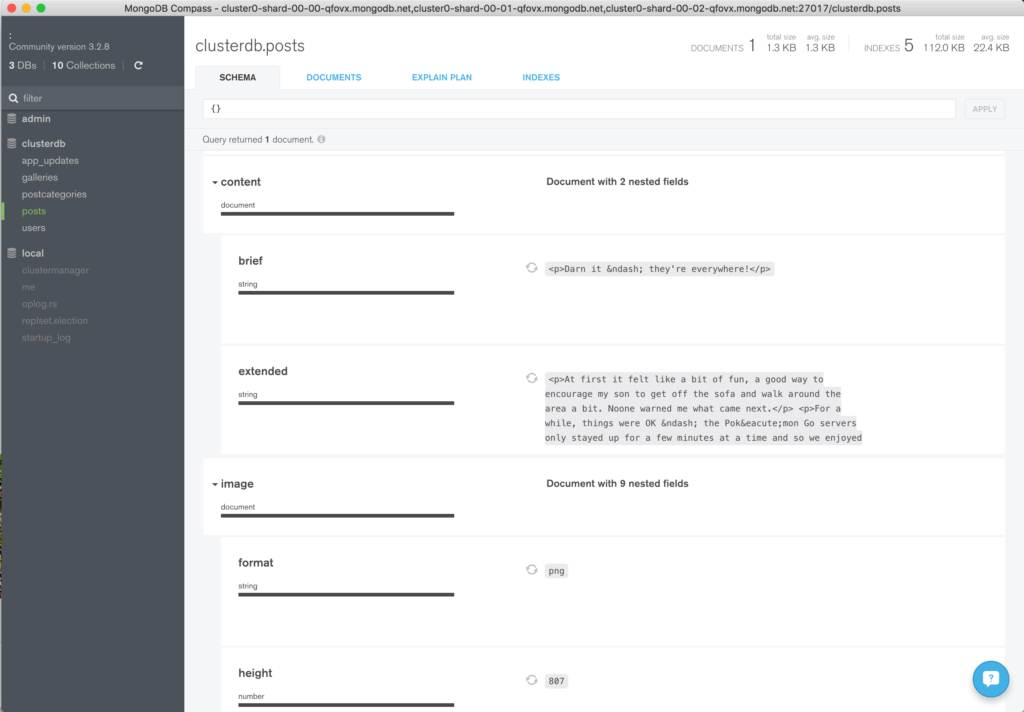

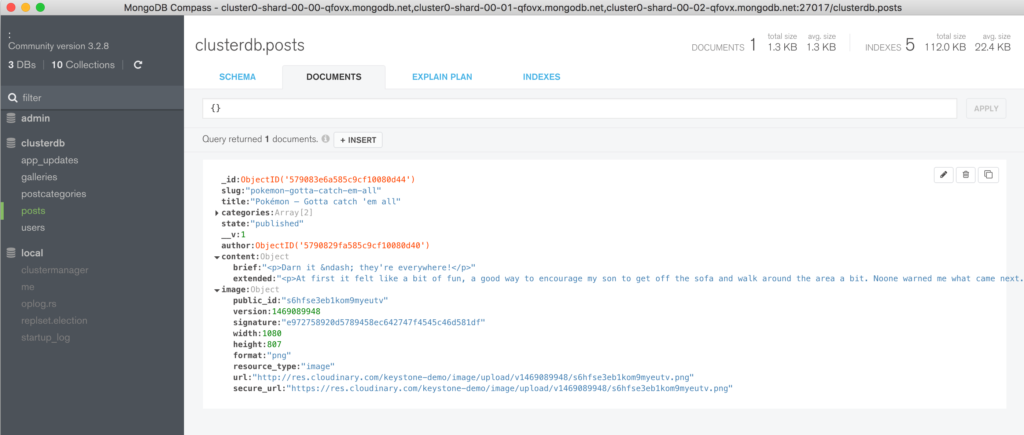

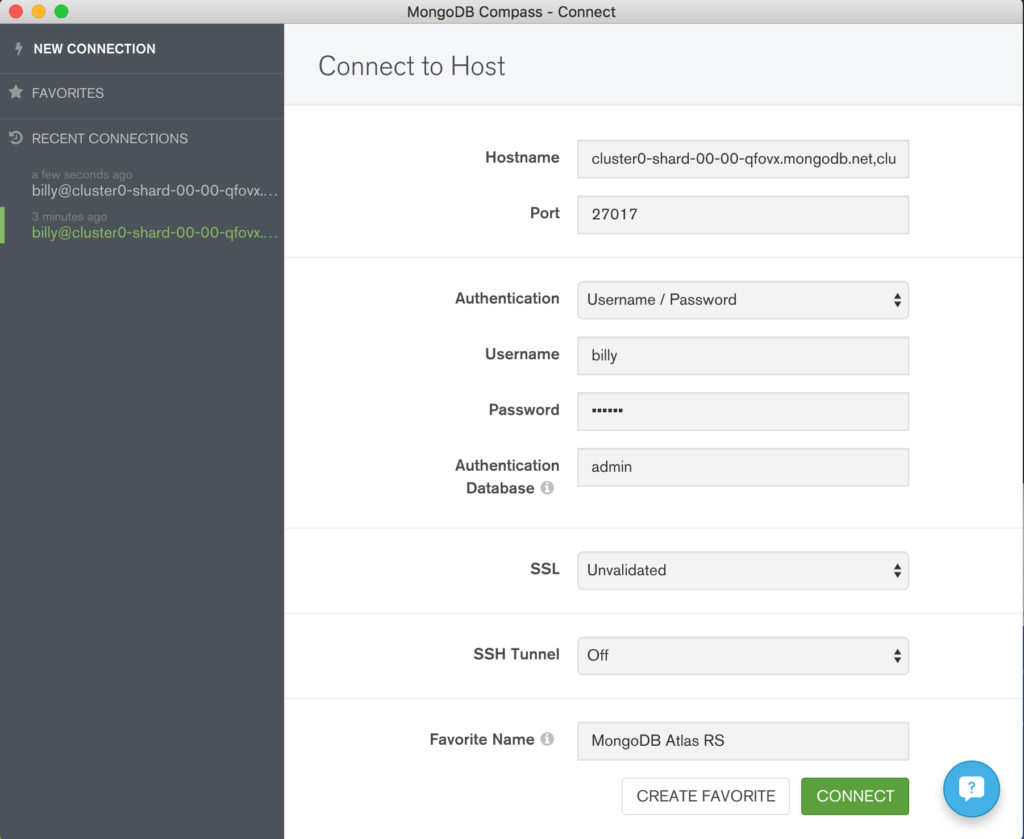

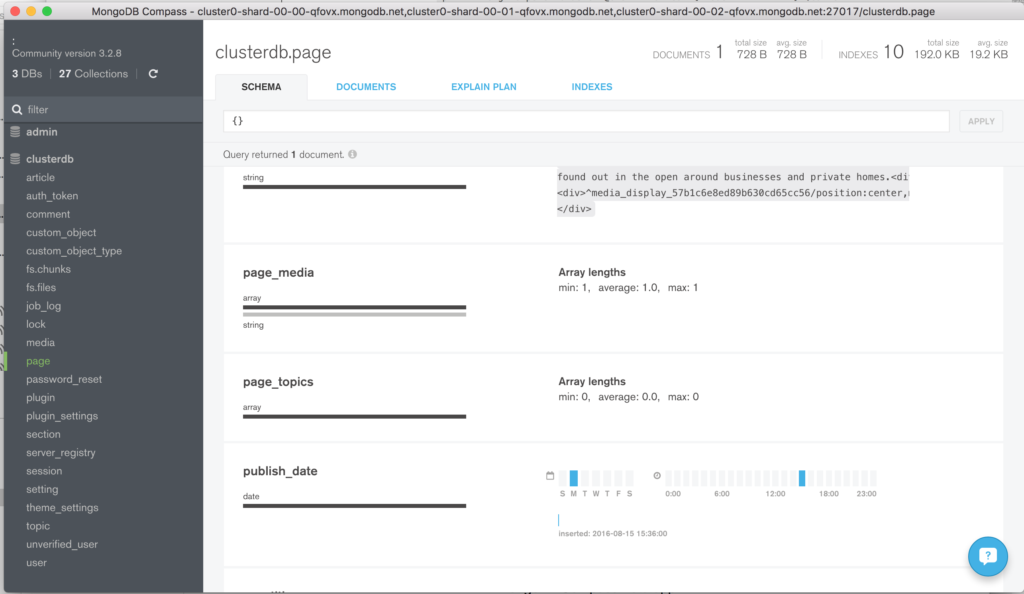

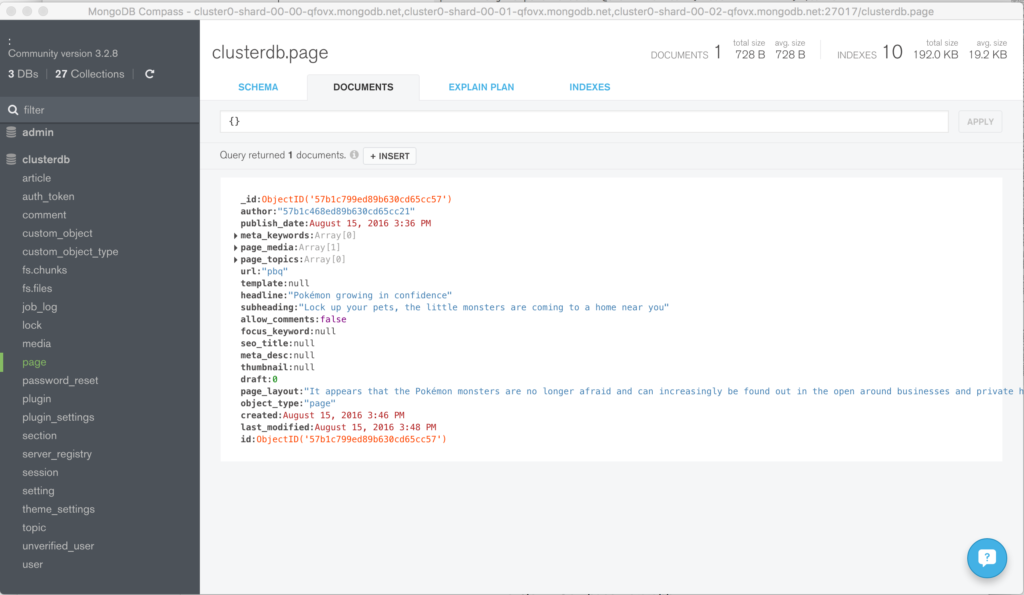

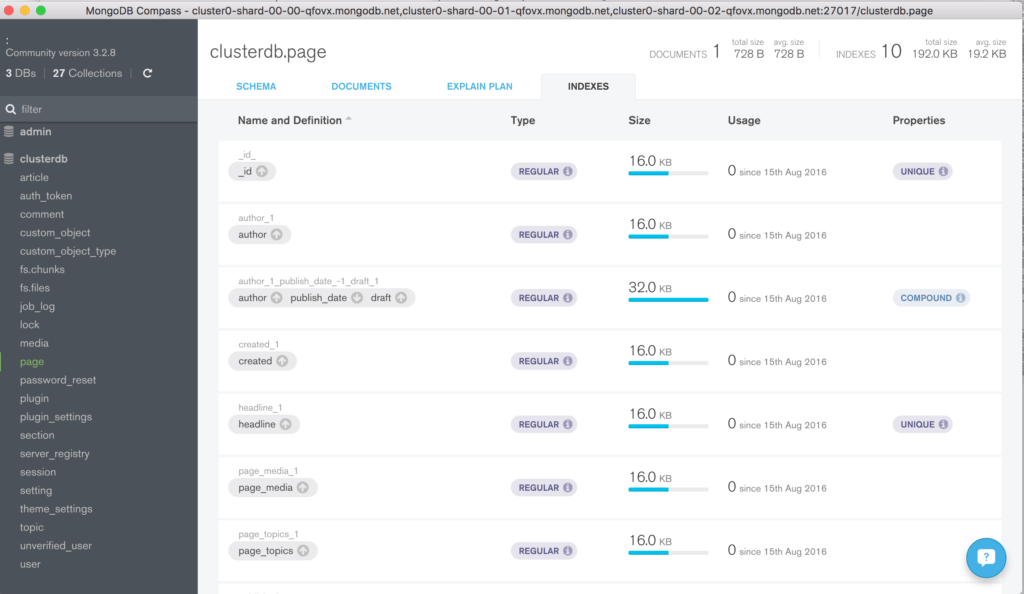

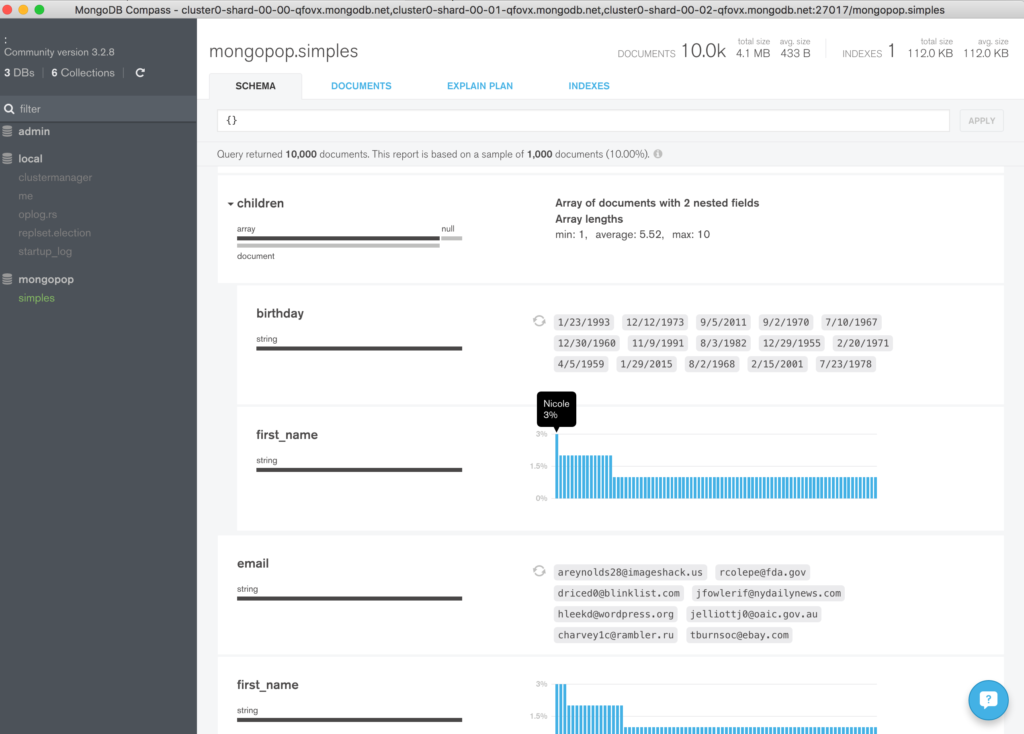

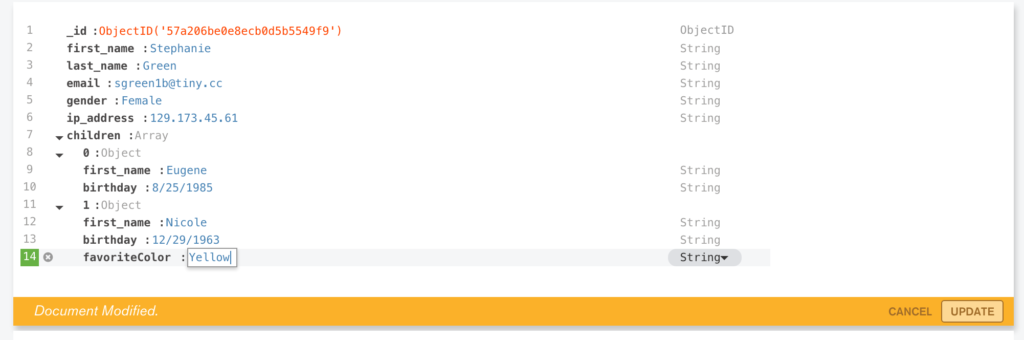

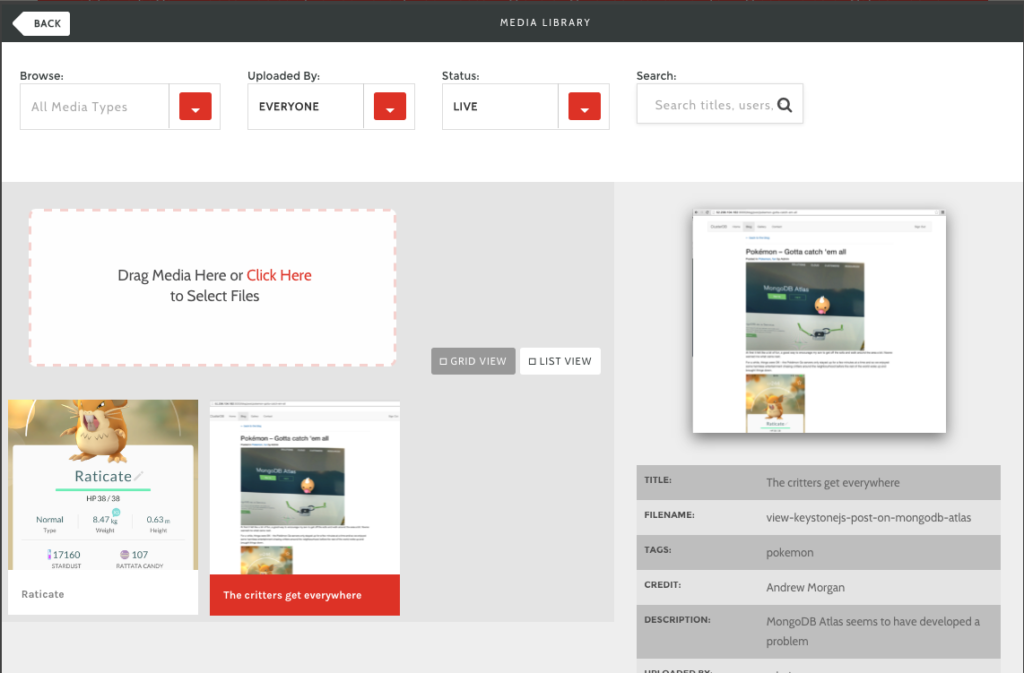

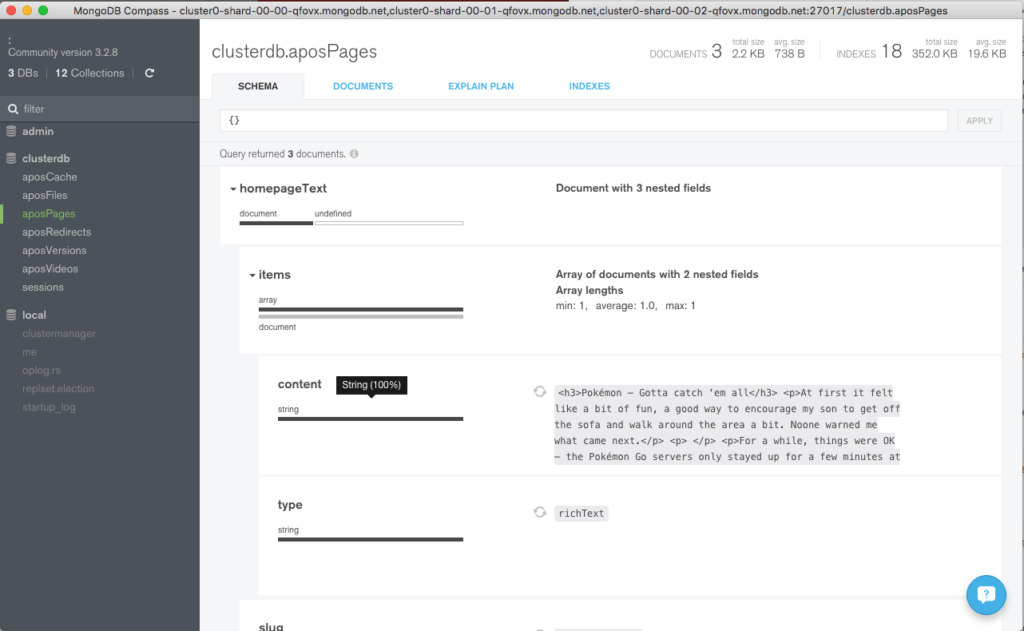

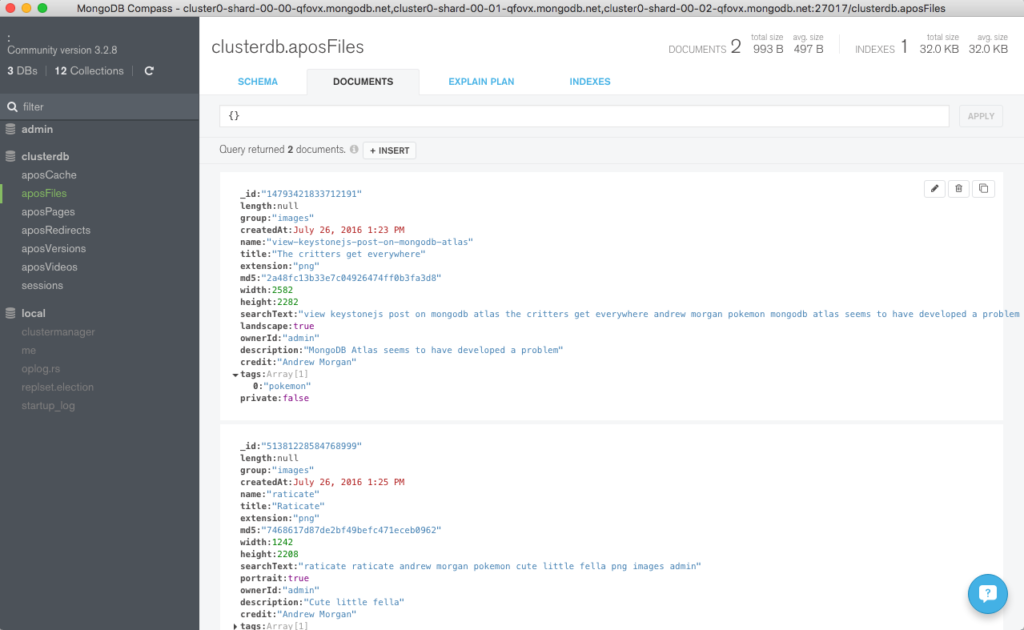

An even better option to understand the contents of these collections is to use MongoDB Compass (to be released with MongoDB 3.2). Figure 1 shows an overview of the homeSales collection and Figure 2 delves into its address sub-document.

These datasets (the homeSales and postcodes collections) can be downloaded here.

Figure 1: MongoDB Compass View of the homeSales Collection

Figure 2: Viewing Sub-Documents With MongoDB Compass

Building the First Pipeline

As the error messages from complex pipelines aren’t always very specific, it makes sense to start with a simple pipeline and then check the results before moving onto the next.

As a collection’s indexes are only used for the beginning stages in the pipeline (before any transformations are performed), it is often sensible to reduce the result set as much as possible with a $match stage to filter out any unnecessary documents. Ideally, the match would be against the sharding key so that fewer shards need to be included. For the first pipeline stage the cheaper property sales are going to excluded and so it would help to have a secondary index on the amount key:

db.homeSales.createIndex({amount: 1})

The first stage in the pipeline then filters out any sales of less than £3,000,000:

db.homeSales.aggregate([

{$match: {

amount: {$gte:3000000}}

}

])

The results can then be inspected to understand what will flow into the next stage in the pipeline:

{

{

"_id": ObjectId("56005dda80c3678b19799e52"),

"amount": 3000000,

"date": ISODate("2012-04-19T00:00:00Z"),

"address": {

"nameOrNumber": "TEMPLE FERRY PLACE",

"street": "MILL LANE",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 5ND"

}

},

...

{

"_id": ObjectId("56005dda80c3678b19799e5c"),

"amount": 5425000,

"date": ISODate("1999-03-15T00:00:00Z"),

"address": {

"nameOrNumber": "2 - 3",

"street": "THE SWITCHBACK",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 7RJ"

}

}

In the next stage, a left-outer join is performed – using $lookup – to find documents from the postcodes collection with a matching postcode so that the geographic location can be determined:

db.homeSales.aggregate([

{$match: {

amount: {$gte:3000000}}

},

{$lookup: {

from: "postcodes",

localField: "address.postcode",

foreignField: "postcode",

as: "postcode_docs"}

}

])

Which yields these results:

{

{

"_id": ObjectId("56005dda80c3678b19799e52"),

"amount": 3000000,

"date": ISODate("2012-04-19T00:00:00Z"),

"address": {

"nameOrNumber": "TEMPLE FERRY PLACE",

"street": "MILL LANE",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 5ND"

},

"postcode_docs": [

{

"_id": ObjectId("560053e280c3678b1978b293"),

"postcode": "SL6 5ND",

"location": {

"type": "Point",

"coordinates": [

51.549516,

-0.80702

]

}

}

]

},

...

"postcode_docs": [

{

"_id": ObjectId("560053e280c3678b1978b524"),

"postcode": "SL6 7RJ",

"location": {

"type": "Point",

"coordinates": [

51.536848,

-0.735835

]

}

}

]

}

The pipeline can then be extended with a $project stage to refactor the documents, removing any information that isn’t needed. The documents are then sorted in reverse-price order:

db.homeSales.aggregate([

{$match: {

amount: {$gte:3000000}}

},

{$lookup: {

from: "postcodes",

localField: "address.postcode",

foreignField: "postcode",

as: "postcode_docs"}

},

{$project: {

_id: 0,

saleDate: "$date",

price: "$amount",

address: 1,

location: "$postcode_docs.location"}},

{$sort:

{

price: -1

}}

])

The address and physical location of every home sale, starting with the most expensive is then shown:

{

"address": {

"nameOrNumber": "2 - 3",

"street": "THE SWITCHBACK",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 7RJ"

},

"saleDate": ISODate("1999-03-15T00:00:00Z"),

"price": 5425000,

"location": [

{

"type": "Point",

"coordinates": [

51.536848,

-0.735835

]

}

]

},

...

{

"address": {

"nameOrNumber": "TEMPLE FERRY PLACE",

"street": "MILL LANE",

"town": "MAIDENHEAD",

"county": "WINDSOR AND MAIDENHEAD",

"postcode": "SL6 5ND"

},

"saleDate": ISODate("2012-04-19T00:00:00Z"),

"price": 3000000,

"location": [

{

"type": "Point",

"coordinates": [

51.549516,

-0.80702

]

}

]

}

Building Further Pipelines

If all of the sales need to be analyzed (rather than just the most expensive few) then there would be too many results from the previous pipeline to be easily understood. For this reason, the pipeline is modified so that extra analysis and aggregation is performed within the database. This can be done using a $group stage – in this case to produce sales statistics by year:

db.homeSales.aggregate([

{

$group:

{

_id: {$year: "$date"},

higestPrice: {$max: "$amount"},

lowestPrice: {$min: "$amount"},

averagePrice: {$avg: "$amount"},

priceStdDev: {$stdDevPop: "$amount"}

}

},

{

$sort: {_id: 1}

}

])

{

"_id": 1995,

"higestPrice": 1000000,

"lowestPrice": 12000,

"averagePrice": 114059.35206869633,

"priceStdDev": 81540.50490801703

},

...

{

"_id": 2015,

"higestPrice": 1688000,

"lowestPrice": 125000,

"averagePrice": 451413.23917137476,

"priceStdDev": 228293.6005201898

}

Note that this is using the $stdDevPop (standard deviation) aggregation operator being introduced in MongoDB 3.2.

There’s more precision than needed for some of the keys and so a common pattern can be employed – use a final $project stage to tidy up the data:

db.homeSales.aggregate([

{

$group:

{

_id: {$year: "$date"},

higestPrice: {$max: "$amount"},

lowestPrice: {$min: "$amount"},

averagePrice: {$avg: "$amount"},

priceStdDev: {$stdDevPop: "$amount"}

}

},

{

$sort: {_id: 1}

},

{

$project:

{

_id: 1,

higestPrice: 1,

lowestPrice: 1,

averagePrice: {$trunc: "$averagePrice"},

priceStdDev: {$trunc: "$priceStdDev"}

}

}

])

{

"_id": 1995,

"higestPrice": 1000000,

"lowestPrice": 12000,

"averagePrice": 114059,

"priceStdDev": 81540

},

...

{

"_id": 2015,

"higestPrice": 1688000,

"lowestPrice": 125000,

"averagePrice": 451413,

"priceStdDev": 228293

}

It often makes sense to store the results in a new collection – either because the results will be reused many times or that subsequent aggregation pipelines will reference them. This is simple to achieve using a $out stage; note that:

- If the target collection already exists then its contents will be overwritten

- If used then the

$out must be the final stage in the pipeline

The following example writes the results to a collection called annualHomePrices:

db.homeSales.aggregate([

{

$group:

{

_id: {$year: "$date"},

highestPrice: {$max: "$amount"},

lowestPrice: {$min: "$amount"},

averagePrice: {$avg: "$amount"},

priceStdDev: {$stdDevPop: "$amount"}

}

},

{

$sort: {_id: 1}

},

{

$project:

{

_id: 0,

year: "$_id",

highestPrice: 1,

lowestPrice: 1,

averagePrice: {$trunc: "$averagePrice"},

priceStdDev: {$trunc: "$priceStdDev"}

}

},

{

$out: "annualHomePrices"

}

])

> db.annualHomePrices.findOne()

{

"_id": ObjectId("560957ac29a5574d557d426d"),

"highestPrice": 1000000,

"lowestPrice": 12000,

"averagePrice": 114059,

"priceStdDev": 81540,

"year": 1995

}

These “interim” results could then be used to build further result sets – without the need to run all of that processing again. As an example, using a simple $project stage, the gap between the highest and lowest house sale can be calculated for each year:

db.annualHomePrices.aggregate([

{$project:

{

Year: "$year",

hightToLowPriceGap: {

$subtract: ["$highestPrice", "$lowestPrice"]

},

_id: 0

}

}

])

{

"Year": 2012,

"hightToLowPriceGap": 2923000

},

{

"Year": 2013,

"hightToLowPriceGap": 5092250

},

{

"Year": 2014,

"hightToLowPriceGap": 3883050

},

{

"Year": 2015,

"hightToLowPriceGap": 1563000

}

As a final stage in this post, a pipeline is built to find the postal code and geographic location of the most expensive house sale for each of the recorded years:

Perform a $sort on the full homeSales data set so that the documents are ordered from most expensive sale first

$group the results based on the year of the home sale, deriving the priciestPostCode from the $first document in that group (year). Because the documents were sorted on price before entering the group stage, the first document is also the one with the highest price

$lookup the postal code in the postcodes collection to get the geolocation data

$sort the results by year

$project just the data that is of interest

db.homeSales.aggregate([

{

$sort: {amount: -1}

},

{

$group:

{

_id: {$year: "$date"},

priciestPostCode: {$first: "$address.postcode"}

}

},

{

$lookup:

{

from: "postcodes",

localField: "priciestPostCode",

foreignField: "postcode",

as: "locationData"

}

},

{

$sort: {_id: -1}

},

{

$project:

{

_id: 0,

Year: "$_id",

PostCode: "$priciestPostCode",

Location: "$locationData.location"

}

}

])

{

"Year": 2015,

"PostCode": "SL6 9UD",

"Location": [

{

"type": "Point",

"coordinates": [

51.558455,

-0.756023

]

}

]

},

{

"Year": 2014,

"PostCode": "SL6 1UP",

"Location": [

{

"type": "Point",

"coordinates": [

51.51407,

-0.704414

]

}

]

},

...

Adding Some Coding Glue and Geolocation

Obviously, there are limits as to how much can be achieved with a single aggregation pipeline but with the addition of just a little code outside of the database (in this example, JavaScript in the mongo shell), additional results can be produced.

We start by repeating an aggregation from the previous section but store the data in a collection so that we can build upon those results:

db.homeSales.aggregate([

{

$sort: {amount: -1}

},

{

$group:

{

_id: {$year: "$date"},

priciestPostCode: {$first: "$address.postcode"}

}

},

{

$lookup:

{

from: "postcodes",

localField: "priciestPostCode",

foreignField: "postcode",

as: "locationData"

}

},

{

$sort: {_id: -1}

},

{

$project:

{

_id: 0,

Year: "$_id",

PostCode: "$priciestPostCode",

Location: "$locationData.location"

}

},

{

$out: "hottestLocations"

}

])

db.hottestLocations.findOne()

{

"_id": ObjectId("5629108c96be45aba9cb0c98"),

"Year": 2015,

"PostCode": "SL6 9UD",

"Location": [

{

"type": "Point",

"coordinates": [

51.558455,

-0.756023

]

}

]

}

In this example, geospatial operations are performed on the location from the postcodes collection and so a geospatial index should be added:

db.postcodes.createIndex({location: "2dsphere"})

A function is created that, given a location, will find the five nearest postcodes – taking advantage of a $geoNear stage – note that this must be the first stage in the pipeline:

var findNeighbours = function (spot, yearTag) {

var result = db.postcodes.aggregate([

{

$geoNear:

{

near: spot,

distanceField: "distance",

num: 5,

spherical: true

}

},

{

$group: {

_id: yearTag,

"neighbours": {

$addToSet: "$postcode"

}

}

}

]);

return result.result;

}

Then, for each of the hottestLocations collection, that function is called to display and the contents of the returned cursor are displayed:

db.hottestLocations.find().forEach(function(myDoc) {

var myCursor = findNeighbours(myDoc.Location[0], myDoc.Year);

myCursor.forEach(printjson);

})

The result is a list of the 5 closest postcodes to the top selling home each year:

{

"_id": 1995,

"neighbours": [

"SL6 2NL",

"SL6 2JL",

"SL6 2NB",

"SL6 2JN",

"SL6 2NA"

]

}

...

{

"_id": 2015,

"neighbours": [

"SL6 9XB",

"SL6 9XL",

"SL6 9UE",

"SL6 9UB",

"SL6 9UD"

]

}

Bonus Query – For Those Choosing a School

There’s often a correlation between the house prices near a school and that school’s performance. So, if you were considering a specific school then it might make sense to check house prices in the area surrounding that school.

The following pipeline will find house price statistics, by year, for all postcodes within a 3 km radius of the school – which is located at coordinates (51.5156725, -0.727387):

db.postcodes.aggregate([

{

$geoNear:

{

near:

{

"type": "Point",

"coordinates": [

51.5156725,

-0.727387

]},

distanceField: "distance",

num: 10000,

maxDistance: 3000,

spherical: true

}

},

{

$lookup: {

from: "homeSales",

localField: "postcode",

foreignField: "address.postcode",

as: "priceData"

}

},

{

$unwind: "$priceData"

},

{

$group:

{

_id: {$year: "$priceData.date"},

highestPrice: {$max: "$priceData.amount"},

lowestPrice: {$min: "$priceData.amount"},

averagePrice: {$avg: "$priceData.amount"},

priceStdDev: {$stdDevPop: "$priceData.amount"}

}

},

{

$project:

{

_id: 0,

Year: "_id",

highestPrice: 1,

lowestPrice: 1,

averagePrice: {$trunc: "$averagePrice"},

priceStdDev: {$trunc: "$priceStdDev"}

}

},

{

$sort:

{

"Year": -1

}

}

]);

{

"highestPrice": 1350000,

"lowestPrice": 125000,

"averagePrice": 410593,

"priceStdDev": 182358,

"Year": 2015

},

...

{

"highestPrice": 930000,

"lowestPrice": 12000,

"averagePrice": 103455,

"priceStdDev": 68615,

"Year": 1995

}

Limitations

As seen, it’s possible to build up sophisticated analytical queries using the enhanced aggregation features – especially when pipelines are combined with a little application or scripting glue.

Some limitations that you may meet include:

-

$geoNear can only be used as the first stage in the pipeline

-

$lookup only supports equality for the match and the equality has to be between a single key from each collection

- The right-collection for

$lookup cannot be sharded

- The pipeline is linear; there are no forks and once data has been aggregated, the lost details can’t be reused later in the pipeline (this is why writing results to a new collection using

$out can be helpful)

- One can remove information at each stage but it’s impossible to add new raw data (other than through

$lookup)

- Indexes are only used for the beginning stages of the pipeline (and right tables in any subsequent

$lookup)

-

$out can only be used in the final stage of the pipeline

When to Use Full Data Visualization Solutions

A lot can be achieved directly in the database – especially when augmented with a small amount of code. So when would it make sense to use a BI visualization tool such as Tableau. The capabilities will vary from product to product but some general considerations are given here:

-

Visualization – displaying information in graphs and on maps (rather than in JSON result sets) makes it much simpler for the human mind to see patterns and draw conclusions from the data (see Figure 1 which is based on the same data set used earlier and graphically shows the highest home sale price by location and year)

-

Multiple Data Sources – combining data from multiple sources (data blending); for example from a MongoDB database and an Excel spreadsheet can greatly broaden the context of reports

-

Interactivity – visualization tools make it simple to create interactive queries/dashboards where business user can graphically tweak parameters to get precise results and test theories

-

Skills – performing all of the analytics directly in MongoDB requires knowledge of the MongoDB Query Language and possibly some basic coding skills. Using the visualization tools is analogous to using intermediate features in Microsoft Excel such as pivot tables and graphs and so it opens up the ability to analyze the data to a broader set of users in the organization

-

Extra functions – for example, the ability to add trend lines to a chart

Figure 1: MongoDB Data Visualized in a Tableau Map

MongoDB 3.2 introduces the MongoDB Connector for BI which allows Business Intelligence tools such as Tableau to access data from MongoDB using SQL – opening up a range of new options for performing analytics on live data.

Next Steps

To learn more about what’s coming up in MongoDB 3.2, register for the What’s new in MongoDB 3.2 webinar and review the MongoDB 3.2 release notes.

There’s a webinar recording explaining more about $lookup and the other aggregation features.

To get the best understanding of the new features then you should experiment with the software which is available in the MongoDB 3.2 (not for production) download – to use the new $lookup aggregation theMongoDB Enterprise Advanced download should be used.

The reason MongoDB releases development releases is to give the community a chance to try out the new software – and we hope that you’ll give us feedback, whether it be by joining the MongoDB 3.2 bug hunt or commenting on this post.